ESSAYS ON ECONOMIC COORDINATION AND CHANGE

from Industry and Labour Markets to Macroeconomic Regimes of Growth

Candidate:

Maria Enrica Virgillito

Supervisor:

Prof. Giovanni Dosi

Commission:

Prof. Russell Cooper

Prof. Richard Freeman

Prof. Francisco Louçã

Prof. Alessandro Nuvolari

Prof. Angelo Secchi

processes behind the real world. His endless desire to discover the new and investigate the economic processes, his curiosity and enthusiasm, his ability to debunk the common wisdom are the features that I would like to inherit from his mentoring. I am indebted to Prof. Marcelo Carvalho Pereira. Our collaboration has been an extremely exciting, challenging and fruitful experience. His guidance and support have been essential in undertaking my research. A special thanks goes to Prof. Alessandro Nuvolari, Prof. Andrea Roventini and Prof. Angelo Secchi, who markedly contributed to shaping my research profile. The final version of this dissertation was written during a fruitful and challenging visiting period at the Labor and Worklife Program, Harvard University. Prof. Richard Freeman, who gave me the incredible opportunity to visit him, has been an enviable source of intellectual exchange and understanding of labour markets. I am honoured to have Prof. Russell Cooper and Prof. Francisco Louçã participating as committee members in my viva: reading their contributions has had a considerable impact on the elaboration of my thesis. Finally, I wish to mention all the people of the Institute of Economics, which is an outstanding and inspiring research community, able to instil a very rare sense of belonging to a grand research project: having the chance to discuss and confront ideas with this network of people has enormously improved my research profile. The organizational and administrative support received from Laura Bevacqua and Laura Ferrari deserves a major acknowledgement.

1 Foundations of Evolutionary Economics

1.1 Evolutionary Economics

1.2 Building blocks

1.2.1 Routines, Rule of Thumbs, Heuristics

1.2.2 Coordination vis-à-vis Equilibrium

1.2.3 Heterogeneity

1.2.4 Innovation and Growth

1.2.5 History

1.2.6 Institutions

1.2.7 Methodology: why computer simulation

1.3 Pushing the Frontier

II Industrial dynamics

2 The footprint of evolutionary processes of learning and selection upon the statistical properties of industrial dynamics

2.1 Introduction

2.2 Empirical stylised facts: productivity, size and growth

2.2.1 Productivity distribution and growth

2.2.2 Size distribution

2.2.3 Market turbulence

2.2.4 Fat-tailed distributions of growth rates

2.2.5 Growth-size scaling relationships

2.3 Theoretical interpretations

2.4 The model

2.4.1 Idiosyncratic learning processes

2.4.2 Market selection and birth-death processes

2.4.3 Timeline of events

2.5 Model properties and simulation results

2.5.1 Productivity distribution

2.5.2 Market turbulence and concentration

2.5.3 Size distribution

2.5.4 Firm growth

2.5.5 Scaling growth-variance relationship

2.5.6 Cumulativeness and selection

2.6 Conclusions

3 On the robustness of the fat-tailed distribution of firm growth rates: a global sensitivity analysis

3.1 Introduction

3.2 Empirical and theoretical points of departure

3.3 Exploring the robustness of the fat tails

3.4 Discussion

3.5 Conclusions

III Labour markets and archetypes of capitalism

4 When more flexibility yields more fragility. The microfoundation of Keynesian aggregate unemployment

4.1 Introduction

4.3.5 Timeline of the events

4.3.6 The explored regimes

4.4 Empirical Validation

4.5 When more flexibility yields more fragility

4.6 Conclusions

Appendices

5 The Effects of Labour Market Reforms upon Unemployment and Income Inequalities

5.1 Introduction

5.2 Labour market regimes

5.3 Policy experiment results

5.4 Sensitivity analysis and further policy implications

5.5 Conclusions

Appendices

IV Macroeconomic regimes of growth

6 Profit-driven and demand-driven investment growth and fluctuations in different accumulation regimes

6.1 Introduction

6.2 “Classical” and Keynesian fluctuations

6.4 A discrete time version of the Goodwin Growth Cycle model

6.5 A generalized Goodwin plus Keynes model

6.5.1 System dynamics under different regimes

6.5.2 Stable regimes: “Victorian” versus “Keynesian” nexus

6.5.3 Chaotic regime

6.5.4 Good and bad “Keynesian” regimes

6.6 Conclusions

Appendices

V Summary and Conclusions

6.1 Summary

Source: Dosi and Grazzi, (2006).

2.3 Tent shaped productivity growth rate. Italy, Istat Micro.3 Dataset. Source: Dosi et al., (2012).

2.4 Skewness in the size distribution. Elaboration on Fortune 500. Source: Dosi et al., (2008).

2.5 Tent shaped size growth rate, Italy, Istat Micro.1 Dataset. Source: Bottazzi and Secchi, (2006a).

2.6 Variance-size relation. Elaboration on PHID, Pharmaceutical Industry Dataset. Source: Bottazzi and Secchi, (2006b).

2.7 Log-normalised productivity distributions across regimes.

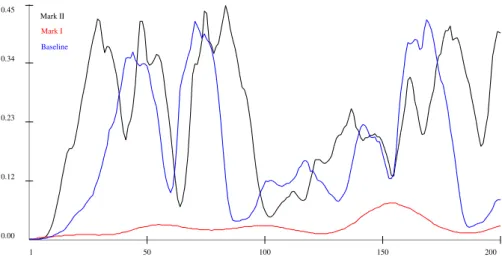

2.8 Market turbulence index (y axis) over time (x axis) across regimes.

2.9 Herfindahl-Hirschman concentration index (y axis) over time (x axis) across regimes.

2.10 Log rank-size distribution across regimes (model results and Log-normal fits).

2.11 Growth rates distribution across regimes (model results and Subbotin fits).

2.12 Growth rates distributions under different innovation shocks distributions across regimes (model results and Subbotin fits).

2.13 Scaling growth-variance relationship across regimes (model results and linear fits).

2.14 Firm growth rate distributions under different degrees of cumulativeness γ in Schumpeter Mark II Regime.

2.15 Firm growth rate distributions under different selection pressures A in Schumpeter Mark II Regime.

3.1 Sensitivity analysis of parameters effects on meta-model response (Beta shocks).

3.2 Response surfaces – Beta shocks. All remaining parameters set at default settings (round mark at default smin and A).

3.3 Sensitivity analysis and response surfaces – Laplace and Gaussian shocks. All remaining parameters set at default settings (round mark at default smin, N and µ).

4.1 The model structure. Boxes in bold style represent heterogeneous agents populations

4.2 Capital-good Sector, Fordist (left-hand side) and Competitive (right-hand side) Regimes

4.3 Consumption-good Sector, Fordist (left-hand side) and Competitive (right-hand side) Regimes

4.4 Labour Market Stylised Facts

4.5 Labour Market Stylised Facts

4.6 Labour Market Stylised Facts

4.7 Labour Market Stylised Facts

4.8 Aggregate dynamics of alternative scenarios

4.9 Comparison of key metrics on GDP and productivity among alternative scenarios (gray bands indicate the Monte Carlo averages 95% confidence interval). The lines are simply a visual

tion at t=100).

5.4 Personal income inequality: wages dispersion (regime transition at t=100).

5.5 Personal income inequality: Gini coefficient (regime transition at t=100).

5.6 Global Sensitivity Analysis: Competitive 2 alternative scenario.

5.7 Global Sensitivity Analysis (continued): Competitive 2 alternative scenario.

6.1 Goodwin discrete time model. Parameters values: A = 0.5, α = 0.02, β = 0.01, δ = 0.02, λ = 0.03, v̄ = 0.95.

6.2 Bifurcation regions

6.3 The “Goodwinian” phase of the system

6.4 “Classic” investment dynamics with path-dependent wages and price making firms

6.5 Multiple attractors

6.6 Good and bad “Keynesian” regimes

7 “Classic” investment dynamics with path-dependent wages and price making firms. Parameters values: a = −0.9, b = −1.4, c = −4, d = 1.3, e = 0.98

8 Multiple attractors. Parameters values: a = −1.5, b = −1.4, c =

List of Tables

2.1 Turbulence index. Italy, Istat Micro.3 Dataset. Source: Grazzi et al., (2013)

2.2 Parameters and simulation default settings.

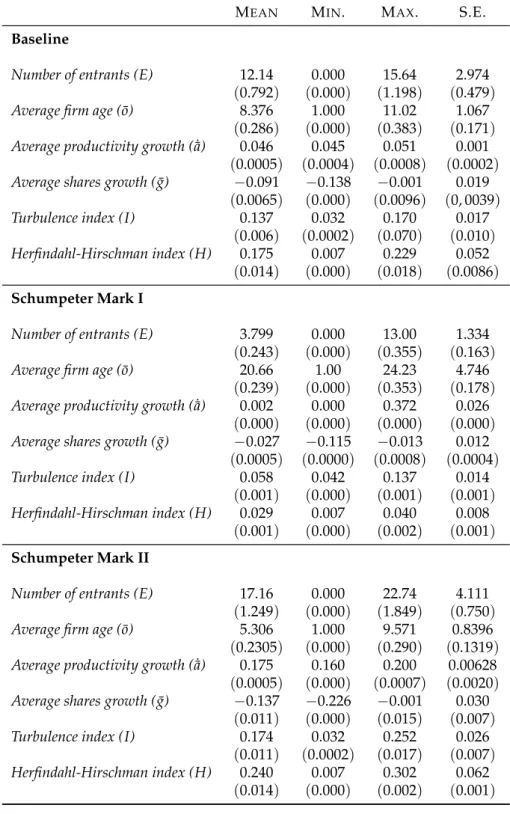

2.3 Unimodality, symmetry, stationarity and ergodicity tests of main result variables across regimes (Hdip: Hartigans’ dip, M: Mira, MGG: Miao-Gel-Gastwirth, ADF: Augmented Dickey-Fuller, KPSS: Kwiatkowski-Phillips-Schmidt-Shin, KS: Kolmogorov-Smirnov). Probability of non-rejection of unimodality, symmetry, stationarity and ergodicity hypotheses at 5% significance for 50 simulation runs.

2.4 AR(1) autocorrelation coefficient for normalised productivity across regimes.

2.5 Descriptive statistics of main resulting variables across regimes (averages for 50 runs, numbers in parenthesis indicate the Monte Carlo standard errors).

2.6 Gibrat’s Law tests across regimes. Probability of rejection of the null hypothesis at 5% significance and estimated coefficient MC mean and standard error for 50 simulation runs.

2.7 Estimation of the Subbotin b parameter across regimes under different innovation shocks (averages for 50 runs, numbers in parenthesis indicate the MC standard errors).

3.3 Kriging meta-model estimation.

4.1 The two archetypical labour regimes

4.2 Stylised facts matched by the model

4.3 Correlation structure for Shimer, (2005) statistics.

4.4 Key macro statistics (bpf: bandpass-filtered (6,32,12) series; Monte Carlo simulation standard errors and unit roots test p-values in parenthesis).

4.5 Fordist regime: Correlation structure between GDP and some macro variables (non-rate/ratio series are bandpass-filtered (6,32,12); Monte Carlo simulation standard errors and lag significance test p-values (5% significance) in parenthesis).

4.6 Competitive regime: Correlation structure between GDP and some macro variables (non-rate/ratio series are bandpass-filtered (6,32,12); Monte Carlo simulation standard errors and lag significance test p-values (5% significance) in parenthesis).

4.7 Comparison of [1] baseline Fordist regime with: [2] Competitive with full indexation and benefits, [3] Competitive with partial indexation and no benefits, [4] Competitive with full indexation and no benefits, and [5] Competitive with no indexation or benefits (scenario/baseline ratio and p-value for a two means test where H0: no difference with baseline).

9 Model parameters and corresponding values. The labour market- and policy-specific parameters values are the baseline Fordist regime ones (t=0).

5.1 The two archetypal labour regimes main characteristics configured in the model.

5.2 Scenario/baseline ratio and p-value for a two means test with H0: no difference with baseline. Average values across 50 Monte Carlo runs.

5.3 Critical model parameters selected for sensitivity analysis and corresponding values. The “chg” superscript indicates parameters changed during regime transition at t=100.

4 Regime-specific parameter values. Competitive values apply for all scenarios.

5 Average p-values and rate of rejection of H0 at 5% significance across 50 Monte Carlo simulation runs. ADF/PP H0: series non-stationary; KPSS H0: series stationary; KS/AD/WW H0: series

of industry, moving to the organization of labour markets and ending with the analysis of growth regimes. The micro-macro slant will both structure the discussion and guide the organization of the papers. The evolutionary approach will inform the entire development of the thesis.

No empirical exercise is undertaken, even though providing plausible modelling descriptions of the empirical evidence is actually the main purpose of each chapter. The methodology used encompasses the analysis of stochastic processes, agent-based simulations, non-linear dynamics and statistical analysis. Throughout the thesis, a tension between reduced forms and fully-fledged models is clearly evident in the modelling approach. In fact, one of the intrinsic aims of the thesis is also to explore alternative but complementary modelling strategies, aware of the compromise between realism and tractability.

The thesis is organised in five parts: introduction, industrial dynamics, labour markets and archetypes of capitalism, macroeconomic regimes of growth, conclusions. More specifically, the first Chapter (1) will provide an introduction and a fil rouge of the thesis, positioning the thesis in the evolutionary literature.

The second part addresses the topic of industrial dynamics. Chapter 2, based on Dosi et al., (2015c), tries to interpret an ensemble of stylised facts on industrial dynamics which ubiquitously emerge across industries, levels of aggregation, times and countries. They include wide and persistent asymmetries in degrees of relative efficiency; skewed size distributions; persistent turbulence in market dynamics; negative scaling relations between firm sizes and variance in firm growth rates; and, last but not least, fat-tailed distributions of growth rates. We show that all these regularities can be accounted for by a simple evolutionary model wherein the dynamics is driven by some learning process by incumbents and entrants together with some process of competitive selection.

The third Chapter (3), based on Dosi et al., (2016f), investigates, by means of a Kriging meta-model, how robust the “ubiquitousness” feature of fat-tailed distributions of firm growth rates is with regard to a global exploration of the parameters space. The exercise confirms the high level of generality of the results obtained in Chapter 2 within a statistically robust global sensitivity analysis framework.

The third part focuses on labour markets and archetypes of capitalism. Chapter 4, based on Dosi et al., (2016e), presents an Agent Based Model (ABM) that investigates the effects of different archetypes of capitalism – in terms of regimes of labour governance defined by alternative mechanisms of wage determination, degree of labour protection and productivity sharing – on (i) macroeconomic dynamics (long-term rates of growth, GDP fluctuations, unemployment rates, inequality), (ii) labour market regularities. The model robustly shows that more flexibility in terms of variations of monetary wages and labour mobility – irrespective of how it fosters allocative efficiency, a topic outside the concerns of this paper – is prone to induce systematic coordination failures, higher macro volatility, higher unemployment, higher frequency of crises.

The fifth Chapter (5), based on Dosi et al., (2016d), building on the model of the previous Chapter (4), is meant to analyse the effects of labour market structural reforms. We introduce a policy regime change characterized by a set of structural reforms on the labour market, keeping constant the structure of the capital- and consumption-good markets. The Chapter shows how labour market structural reforms reducing workers’ bargaining power and compress-

dynamics in a phase of the system characterized by accelerator-driven investment (and, thus, demand-driven growth). Moreover, such a Keynesian set-up exhibits the coexistence of multiple attractors hinting at the multiplicity of growth paths whose selection plausibly depends on history and on public policies.

Finally, the fifth part concludes, presenting a summary of the findings and further developments.

Part I

Foundations of Evolutionary Economics

Evolutionary Economics

It is an evident stylised fact of modern economic systems that there are forces at work which keep them together and make them grow despite rapid and profound modifications of their industrial structures, social relations, techniques of production, patterns of consumption. We must better understand these forces in order to explain possible structural causes of instability and/or cyclicity in the performance variables [...]. It might be useful to start from a more explicit definition of “dynamic stability” and homoeostasis [...] what we could call the “bicycle postulate” applies: in order to stand up you must keep cycling. However, changes and transformation are by nature “disequilibrating” forces. Thus there must be other factors which maintain relatively ordered configurations of the system and allow a broad consistency between the conditions of material reproduction (including income distributions, accumulation, available techniques [...]) and the thread of social relations. In a loose thermodynamic analogy, it is what some recent French works call “regulation”. [Dosi, (1984) p. 99-100]

This doctoral thesis is meant precisely to present and discuss theoretical models able to provide an account of the two drivers of economic coordination and change. In particular, three main domains are under analysis: industrial dynamics, labour markets and archetypes of capitalism, and the ensuing macroeconomic regimes of growth. In fact we will present models wherein technological change is the source of growth occurring inside firms (Chapter 2), intertwines with wage determination and the institutional set-ups (Chapters 4 and 5), and can be led by Goodwin-like and Keynesian-like investment types of accumulation (Chapter 6).

The interplay between change and coordination is at the core of Evolutionary Economics theorizing. Drawing on Smith, Marx and Schumpeter, Evolutionary Economics interprets and formalizes the capitalist system as an evolving, complex one. Change and transformation of technologies, industrial structures, organizations and social relations shape the evolution of the capitalist system, which is characterised by a process of sustained growth punctuated by small and big crises. The process of economic growth is anything but steady. In fact, growth occurs in alternate phases (or waves): periods of disruptive innovation alternate with periods of relative calm and diffusion, without any clear or ex-ante predefined duration.

Industrial mutation – if I may use the biological term – that incessantly revolutionises the economic structure from within, incessantly destroying the old one, incessantly creating a new one. This process of Creative Destruction is the essential fact about capitalism. [Schumpeter, (1947), p. 83]

Marshall, one of the founding fathers of modern economics, was well aware that economics should be devoted to the understanding of the forces behind movement, stating in the Preface of the Principles:

The main concern of economics is thus with human beings who are impelled, for good and evil, to change and progress. Fragmentary

the next Chapters.

Building blocks

Routines, Rule of Thumbs, Heuristics

The first question we are going to address is: how do agents behave? In the tradition of Evolutionary Economics, routines, rules of thumb and heuristics have been the core pillars describing the behaviour of agents. In Cohen et al., (1996), Sidney Winter provides a conceptual classification of these three categories: (i) routines are automated, repetitive and unconscious behaviours which require a high level of information processing (e.g. working on an assembly line, making airline reservations or bank transactions); (ii) rules of thumb are relatively simple decision rules which require a low level of information processing and involve some quantitative decisions (e.g. the share of R&D over total sales, the fixed mark-up); (iii) heuristics are guidelines and behavioural orientations adopted to face problem solving which do not provide any ready-to-use solution but, conversely, broad strategies (e.g. in decision making, “Do what we did the last time a similar problem came up”, in bargaining, “Ask always more than what you desire”, in technology, “Make it smaller/Make it faster”).

These three elements are the traits of organizations and they are both (i) problem-solving action patterns and (ii) mechanisms of control and governance inside the organization. The conflicting nature of interests, knowledge, and preferences inside organizations, like firms, is well summarized in March, (1962). According to the latter, rather than as maximizing units, firms are better represented in terms of political coalitions. In fact, some regular patterns in the behaviour of firms, like (i) the tolerance of inconsistencies in both goals and decisions over time and inside the organization, (ii) decentralised goals and decisions with loose cross connections, (iii) slow shifts over time in response to shifts in the coalition, are typical footprints of organizations acting as political coalitions. But given the nature of the firm as a political coalition, how can we model its behaviour?

In the recent years, the introduction of the computer and the computer program model to the repertoire of the theorist has changed dramatically the theoretical potential of process description models of conflict systems. Complex process description models of organizational behaviour permit a development of a micro-economic theory of the firm. [March, (1962) p. 674–675]

In tracing the Agenda for Evolutionary Economics, Winter, (2016) underlines how the use of the maximization procedure is intrinsically inappropriate to study the behaviour of firms. March, (1962) shared the same concern long ago:

Generally speaking, profit maximization can be made perfectly meaningful (with some qualifications); but when made meaningful, it usually turns out to be invalid as a description of firm behaviour. [...] With few exceptions, modern observers of actual firm behaviour report persistent and significant contradictions between firm behaviour and the classical assumptions. [March, (1962) p. 670]

The profit-seeking maximizing behaviour of firms is carefully debunked in Winter, (1964), who raises two lines of criticism against the assumption of profit maximization: (i) even though it is conceivable that firms do have goals, it is not appropriate to assume that the goal is profit maximization, conversely organizations

it is not that people do not go through the calculations that would be required by the subjective expected utility decision – neoclassical thought has never claimed that they did. What has been shown is that they do not even behave as if they have carried out those calculations, and that result is a direct refutation of the neoclassical assumptions. [Simon, (1979), p. 507]

But, what is a heuristic?

A heuristic is a strategy that ignores part of the information, with the goal of making decisions more quickly, frugally, and/or accurately than more complex methods. [Gigerenzer and Gaissmaier, (2011), p. 454]

Given this definition, heuristics are not sub-optimal behaviours (like in Kahneman, 2002), but they are “locally ex-post optimal strategies” that outperform rational choices in large worlds characterised by substantive and procedural uncertainty (Dosi and Egidi, 1991).

A clear-cut example of the use of heuristics in trading behaviours is shown by the financial economists DeMiguel et al., (2009). Over a sample of seven datasets, they find that the simple 1/N rule outperforms the Markowitz portfolio strategy in fourteen alternative portfolio specifications. The estimation errors in the variance-covariance matrices were so large that very positive or very negative weights were elicited by rational allocation strategies. In fact, in changing and distributionally variant worlds, the simple 1/N strategy proves to be much more robust and less error prone.
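The mechanism can be illustrated with a minimal Monte Carlo sketch. It is not the empirical exercise of DeMiguel et al., (2009): the data are synthetic, and all names and parameter values (`n_assets`, `window`, the monthly mean of 0.005, the volatility of 0.05 and the correlation of 0.3) are assumptions made here for illustration. Assets are assumed identical in truth, so that the equal-weight portfolio is the true optimum and any shortfall of the estimated mean-variance portfolio is due to estimation error alone.

```python
import numpy as np

def sharpe(weights, mu, sigma):
    """Sharpe ratio of a portfolio evaluated under the true moments."""
    return float(weights @ mu / np.sqrt(weights @ sigma @ weights))

def plug_in_weights(sample):
    """Mean-variance weights proportional to inv(cov_hat) @ mean_hat, rescaled to sum to one."""
    mu_hat = sample.mean(axis=0)
    sigma_hat = np.cov(sample, rowvar=False)
    raw = np.linalg.solve(sigma_hat, mu_hat)
    return raw / raw.sum()

def compare(n_assets=10, window=60, n_trials=200, seed=0):
    """Compare 1/N with the plug-in rule on synthetic i.i.d. normal returns.

    Illustrative assumption: all assets share the same true mean, volatility
    and pairwise correlation, so 1/N is the true maximum-Sharpe portfolio.
    """
    rng = np.random.default_rng(seed)
    mu = np.full(n_assets, 0.005)
    corr = 0.3 * np.ones((n_assets, n_assets)) + 0.7 * np.eye(n_assets)
    sigma = 0.05 ** 2 * corr
    equal = np.full(n_assets, 1.0 / n_assets)

    sharpe_plug_in, largest_weight = [], []
    for _ in range(n_trials):
        # Estimate moments on a short window, then evaluate under the truth.
        sample = rng.multivariate_normal(mu, sigma, size=window)
        w = plug_in_weights(sample)
        sharpe_plug_in.append(sharpe(w, mu, sigma))
        largest_weight.append(np.abs(w).max())

    return (sharpe(equal, mu, sigma),
            float(np.mean(sharpe_plug_in)),
            float(np.median(largest_weight)))

sharpe_equal, sharpe_mv, typical_max_weight = compare()
print(f"1/N Sharpe: {sharpe_equal:.3f}")
print(f"plug-in mean-variance Sharpe (average): {sharpe_mv:.3f}")
print(f"median largest absolute plug-in weight: {typical_max_weight:.2f}")
```

Because the sketch rigs the truth in favour of 1/N, it only isolates the cost of estimation error; with heterogeneous true moments the comparison would depend on the length of the estimation window relative to the number of assets.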

Coordination vis-à-vis Equilibrium

The term Evolutionary recalls the field of Biology, wherein the processes of mutation and selection among species are at the core of evolution. Already in the Marshallian definition of Economics, the latter was conceived to be much closer to Biology than to Mechanical Physics:

The Mecca of the economist lies in economic biology rather than in economic dynamics. But biological conceptions are more complex than those of mechanics; a volume on Foundations must therefore give a relatively large place to mechanical analogies; and frequent use is made of the term “equilibrium,” which suggests something of statical analogy. This fact, combined with the predominant attention paid in the present volume to the normal conditions of life in the modern age, has suggested the notion that its central idea is “statical”, rather than “dynamical”. But in fact it is concerned throughout with the forces that cause movement: and its key-note is that of dynamics, rather than statics. [Marshall, (1890), vol. 1, p. xiv]

Nonetheless, the ultimate goal of neoclassical economic analysis has been to study market coordination as an equilibrium outcome. Coordination is not an easy task: after all, economic processes are the results of individual decision making characterised by possible ex-ante inconsistencies. Yet economic agents coordinate themselves inside organizations, institutions, societies. Why do we observe such emergence of order (where the meaning of order should be accurately distinguished from the notion of equilibrium)? As is well known, the neoclassical response to the problem of coordination has been by means of prices: exchange among decentralised agents, who are pursuing their own utility-maximizing transactions, leads to the emergence of a market.

tition and asymmetric information), the processes of interactions and evolution of behaviour have been remarkably neglected.

If dynamics and interactions count, the concept of and the search for equilibrium solutions need to be rethought and replaced by the concept of and the search for coordination patterns. In fact, the usual concern for equilibrium does not find much place inside evolutionary theorizing, as a direct consequence of choosing the route of realism vis-à-vis instrumentalism. No ex-ante market clearing condition, necessary to endogenously determine a closed solution for the unknown variables (e.g. prices and quantities), is superimposed. Evolutionary economic models are conversely meant to understand the coordination patterns that result from the interaction of heterogeneous agents. The scholar is not interested in finding any equilibrium solution but, conversely, in identifying emergent properties. According to the seminal contribution by Lane, (1993), emergent properties are defined as:

An emergent property is a feature of a history that (i) can be described in terms of aggregate-level constructs, without reference to the attributes of specific MEs (Microlevel Entities); (ii) persists for time periods much greater than the time scale appropriate for describing the underlying micro-interactions; and (iii) defies explanation by reduction to the superposition of “built-in” micro-properties of the AW. [Lane, (1993), p. 90-91]

Then, let us suppose we have an artificial world populated by machine-producers (ME) who realize new products and machine-buyers (ME) who order these machines to produce a homogeneous good sold to workers (ME). Whenever aggregate output, consumption and investment exhibit a sustained long-run growth path, this identifies an emergent property. Analogously, consider an economy populated by firms who post vacancies to hire workers, and workers who send applications to a given number of firms: whenever a Beveridge Curve or a Matching Function emerges, these are emergent properties not directly linked to the attributes of the microlevel entities.

The emergent properties are conceived to be meta-stable, rather than unique or stable equilibria: whenever attributes at the microlevel are changed, the emergent properties can also be fairly different. Additionally, artificial economies are mainly high-dimensional stochastic models, hardly treatable analytically. Hence, asymptotic convergence toward a steady-state condition, or stability, is almost impossible to prove analytically. Notably, the relevant questions addressed in the analysis are the conditions under which ordered patterns or trajectories emerge.

Heterogeneity

The absence of any explicit coordination process in standard neoclassical models is further developed in Kirman, (1992), who focuses his analysis on an accurate critique of the representative agent (RA):

Paradoxically, the sort of macroeconomic models which claim to give a picture of economic reality (albeit a simplified picture) have almost no activity which needs such coordination. This is because typically they assume that the choices of all the diverse agents in one sector – consumers for example – can be considered as the choices of one “representative” standard utility maximizing individual whose choices coincide with the aggregate choices of the heterogeneous individuals. [Kirman, (1992), p. 117]

According to Kirman, (1992), the RA apparatus is not simply unrealistic but

rejection, has to reject specifically the RA or some other behavioural hypothesis. If the objective of the neoclassical modeller is to reach the exact microfoundation, ensured by the optimization procedure performed by the RA, the latter procedure intrinsically contradicts the inner meaning of microfoundation. All in all, a direct consequence of the RA is that the no-trade conundrum, according to which no exchange should persistently occur since in equilibrium supply and demand clear, becomes the rule and not the exception. Transactions are only mild turbulences around the equilibrium point.

Why did the standard apparatus need the RA? Results from General Equilibrium scholars, like the Sonnenschein-Mantel-Debreu theorems, show that the only three properties that the aggregate excess demand function inherits from the individual excess demand functions are continuity, homogeneity of degree zero and satisfaction of Walras' Law. Other properties, like transitivity of preferences, whose violation could lead to multiplicity of equilibria, are not guaranteed by the aggregation process, which, to be performed, requires homothetic utility functions, e.g. satisfaction of the Gorman form. The only viable solution explored in neoclassical models to overcome the aggregation problem has been to reduce the dimensionality from n potentially heterogeneous individuals (at least two in the original General Equilibrium program) to one. In this latter case the excess demand function will obviously satisfy all the regularity conditions required to get uniqueness and stability of the equilibrium.
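For reference, the Gorman form mentioned above can be sketched as follows. The notation (households $h$, price vector $p$, income $m^h$, functions $a^h$ and $b$) is introduced here for illustration only and is not taken from the thesis. Under the Gorman polar form, indirect utility is affine in income with a slope common to all households, so that, by Roy's identity, individual demands are linear in income with identical slopes and aggregate demand depends on the total, not on the distribution, of income:

```latex
\begin{align}
  v^h(p, m^h) &= a^h(p) + b(p)\, m^h, \\
  x_i^h(p, m^h) &= -\frac{\partial v^h / \partial p_i}{\partial v^h / \partial m^h}
               = -\frac{1}{b(p)} \frac{\partial a^h(p)}{\partial p_i}
                 - \frac{1}{b(p)} \frac{\partial b(p)}{\partial p_i}\, m^h, \\
  X_i(p) = \sum_h x_i^h(p, m^h) &= -\frac{1}{b(p)} \sum_h \frac{\partial a^h(p)}{\partial p_i}
                 - \frac{1}{b(p)} \frac{\partial b(p)}{\partial p_i} \sum_h m^h .
\end{align}
```

Only in this restrictive case can the aggregate be treated as if it were generated by a single consumer endowed with total income.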

aggregation among heterogeneous agents is sufficient to have a well behaved aggregate demand function:

Once one allows for different micro-behavior and for the fact that different agents face differing and independent micro-variables then [...] complex aggregate dynamics may arise from simple, [...], individual behavior. [Kirman, (1992) p. 127]

Becker, (1962) already emphasized in the early sixties how the law of demand is the result of the change in opportunities, due to the effects that price changes exert on the budget constraint, and is not related to any decision rule: the negative slope of demand is independent of the rational or irrational content of the decision rules. A similar theoretical discussion is in Grandmont, (1991), who provides evidence that, under some specific conditions, heterogeneous preferences when aggregated lead to the property of “gross-substitutability”, implying a unique and stable equilibrium. The result in Grandmont, (1991) shows the relative unimportance of the maximization of a utility function, and conversely the relevance of the simple satisfaction of the budget constraint to obtain well-behaved aggregate demand functions. A very enticing and complementary exposition is in Shaikh, (2012). Finally, the interaction among heterogeneous agents, by itself, allows for the emergence of endogenous cycles and fluctuations, without the need for external shocks hitting the system.
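The budget-constraint argument attributed to Becker above can be illustrated with a minimal sketch. It assumes, purely for illustration, two goods, agents with heterogeneous incomes, and a "decision rule" that is nothing more than a uniform random draw along the budget line; the function names and parameter values are hypothetical and do not come from the thesis.

```python
import random

def random_bundle(income, price_x, price_y, rng):
    """Pick a bundle at random on the budget line (no utility maximisation)."""
    share_x = rng.random()                      # fraction of income spent on good x
    x = share_x * income / price_x
    y = (1.0 - share_x) * income / price_y
    return x, y

def mean_demand_x(price_x, n_agents=5000, price_y=1.0, seed=0):
    """Average demand for good x across agents who choose at random."""
    rng = random.Random(seed)                   # same draws for every price level
    total = 0.0
    for _ in range(n_agents):
        income = rng.uniform(50.0, 150.0)       # heterogeneous incomes (assumed range)
        x, _ = random_bundle(income, price_x, price_y, rng)
        total += x
    return total / n_agents

prices = [0.5, 1.0, 2.0, 4.0]
demand = [mean_demand_x(p) for p in prices]
for p, d in zip(prices, demand):
    print(f"price of x = {p:4.1f}  ->  mean demand for x = {d:8.2f}")
```

Average demand falls as the price rises even though no agent optimises anything: the negative slope comes entirely from the shrinking opportunity set.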

Besides the theoretical fallacy of the RA, a large body of empirical evidence militates against it. In fact, overwhelming and growing evidence from longitudinal data, both at firm/establishment and worker level, shows the emergence of distributions which persist over time and at different levels of aggregation (sector-country). The very existence of widespread empirical distributions in measures of firm performance, like productivity, sales and output growth rates, and in worker earnings clearly points to the persistent heterogeneity characterizing the real world.

Unfortunately, and contrary to what is predicted by the neoclassical approach, there is much more evidence of diverging paths, at all levels of aggregation, with

Innovation and Growth

The process of economic change has been at the core of the “Grand Evolutionary Project” since the seminal book by Nelson and Winter, (1982), which devoted to it an entire section titled “Growth Theory”. The early neoclassical growth models identified in the so-called Solow residual or, using the felicitous expression by Abramovitz, (1956), the measure of our ignorance, the inexplicable source of economic growth. Rather surprisingly, the early scholars of growth theory decided to shelve the enquiry exactly on the source of economic growth, treating “technological change as a residual neutrino”:

The neutrino is a famous example in physics of a labelling of an error term that proved fruitful. Physicists ultimately found neutrinos, and the properties they turned out to have were consistent with preservation of the basic theory as amended by acknowledgement of the existence of neutrinos. A major portion of the research by economists on processes of economic growth since the late 1950s has been concerned with more accurately identifying and measuring the residual called technical change, and better specifying how phenomena related to technical advance fit into growth theory more generally. The issue in question is the success of this work. [Nelson and Winter, (1982), p. 198]

After the early models of exogenous technical change, at the beginning of the nineties a series of studies gave origin to the so-called endogenous growth theory. In this respect, there was clear evidence that inherently Schumpeterian topics had become of interest to the mainstream. Nonetheless, many of the endogenous growth models were not able to account for some elements typically characterizing the processes of innovation and growth.

Following one of the early evolutionary models of technological change (Silverberg and Verspagen, 1994), some requirements are needed to model endogenous growth, namely: (i) coexistence of many technologies and techniques of production at any given time period; (ii) no unique production function (coexistence of multiple techniques of production even at the frontier); (iii) dependence of the aggregate rate of technical change on the diffusion of innovation, not simply on the instantaneous innovation rate; (iv) if the innovative effort is represented by a stochastic draw from probability distributions, the parameters of the distributions have to be unknown to firms.

In fact, the listed requirements appear appropriate to map a series of attributes of technology: search and discovery of innovation are processes intrinsically characterised by Knightian uncertainty, wherein there is no exact correspondence between ex-ante effort and ex-post performance; there is no convergence toward an optimal capital/labour ratio which defines the unique technique adopted by the users, but conversely constellations of techniques of production are employed by different firms; nonetheless, there are technological trajectories which lead to the adoption of a dominant design/artifact.

Then, in the evolutionary perspective it is exactly the contribution of knowledge, transferred into innovative activity, which is “responsible” for the neutrino. And innovation is a very special commodity which is not characterised by scarcity and by diminishing returns, overturning the old perception of economics as the science of the allocation of scarce resources. If knowledge and innovation become the main determinants of economic growth, then abundance and dynamic increasing returns are the rule and not the exception:

If anything, innovation and knowledge accumulation are precisely the domains where the dismal principles of scarcity and conservation are massively violated: one can systematically get more out of less,

nonconvexities because of the fixed cost of acquiring it. Then, the benefits of information increase with the scale of its production. But given nonconvexities, the existence of equilibrium is not guaranteed (see Rothschild and Stiglitz, 1976 and its manual-level acknowledgement in Mas-Colell et al., 1995). In fact, under nonconvex technologies, the supply curve is not equivalent to the marginal cost function and the intersection with the demand curve is not ensured. Arrow, (1996) clearly states how the introduction of information in the production possibility set induces increasing returns:

[c]ompetitive equilibrium is viable only if production possibilities are convex sets, that is do not display increasing return [...] with information constant returns are impossible. [...] The same information [can be] used regardless of the scale of production. Hence there is an extreme form of increasing returns. [Arrow, (1996), pp. 647-648]

In fact, the existence of conventional General Equilibria is undermined in the presence of innovation even neglecting the increasing-returns properties of innovation itself: see Winter, (1971).

History

History and path dependency have characterized the debate since the seminal paper by David, (1985). In order to understand the process of evolution of a given technology, it is fundamental to understand its historical origin. That contribution emphasises the importance of the sequence of past events to interpret economics as an evolutionary process:

Cicero demands of historians, first, that we tell true stories. I intend fully to perform my duty on this occasion, by giving you a homely piece of narrative economic history in which “one damn thing follows another”. The main point of the story will become plain enough: it is sometimes not possible to uncover the logic (or illogic) of the world around us except by understanding how it got that way. A path-dependent sequence of economic changes is one of which important influences upon the eventual outcome can be exerted by temporally remote events, including happenings dominated by chance elements rather than systematic forces. Stochastic processes like that do not converge automatically to a fixed-point distribution of outcomes, and are called non-ergodic. In such circumstances “historical accidents” can neither be ignored, nor neatly quarantined for the purpose of economic analysis; the dynamic process itself takes on an essentially historical character. [David, (1985), p. 332]

Exactly by means of a historical perspective, Abramovitz, (1993) is able to identify how technological progress was mainly tangible capital-using biased in the nineteenth century, and conversely intangible capital-using biased in the twentieth century, debunking the idea that capital accumulation is the major source of economic growth. The author considers the nature of the technological progress that occurred in the twentieth century a violation of the premise that technological advance is only a stable function of the supply of saving and the cost of finance. If this premise does not hold, the growth accounting strategy of isolating the contribution of each component of the aggregate production function, in order to estimate total factor productivity (TFP), is a misleading approach: there is an interdependency among the evolution of science and technology and the political and economic institutions and modes of organization affecting the path of new discoveries and so of technological change, which is very little affected by the evolution of the prices of the factors of production. What cannot be understood by means of changes in prices and factor shares has to be understood by means of history:

ation of the joint and interdependent action of the main sources of growth. I expect such historical studies to make major contributions in the future. [Abramovitz, (1993), pp. 237-238]

Neoclassical economics attributed some sort of relevance to history with the birth of the New Economic History. New Economic History has been described as the reunification of neoclassical economics and economic history, according to Fogel, (1965). This branch of economic history, commonly defined as Cliometrics, is the attempt to reconcile an econometric dynamic framework with economic history or, put more simply, to estimate long-run production functions whose factors should also include historical determinants. Producing quantitative assessments of sociological and institutional phenomena became the focus of cliometricians. But the purported rigour was obtained at the cost of vindicating instrumentalism against realism, using hypothetico-deductive methods with little logical or methodological foundation.

Freeman and Louçã, (2001) provide an enticing discussion on cliometrics. A series of unsatisfying outputs of this research stream culminated with the description of the slavery condition as rational optimizing behaviour for both parties of the contract: the owners were praised for the superiority of their managerial capabilities and the slaves for the superior quality of black labour. Cliometrics, apart from abruptly importing the homo economicus, brought into the discourse the idea that randomness was an adequate attribute of historical events: first, some historical events can be interpreted as random, exogenous events altering the regular course; second, historical time series can be considered equivalent to a sample from a universe of alternative realizations of the same stochastic process.

Landes, (1994) emphasises the inappropriateness of this research agenda for providing exhaustive explanations of historical phenomena:

Here let me state a golden rule of historical analysis: big processes call for big causes: this is what economists call a prior. I am convinced that the very complexity of large systematic changes requires complex explanation: multiple causes of shifting relative importance, combinative dependency,...temporal dependency. [Landes, (1994), p. 653]

Institutions

In the absence of rational, profit-seeking, maximizing agents, what shapes the behaviour of individuals and organizations? How are the relatively ordered patterns mentioned above able to emerge?

The relation between individual behaviour, market structures, and firm and worker organizations, whose coordination and evolution lead to macroeconomic patterns, has been studied by the Regulation School, which identified the so-called Regimes of Regulation (see Boyer, 1988b).

Three main domains of the Regimes of Regulation are especially relevant to study capitalist dynamics, namely: (i) the accumulation regime, which entails the relation among technological progress, income distribution and aggregate demand; (ii) the institutional forms, which encompass the wage-labour nexus and the nature of the State; (iii) the mode of regulation, which is the mechanism by which the former two categories evolve, develop and interact. The modes of regulation capture the specificities of the disequilibrium processes of adjustment in the accumulation patterns and in the coordination among different types of actors. The dynamics entails phases of “smooth” coordination, mismatches, cycles and crises.

The major question is, then, the coherence and compatibility of a given technical system with a pattern of accumulation, itself defined

tively consistent entailing some distinctive features: (i) decentralised decisions are taken without requiring each individual or organization to understand the whole system; (ii) it shapes the accumulation regime; (iii) it reproduces a system of social relationships by means of given institutional forms.

The role of industrial relations, the mechanism of wage determination, the relative power of conflicting classes, and industrial and labour policies become important elements in determining the national systems of innovation (see Nelson, 1993) and hence the trajectory of the techno-economic paradigms. As a consequence, non-market institutions become particularly relevant to study economic dynamics:

More generally, there is a lot more to the institutional structure of modern economies than for-profit firms and markets. Firms and markets do play a role in almost all arenas of economic activity, but in most they share the stage with other institutions. In many sectors firms and markets clearly are the dominant institutions, but in some they play a subsidiary role. National security, education, criminal justice and policing are good examples. Some sectors, like medical care, are extremely “mixed”, and one cannot understand the activity going on in them, or the ways in which their structure, ways of doing things, and performance have evolved, if one pays attention only to

Methodology: why computer simulation

Given the description of the economic dynamics we propose, the choice of the right instruments, suitable to study the economic process as it is, is of paramount importance. To overcome the straitjacket implied by the standard tools, we explore the use of a set of instruments more appropriate to closely represent and model the real world.

It would appear, therefore, that a model of process is an essential component in any positive theory of decision making that purports to describe the real world, and that the neoclassical ambition of avoiding the necessity for such a model is unrealizable. [Simon, (1979), p. 507]

The choice of computer simulation models is not by chance: it is exactly the tool that allows one to describe economic agents behaving according to procedural routines, which are executed in the form of algorithms, wherein dynamics and time-to-build properties can be explicitly inserted and described, and heterogeneity is explicitly modelled. Simulations, in this respect, are not simply used to mimic but to test hypotheses.

By converting empirical evidence about a decision-making process into a computer program, a path is opened both for testing the adequacy of the program mechanisms for explaining the data, and for discovering the key features of the program that account, qualitatively, for the interesting and important characteristics of its behavior. [Simon, (1979), p. 508]

Many criticisms have been addressed to computer simulation models or, as they are nowadays labelled, Agent Based Models. In particular:

1. lack of rigour in the specification of the behavioural equations
2. lack of parameter estimation

issue of consistency is solved by means of a deductive approach.

In computer simulations, a model is made not only of behavioural equations, the rules, but also of algorithms and a timeline of events, which defines the chronological order according to which the procedures are undertaken. To test the internal consistency of such a model, we ask the model results to have external consistency. What does this mean? The results have to be coherent with, and as close as possible to, empirical data. Only by abandoning the deductive-normative approach of the maximization endeavour is the modeller able to test the validity of behavioural rules and algorithms vis-à-vis empirical results. In particular, the modeller is in search of stylised facts or empirical regularities.

The first critique leaves one open to the second critique, that is the lack of parameter estimation. According to Brock, (1999), regularities or scaling laws, which are particularly widespread in economics, are:

[...] ‘unconditional objects’, i.e. they only give properties of stationary distributions, e.g. ‘invariant measures’, and hence cannot say much about the dynamics of the stochastic process which generated them. To put it another way, they have little power to discriminate across broad classes of stochastic processes. [Brock, (1999), p. 410]

But the emergence of these scaling laws, even if it does not allow one to discriminate among alternative stochastic processes all equally able to replicate the same unconditional objects, is useful to identify acceptable and non-acceptable theories:

Nevertheless, if a robust scaling law appears in data, this does restrict the acceptable class of conditional predictive distributions somewhat. Hence, I shall argue that scaling law studies can be of use in economic science provided they are handled and interpreted properly [...] scaling laws can help theory formation by provision of discipline on the shape of the ‘invariant measure’ predicted by a candidate theory. [Brock, (1999), p. 411]

A case for undertaking both the analysis of unconditional objects and the estimation of conditional objects is made throughout this seminal paper. Brock, (1999) underlines how the goal of economics should be to understand the behaviour of the system whenever hit by shocks, e.g. when policy interventions are undertaken: in the latter case, the search for unconditional objects may be unsatisfactory.

We completely share the idea of using models as laboratories for policy experiments. Instead of performing the usual methods of causality detection proposed by the toolbox of econometrics, ABMs allow one to model “artificial” policy experiments. When ABMs are implemented, the protocol that we follow is exactly that of: (i) using unconditional objects to reject theories unable to match statistical regularities, (ii) performing policy experiments which allow one to understand the reaction of the system when hit by external shocks.

The ability to match statistical regularities, and particularly large ensembles of statistical regularities which range from the micro to the macro ones, allows us to reach the internal consistency mentioned above. In accordance with Brock, (1999), we do not simply try to find unconditional objects, but to build models able to provide a sound explanation of these regularities, whenever they are matched. Not all conditional objects are equivalent litmus tests to accept/reject a theory: if replicating some regularities, such as business cycle co-movements or Pareto distributions, can be rather easy, matching empirical regularities like the magnitude of the ratio between the standard deviations of two variables is much more complex.

the strength of ABMs lies in the inherent mechanisms upon which they are built, which need to be validated and which focus the effort on the model-building phase. Values of the parameters are set as close as possible to empirical data, whenever available, but without any direct estimation of them.

On the contrary, neoclassical macroeconomic models like DSGE, being linearised in the neighbourhood of the equilibrium values, tend to exhibit a low ability to generate outputs which are different from the inputs themselves. To overcome this drawback, neoclassical models try to fit the empirics using parameter values estimated from real data, or minimizing the distance between the series produced by the model and the empirical ones. Following a calibration procedure, the modeller does not perform any testing on the inherent model structure, but simply imputes estimated values of the parameters.

In a nutshell, while standard macroeconomic models focus on parameter estimation, ABMs focus on hypothesis testing for the theory. Whenever a model is able to robustly replicate a number of stylised facts, without imposing specific values for the parameters, the mechanisms inside the model itself are reliable explanations of the facts observed in the empirics.

One open challenge is the comparability between high-dimensional ABMs and reduced aggregate laws of motion: is there any equivalent transformation of the former into the latter? Can we get rid of high dimensionality, both in terms of heterogeneity and in terms of variables and parameter space, and still build models with successful explanatory ability?

statistical mechanics like master equations and Wiener processes. Our main concern with respect to the so-called “econophysics” approach rests in the choice of giving priority not to the economic questions but to the suitable and analytically tractable stochastic models allowing one to perform aggregation.

Ijiri and Simon, (1977) were among the first to use a master equation to account for the skewed size distribution of firms, but they were very explicit in saying that models are useful only when they augment our knowledge of reality and are able to provide a sound economic interpretation behind their analytical formulation. Unfortunately, it seems that many models in the “econophysics” stream of research prefer manageable and easy-to-solve stochastic processes to stochastic processes informed by procedural behaviours, losing any flavour of realism.

Pushing the Frontier

Given the main pillars of evolutionary economics, how did we manage to produce some steps ahead with respect to the state of the art?

Primarily, the thesis tries to provide a comprehensive analysis of various economic processes, which range from industrial dynamics to labour market organizations, to macroeconomic regimes of growth. Together with the modelling of the latter mechanisms, the attempt is also to provide new methods to develop robust and profound model building and exploration. Finally, the two previous objectives are fulfilled by means of low and medium scale agent based models and non-linear system dynamics. All the analysis is undertaken by means of a rich ensemble of statistical tools which allow us to compare theory with its empirical counterpart.

The mechanisms of learning, selection, entry and exit, which have been envisaged by an overwhelming theoretical and empirical tradition as the cores of both firms organization and firms interrelation in the market, are studied in the second Chapter (2). The novelty of this Chapter consists in offering a simple, concise and comprehensible multi-firm evolutionary model which is able to account for all the mechanisms mentioned above. Particularly, it advances with respect to the literature because it proposes potential generative mechanisms,

proach allows one to dig inside the structure of the model and to replace the one-at-a-time parameter exploration with a simultaneous exploration of parameters over intervals. This Chapter offers a novel approach that can be used as a protocol for sensitivity analysis in ABMs. The methodology used also replies to the critique of lack of rigour and robustness. By means of the global sensitivity analysis exploration, we obtain a map, a guideline to improve the knowledge of our model.

Encompassing the role of demand and understanding the functioning of the labour market is the aim of the fourth Chapter (4). Developing a stream of research rather new for evolutionary economics, which has traditionally been interested in the study of supply conditions, with a privileged interest in technical change, firms and industrial dynamics, this Chapter explicitly focuses on the intertwining between the processes of technological change and wage determination. Building on the “Schumpeter meeting Keynes” research program, which has introduced the role and the modelling of macroeconomics inside the evolutionary paradigm, this Chapter is meant to provide a microfoundation for the emergence of Keynesian-type aggregate unemployment, that is unemployment due to lack of aggregate demand. This is accomplished by the implementation of a decentralised matching process between workers and firms, and a wage determination process which comes in two variants, the Competitive wage-labour nexus and the Fordist wage-labour nexus. Embedding two alternative institutional set-ups inside the labour markets allows us to gain insight into both the 1929 and 2008 crises, the model being able to reproduce persistent unemployment. This Chapter advances with respect to the state of the art as it proposes an evolutionary model of the labour market, intertwined with endogenous technological progress, and embedded into institutional regimes.

Deepening the role of labour market institutions, the fifth Chapter (5) explores the effect of labour market structural reforms upon both unemployment and personal and functional income inequality. The Chapter builds upon the model developed in Chapter 4 but, differently from the latter, where the institutional regimes are studied under a comparative static setting, this Chapter explores how an exogenous dynamic transition from a Fordist-type toward a Competitive-type of labour relations affects both unemployment and inequality. In contrast with the expected standard result, in which unemployment and inequality are negatively correlated, we do find the emergence of a strong positive correlation. In particular we test the effects of alternative types of firing rules, from longer-term toward short-term types of labour contracts. In a period of blossoming advocacy for labour market structural reforms as a recipe for growth, this Chapter contributes to the economic debate, providing robust evidence of the absence of any direct relation between labour cost and productivity growth: productivity growth can be enhanced only by increasing the technological opportunity space. The strength of this analysis is supported by the adoption of the global sensitivity analysis proposed in Chapter 3 to perform extensive exploration of the parametric space.

The last Chapter (6) explores, by means of a non-linear dynamical system model, the coexistence of demand-driven and profit-driven investment and the ensuing types of business cycle fluctuations emerging out of them. It shares with Chapters 4 and 5 the aim of providing insight into alternative archetypes of capitalism, but differently from the previous Chapters, in this case we keep the labour market structure constant and we modify the product market, and particularly the investment and the pricing equations. We experiment with a system dynamics approach, without heterogeneity and by means of aggregate laws of motion. We recover both orderly and disorderly patterns of limit cycle fluctuations and erratic chaotic trajectories. Finally, we do find coex-

Part II

Industrial dynamics

industrial dynamics

Introduction

Evolutionary theories of economic change identify the processes of idiosyncratic learning by individual firms and of market selection as the two main drivers of the dynamics of industries. The interplay between these two engines shapes the dynamics of market shares and entry-exit and, collectively, the productivities and size distributions, and the patterns of growth of both variables. Firm-specific learning (what in the empirical literature is sometimes broadly called the within effect) stands for various processes of idiosyncratic innovation, imitation, and changes in techniques of production. Selection (what is usually denominated the between effect) is the outcome of processes of market interaction where more “competitive” firms, on whatever criteria, gain market share at the expense of less competitive ones, some firms die, and others enter. The ensuing industrial dynamics presents some remarkable and quite robust statistical properties, “stylised facts”, which tend to hold across industries and countries, levels of aggregation and time periods (for critical surveys, see Dosi, 2007, Doms and Bartelsman, 2000, and Syverson, 2011).

In particular, such stylised facts include:

• persistent heterogeneity in productivity and all other performance variables;

• persistent market turbulence, due to change in market shares and entry-exit processes;

• skewed size distributions;

• fat-tailed distribution of growth rates;

• scaling of the growth-variance relationship.
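What "fat-tailed" means in practice can be illustrated with a small numerical sketch. It compares the excess kurtosis of Gaussian draws with that of Laplace draws; the Laplace distribution is used here only as a convenient example of a fat-tailed law, and the sample size, seed and function names are assumptions made for illustration rather than anything estimated in the thesis.

```python
import random

def excess_kurtosis(xs):
    """Sample excess kurtosis: about 0 for a Gaussian, positive for fat tails."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m4 / (m2 ** 2) - 3.0

def laplace_sample(rng, scale=1.0):
    """The difference of two i.i.d. exponential draws is Laplace distributed."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))

rng = random.Random(42)
n = 50000
gaussian_growth = [rng.gauss(0.0, 1.0) for _ in range(n)]
laplace_growth = [laplace_sample(rng) for _ in range(n)]

k_gauss = excess_kurtosis(gaussian_growth)
k_laplace = excess_kurtosis(laplace_growth)
print(f"excess kurtosis, Gaussian draws: {k_gauss:.2f}")
print(f"excess kurtosis, Laplace draws:  {k_laplace:.2f}")
```

A clearly positive excess kurtosis signals that extreme growth events are far more frequent than a Gaussian benchmark would allow.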

Different theoretical perspectives address the interpretation of one or more of such empirical regularities. One stream of analysis, which could go under the heading of equilibrium evolution, tries to interpret size dynamics in terms of passive (Jovanovic, 1982) or active learning (Ericson and Pakes, 1995) by technically heterogeneous but “rational” firms. Another stream, from the pioneering work by Ijiri and Simon, (1977) all the way to Bottazzi and Secchi, (2006a), studies the joint outcome of both learning and selection mechanisms in terms of the ensuing exploitation of new business opportunities. A third stream, including several contributions by Metcalfe (see among others Metcalfe, 1998), focuses on the competition/selection side, often represented by means of replicator dynamics whereby market shares vary as a function of the relative competitiveness or “fitness” of the different firms. Finally, many evolutionary models try to unpack the two drivers of evolution, distinguishing between some idiosyncratic processes of change in the techniques of production, on the one hand, and the dynamics of differential growth driven either by differential profitabilities and the ensuing rates of investment (such as in Nelson and Winter, 1982), or by an explicit replicator dynamics (as in Silverberg et al., 1988 and in Dosi et al., 1995),

systematic departures from Gaussian stochastic processes. It is an intuition already explored by Bottazzi and Secchi, (2006a) with respect to the exploitation of “business opportunities”, which we shall expand also in relation to competition processes. This is what Bottazzi, (2014) calls the bosonic nature of firm growth, in analogy with the correlating property of elementary particles, indeed the bosons. Systematic correlations induce non-fading (possibly amplifying) effects of intrinsic differences, or even different degrees of “luck”, across firms, both “intra-period” (however a “period” is defined) and over time.

Bottazzi and Secchi, (2006a) show how such intra-period correlations generically underlie fat-tailed distributions of firm growth rates. We push the analysis further, showing that just competition-driven replicators yield similar fat-tailedness properties over time. Moreover, by unpacking the learning and selection parts of the process, we attempt to distinguish what is generic across all processes and what is specific to particular learning-competition regimes. Finally, our model of industrial dynamics characterised by cumulative learning and competitive selection is able to account for a few other salient empirical features of industrial change.
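A replicator of the kind referred to above can be sketched in a few lines. The functional form used here, in which each firm's market share grows in proportion to its competitiveness relative to the share-weighted industry average, is one common textbook formulation and is an assumption of this sketch; the thesis's own specification, parameter names and values may differ.

```python
def replicator_step(shares, fitness, intensity=1.0):
    """One discrete replicator update.

    s_i(t+1) = s_i(t) * (1 + intensity * (E_i / E_bar - 1)),
    where E_bar is the share-weighted average competitiveness.
    Shares keep summing to one by construction.
    """
    avg_fitness = sum(s * e for s, e in zip(shares, fitness))
    return [s * (1.0 + intensity * (e / avg_fitness - 1.0))
            for s, e in zip(shares, fitness)]

# Four hypothetical firms with fixed competitiveness levels (no learning here).
fitness = [1.0, 1.2, 0.8, 1.5]
shares = [0.25, 0.25, 0.25, 0.25]

history = [shares]
for _ in range(20):
    shares = replicator_step(shares, fitness)
    history.append(shares)

for firm, (e, s) in enumerate(zip(fitness, shares)):
    print(f"firm {firm}: competitiveness {e:.1f}, share after 20 periods {s:.4f}")
```

With competitiveness held fixed, selection alone concentrates the market on the fittest firm; adding firm-specific learning would make the fitness values themselves move over time.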

Section 2 briefly summarises the empirical regularities and Section 3 discusses the main theoretical models aimed at explaining some of them together with some important methodological caveats. Section 4 presents the model and Section 5 analyses the results.

Empirical stylised facts: productivity, size and growth

During the last few decades, enabled by the availability of longitudinal micro data, an increasing number of studies have identified a rich ensemble of “stylised facts” related to productivities, firm sizes and firm growth rate distributions. Let us consider some of them, germane to the results of the model which follows.

Productivity distribution and growth

As extensively discussed in Doms and Bartelsman (2000), Syverson (2011), Dosi (2007) and Foster et al. (2008), among many others, productivity dispersion, at all levels of disaggregation, is a striking and very robust phenomenon. Moreover, such heterogeneity across firms is persistent over time (cf. Bartelsman and Dhrymes, 1998, Dosi and Grazzi, 2006 and Bottazzi et al., 2008), with autocorrelation coefficients in the range (0.8, 1). An illustration is provided in Figure 2.1 for one 2-digit Italian sector and two 3-digit sectors thereof. The distribution and its support are quite stable over time and so is the “pecking order” across firms, as suggested by the high autocorrelation coefficients: see Figure 2.2. These findings, which hold under parametric and non-parametric analyses, empirically discard any idea of firms’ revealed production process as the outcome of an exercise of optimisation over a commonly shared production possibility set – which, under common relative prices, ought to yield quite similar input/output combinations. Rather, asymmetries are impressive (notice the wide support of the distribution, which goes from 1 to 5 in log terms), which tells of a history of both firm-specific learning patterns and of co-existence in the market of low and high productivity firms, with no trace of convergence (see Dosi et al., 2012). Asymmetries are even more pronounced in emerging economies (with only some reduction along the process of development): on China, see Yu et al. (2015). The survival of dramatically less efficient firms hints at a structurally imperfect mechanism of market selection, which demands a theoretical interpretation. All this evidence relates to asymmetries in labour productivities but also to firm-level TFPs, notwithstanding the shakiness of the latter notion (more in Dosi et al., 2013b, and Yu et al., 2015).

Figure 2.1: Empirical distribution of labour productivity. Source: Dosi et al. (2013b).

Figure 2.2: AR(1) coefficients for Labour Productivity in levels (normalised with industry means) and first differences, Italy, Istat Micro.1 Dataset. Source: Dosi and Grazzi (2006).
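The persistence of productivity levels discussed above can be illustrated with a minimal sketch on synthetic data. It is not the estimation procedure behind Figure 2.2: the panel size, the true coefficient `rho = 0.9`, the shock volatility and the pooled least-squares estimator are all assumptions chosen for illustration. The sketch simulates log productivities following a stationary AR(1) process, normalises them by the cross-sectional mean of each period, and estimates the autocorrelation coefficient in levels and in first differences.

```python
import numpy as np

def simulate_panel(n_firms, n_periods, rho, sigma, rng):
    """Simulate a firms-by-periods panel of log productivities following an AR(1)."""
    y = np.empty((n_firms, n_periods))
    # Draw initial values from the stationary distribution of the process.
    y[:, 0] = rng.normal(0.0, sigma / np.sqrt(1.0 - rho**2), size=n_firms)
    for t in range(1, n_periods):
        y[:, t] = rho * y[:, t - 1] + rng.normal(0.0, sigma, size=n_firms)
    return y

def pooled_ar1(y):
    """Pooled least-squares AR(1) coefficient after removing period means."""
    y = y - y.mean(axis=0, keepdims=True)  # normalise by the cross-sectional mean
    lagged = y[:, :-1].ravel()
    current = y[:, 1:].ravel()
    return float(lagged @ current / (lagged @ lagged))

rng = np.random.default_rng(0)
panel = simulate_panel(n_firms=500, n_periods=20, rho=0.9, sigma=0.2, rng=rng)

rho_levels = pooled_ar1(panel)                  # close to the assumed 0.9
rho_diffs = pooled_ar1(np.diff(panel, axis=1))  # close to zero
print(f"AR(1) in levels: {rho_levels:.3f}, in first differences: {rho_diffs:.3f}")
```

Under these assumptions the coefficient in levels is high while the one in first differences is close to zero, which is the qualitative contrast between levels and differences that Figure 2.2 refers to.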

A less explored phenomenon related to the dynamics of productivity is the double exponential nature of its growth rate distributions. Extensive evidence is provided in Bottazzi et al. (2005) and Dosi et al. (2012): see Figure 2.3 for an illustration. The double exponential nature of productivity growth rates reveals both an underlying multiplicative process that determines efficiency changes and, together with it, processes of idiosyncratic learning characterised by discrete, relatively frequent “big” events.
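A double exponential (Laplace) density has the form f(g) = exp(-|g - mu| / b) / (2b), so its logarithm is linear in |g - mu|, which gives the “tent” shape on a log scale. The following minimal sketch, on synthetic data only, compares the maximised log-likelihoods of a Laplace and a Gaussian fit. The sample size and the parameters `loc=0.0`, `scale=0.1` are assumptions made for illustration and are not taken from the cited studies.

```python
import numpy as np

def laplace_fit(x):
    """Laplace maximum likelihood: location = median, scale = mean absolute deviation."""
    mu = np.median(x)
    b = np.mean(np.abs(x - mu))
    # At the ML estimates, sum(|x - mu|) / b equals the sample size.
    loglik = -x.size * (np.log(2.0 * b) + 1.0)
    return loglik, mu, b

def gaussian_loglik(x):
    """Maximised Gaussian log-likelihood (ML variance, i.e. divided by n)."""
    sigma2 = np.var(x)
    return -0.5 * x.size * (np.log(2.0 * np.pi * sigma2) + 1.0)

rng = np.random.default_rng(1)
growth = rng.laplace(loc=0.0, scale=0.1, size=100_000)  # synthetic growth rates

ll_laplace, mu_hat, b_hat = laplace_fit(growth)
ll_gauss = gaussian_loglik(growth)
print(f"Laplace scale estimate: {b_hat:.4f}")
print(f"log-likelihood gain of Laplace over Gaussian: {ll_laplace - ll_gauss:.1f}")
```

On data that are truly double exponential, the Laplace fit attains the higher log-likelihood. On real growth rates, such a comparison is only a first diagnostic of departures from Gaussianity.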

Size distribution

Figure 2.3: Tent-shaped productivity growth rate. Italy, Istat Micro.3 Dataset. Source: Dosi et al. (2012).

Firm size distributions are skewed. This is an extremely robust property which, again, holds across sectors, countries and levels of aggregation. But how skewed is “skewed”?

The power-law nature (following Pareto or Zipf law, according to the slope of the straight line in a log-log plot)¹ of the firm size distribution has been investigated by many authors² since the pioneering work by Simon and Bonini (1958). This is not the place to discuss the possible generating mechanism of such a distribution (an insightful discussion is in Brock, 1999). Here, just notice that on empirical grounds, size distributions across manufacturing sub-sectors differ a lot in terms of shape, fatness of the tails and even modality (Bottazzi et al., 2007 and Dosi et al., 2008). Plausibly, technological factors, the different degree of cumulativeness in the process of innovation, the predominance of process vs. product innovation, and the features of market competition might strongly affect (finely defined) sector-specific size distributions (Marsili, 2005). Indeed, it might well be that the findings on the Zipf (Pareto) law distributions are a mere effect of aggregation, as already conjectured in Dosi et al. (1995). Hence, what should be retained here as the “universal” pattern is the skewness of the distributions rather than their precise shapes (see Figure 2.4 for a quite frequent shape of the upper tail).

¹ See Newman (2005) for a succinct overview.
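The “slope of the straight line in a log-log plot” can be made concrete with a minimal sketch on synthetic data. Firm sizes are drawn from a classical Pareto distribution with an assumed tail exponent `alpha = 1.0` (the Zipf case) and an assumed minimum size of 1. The slope is then estimated by ordinary least squares on the log rank against log size relation. Both the data and the choice of a plain OLS fit are illustrative assumptions, not the method used in the cited empirical studies.

```python
import numpy as np

def rank_size_slope(sizes):
    """OLS slope of log(rank) on log(size); about -alpha for Pareto-distributed sizes."""
    ordered = np.sort(sizes)[::-1]             # largest firm gets rank 1
    ranks = np.arange(1, ordered.size + 1)
    slope, _intercept = np.polyfit(np.log(ordered), np.log(ranks), 1)
    return float(slope)

rng = np.random.default_rng(2)
alpha = 1.0                                     # assumed tail exponent (Zipf case)
# Generator.pareto draws from a Lomax distribution; adding 1 gives a
# classical Pareto distribution with minimum size 1.
sizes = 1.0 + rng.pareto(alpha, size=50_000)

slope = rank_size_slope(sizes)
print(f"estimated log-log slope: {slope:.3f} (assumed value: {-alpha})")
```

On real, finely disaggregated sectoral data the log-log relation need not be a straight line at all, which is consistent with the point made above about aggregation.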

Figure 2.4: Skewness in the size distribution. Elaboration on Fortune 500. Source: Dosi et al. (2008).

             SALES     VALUE ADDED
Mean         0.130     0.161
Std. Dev.    0.048     0.045
Min.         0.0544    0.082
Max.         0.559     0.601

Table 2.1: Turbulence index. Italy, Istat Micro.3 Dataset. Source: Grazzi et al. (2013).

Market turbulence

Underneath the foregoing invariances, however, there is a remarkable turbulence involving changes in market shares, entry and exit (cf. the discussions in Baldwin and Rafiquzzaman, 1995 and Doms and Bartelsman, 2000). A good deal of such turbulence is due to “churning” – with 20%–40% of entrants dying in the first two years and only 40%–50% surviving beyond the seventh year in a given cohort.

An index that synthesises market turbulence is shown in Table 2.1, which presents some summary statistics on Italian micro data, in terms of shares of both sales and value added. The index I reads as the sum of the absolute values of market share variations

I =

∑

i