Virtual Humans: A framework for the embodiment in Avatars and social perception of Virtual Agents in Immersive Virtual Environments

PhD Course

Emerging Digital Technologies

Academic Year

2017/2018


Author

Camilla Agnese Tanca

Supervisor

Franco Tecchia

Tutor

ABSTRACT

Immersive Virtual Environments (IVEs) are increasingly pervasive and are used not only in the entertainment industry but also in many areas of domestic and public everyday life, such as medical studies and treatments, training, learning techniques, and cultural heritage. The work presented here aims to improve the naturalness of Virtual Humans in IVEs, taking a step toward overcoming the Uncanny Valley. To reach this objective, research is developed in two main areas.

The first relies on well-established studies on universal human emotions (such as Ekman's), which are exploited to create emotional virtual agents and virtual environments. This could serve as a basis for creating natural and ecological environments in which to carry out experiments on human behaviour. Several studies in virtual environments are presented, with different types of participants and purposes: evaluation of the emotional content, or evaluation of the impact of VR on training.

The second area explores embodiment and the induction of the user's body ownership over a virtual human body. This could lead not only to more natural interactions in IVEs, but could also serve as a basis for meaningful studies on human nature. Two studies contribute to this investigation: one analyses vicarious responses in tetraplegic participants, and the other evaluates voice shifts during a singing performance in VR. In each area, the work relies on two main fields of expertise: neuroscience paradigms, which exploit various techniques for embodiment and natural interaction, and state-of-the-art graphics, animation, and VR development.

ACKNOWLEDGMENTS

To Poppy, Pierina, Alessio, and Viola. To Maria and Grandma.

To all my families.

I want to thank everyone who walked with me on this long road, at work and outside it.

I know I would forget someone important, so I will avoid writing down each and every name. I just want to thank everyone who was close to me in these years, perhaps without noticing how much they gave me and how important they are; you know who you are. What you may not know is that you are, or have been, a family to me.

In particular, I thank everyone at PERCRO, now my second home, the EventLab in Barcelona, and all my friends, both those nearby and those who stayed close from afar: the friends from Sassari, Pisa, Barcelona, and the rest of the world.

Finally, I want to thank whoever could not physically be with me along this path but will always be there, as part of what I am and what I will be.

ACKNOWLEDGMENTS (from the Italian)

To Poppy, Pierina, Alessio, and Viola. To Maria and Grandma.

To all my families.

I would like to thank everyone who walked with me along this path, both at work and outside it.

I know I will forget someone, so I will not make long lists; I simply thank all those who were close to me, perhaps without even realising how much good they did me or how important they were in facing these three years. You already know who you are. What you may not know is that you have been, for a short time or a long one, or still are, my family.

In particular, all of PERCRO, by now my second home; the EventLab in Barcelona; all the friends who were close to me, nearby and from afar; everyone from Sassari; and everyone I met in Pisa during these years and in Barcelona during the last one.

Finally, I would like to thank whoever could not be beside me during this journey but will always be there, an integral part of what I am and what I will be.

C O N T E N T S

Introduction 1

0.1 Aims and questions . . . 4

0.2 Contributions . . . 6

0.3 Thesis structure . . . 7

1 Virtual Reality and presence 9 1.1 Virtual Reality rise . . . 9

1.2 The impact of presence component on VR experience . . . . 17

1.3 The role of virtual humans in a virtual experience . . . 19

2 Virtual Humans: impact and challenges 21 2.1 The development of Virtual Humans . . . 21

2.2 Motion capture systems . . . 27

2.2.1 Body Motion Capture . . . 28

2.2.2 Face Tracking . . . 33

2.3 Overcome the uncanny valley . . . 35

2.4 Creating Realistic-Perceived Virtual Humans . . . 37

2.4.1 The impact of self body representation on users . . . 38

2.4.2 The impact of social interaction with virtual agents . 39 3 The impact of Immersive Environment context on users 41 3.1 Emotional content and social narratives . . . 41

3.1.1 Emotional narrative VEs . . . 43

3.1.2 Technological Setup . . . 44

3.1.3 Evaluation study . . . 45

3.1.4 Discussion . . . 48

3.2 VR potential to convey educative contents . . . 51

3.2.1 Virtual Safety Trainer . . . 52

3.2.2 Technological Setup . . . 53

3.2.3 Method . . . 55

Procedure . . . 56

Metrics . . . 58

Part 2: Performing procedures . . . 61

Trainee Involvement . . . 61

Sense of Presence . . . 62

3.2.5 Discussion . . . 63

4 Virtual Agents and Social Dynamics 66 4.1 Dynamic and emotional Virtual Agents in IVE . . . 66

4.1.1 Dynamic virtual characters . . . 67

Facial Stimuli . . . 68

Methods . . . 71

Results . . . 72

Discussion . . . 75

4.1.2 Facial perception of emotion in IVE . . . 76

Methods . . . 77

VE and DCV . . . 77

Procedure . . . 78

Measures . . . 80

Results and Discussion . . . 82

4.2 Enhance Virtual Agents through codified emotions . . . 84

4.2.1 Experimental paradigm . . . 84

4.2.2 Participants . . . 85

4.2.3 Procedure . . . 86

4.2.4 Results . . . 88

4.2.5 Congruence effect . . . 90

4.2.6 Discussion and Future Works . . . 93

4.3 Virtual Agents and Social Impairments . . . 95

4.3.1 State of the art . . . 99

4.3.2 Natural interaction metaphor for autism . . . 101

4.3.3 Theoretical assumption and experimental questions . 101 4.3.4 ASD patients . . . 102 4.3.5 Technological Set-up . . . 103 4.3.6 Procedure . . . 104 4.3.7 Measure . . . 106 4.3.8 Evaluation . . . 107 4.3.9 Discussion . . . 108

5 Embodiment in Realistic Avatars 110

5.1 Body Ownership affects behaviours . . . 110

5.1.1 Illusory agency . . . 111

5.1.2 Methods . . . 114

5.1.2.1 System set up . . . 114

5.1.2.2 Participants and Ethics . . . 119

5.1.2.3 Experimental Design . . . 119

5.1.3 Measures . . . 122

5.1.3.1 Questionnaires . . . 123

5.1.3.2 Audio signal analysis . . . 124

5.1.4 Results and Discussion . . . 126

5.1.4.1 Questionnaire response on agency . . . 127

5.1.4.2 Objective measures . . . 129

5.2 RHI and Vicarious Touch . . . 132

5.2.1 Vicarious Pleasant Touch . . . 133

5.2.2 Materials . . . 137

5.2.2.1 The environment . . . 138

5.2.3 The environment . . . 139

5.2.3.1 Virtual Humans . . . 139

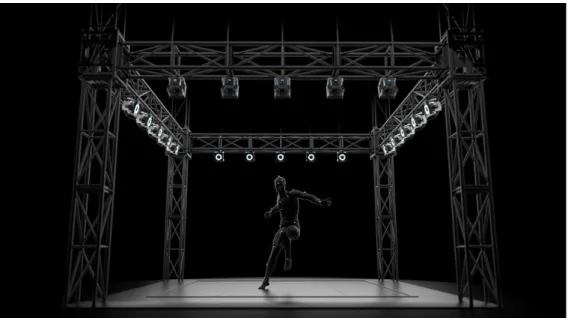

5.2.3.2 Animations Recording Procedure . . . 140

5.2.4 Methods . . . 141 5.2.4.1 Participants . . . 141 5.2.4.2 Procedure . . . 142 5.2.4.3 Physiological Measures . . . 142 5.2.4.4 Questionnaire . . . 143 5.2.5 Results . . . 143 5.2.6 Discussion . . . 145

Conclusions and future directions 149

Appendix

153

A Audio Analysis 153

B List of Publications 157

L I S T O F F I G U R E S

1 Milgram’s reality-–virtuality continuum. . . 1

2 Mori’s graph of the Uncanny Valley. . . 4

1.1 One of the first examples of hand-held stereoscope, realized by Brewster in the 50s . . . 10 1.2 Ivan Sutherland’s the Ultimate Display, also known as the

“Sword of Damocles” . . . 13 1.3 Taxonomy of the current hardware separated into input and

output devices from Anthes et al., 2016 . . . 16 1.4 on the left two user in A CAVE-like system, on the left an

HMD with an stylized body representation . . . 19

2.1 Virtual Marilyn in “Rendez-vous in Montreal” created by Nadia Magnenat-Thalmann and Daniel Thalmann in 1987. . 24 2.2 The evolution of the Lara Croft’s character in 20 years,

relative advance in graphics, and the impact on the Uncanny effect. from Dong and Jho, 2017 . . . 26 2.3 Optitrack motion capture system design with only upper

cameras. Image credit: VR Fitness Insider . . . 29 2.4 Optitrack full body system used in the experiment in Chapter

5 . . . 30 2.5 The perception neuron suit. Design images from Marino,

2014. The device was used also to record the animation in the experiment about vicarious touch (Chapter 5) . . . 32 2.6 Leap Motion Device used with XVR engine in the project

described in Carrozzino et al., 2015. The device was used also in the body ownership experiment (Chapter 5) . . . 33 2.7 Faceware software image with the faceware live SDK that

consents real time facial tracking from video and a plugin for UE4 from (Tech, 2017). The software was used to record the lipsync of the singers in the body ownership experiment (Chapter 5) . . . 34 2.8 Faceshift software used in the emotion faces recording in

2.9 Render styles of the character used in the experiment of Zibrek et al., 2018 (from left to right): Realistic, Toon CG,

Toon Shaded, Creepy and Zombie render style. . . 38

3.1 The three virtual scenario and animations visualized in the experiment . . . 45

3.2 Congruence effect between the emotion value of the ENVE and the emotion perceived. . . 47

3.3 Answers to the Reality Judgment and Presence Questionnaire. 50 3.4 Scenario containing low- and medium-voltage apparatus. . . 53

3.5 A picture showing a user interacting with the VE seen from the outside. . . 54

3.6 Scheme of the adopted experiment procedure . . . 58

3.7 The confined spaces scenarios . . . 59

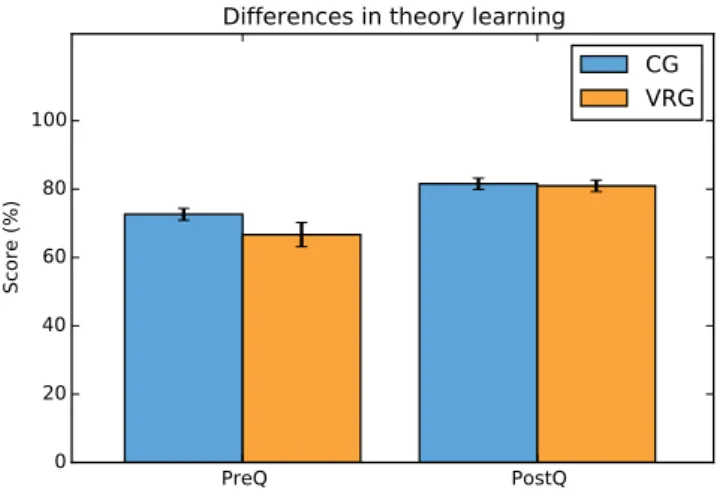

3.8 Theory learning results . . . 60

3.9 Comparison of theory learning . . . 61

3.10 Comparison of procedure learning . . . 62

3.11 Trainee’s involvement between course . . . 63

3.12 Trainee’s involvement between groups . . . 63

3.13 Results of the Presence Questionnaire . . . 64

4.1 The 3D character selected from the rocketbox-libraries, used in the application. . . 69

4.2 Rocketbox model in the Autodesk Maya . . . 69

4.3 Basic facial emotions of DVCs . . . 70

4.4 Experimental Setup . . . 71

4.5 Results in the mean score . . . 73

4.6 Results in the mean time . . . 74

4.7 VE during the exploration in IVE setup . . . 78

4.8 A participant during the experiment . . . 79

4.9 Box plots of emotion recognition accuracy . . . 82

4.10 Screenshot of the immersive virtual environment used in the experiment . . . 85

4.11 Box plots of emotion recognition accuracy . . . 88

4.12 Results of IPQ Questionnaire . . . 89

4.13 Illustration of Sally-Anne test . . . 97

4.14 The LEAP motion mounted on the Oculus Rift . . . 104

4.15 VC are pointing . . . 105

4.17 The user is completing the puzzle . . . 108

5.1 Schema of the RHI experiment . . . 112

5.2 Face motion capture retargeting on the male character in 3Ds max. . . 116

5.3 The environment from the mirror perspective. . . 117

5.4 The environment from the user perspective during the per-formance. . . 118

5.5 Comparison of agency . . . 128

5.6 Comparison of VoiceAgency . . . 128

5.7 Comparison of Pitch Distance . . . 130

5.8 Comparison of median F0 . . . 131

5.9 Comparison of Tempo . . . 131

5.10 A patient affected by a spinal cord injury performing the experiment. . . 138

5.11 A third view perspective of the virtual environment. . . 139

5.12 Doctor and Patient characters modified and adapted to be used in UE4. . . 140

5.13 Conceptual scheme of the animations recording procedure . 141 5.14 Baseline corrected physiological results. First panel shows the Respiratory Sinus Arrhythmia and second panel the Skin Conductance Responses in terms of number of event detected in phasic component. . . 144

5.15 Questionnaire results for each experimental condition. The first panel reports the subjective pleasantness rating. The second plots reports the perceived duration. . . 145

LIST OF TABLES

3.1 Results of Repeated Measures ANOVA
3.2 Results of Post-Hoc Test
4.1 Social Interaction Impairments in Autism Spectrum Disorder
4.2 Notable research using VR for children with autism

ACRONYMS

OST optical see-through
VST video see-through
AR augmented reality
MR mixed reality
VR virtual reality
VH virtual humans
VE virtual environment
IVE immersive virtual environment

INTRODUCTION

Virtual reality experience. The term Virtual Reality (VR), credited to Jaron Lanier, who founded Visual Programming Language (VPL) Research in the 1980s, is becoming more widely used every day. From the first experimental devices to modern systems, huge steps have been made. Today we can easily access many different systems that provide immersive 3D experiences, such as CAVE-like systems¹, Head-Mounted Displays (HMDs), either cable-based or mobile, haptic devices, controllers, vests, omnidirectional treadmills, tracking technologies, and optical scanners for gesture-based motion tracking (Anthes et al., 2016). But what defines virtual reality?

Figure 1: Milgram's reality-virtuality continuum.

Milgram and Kishino (1994) describe a continuum that goes from a natural environment to a completely virtual one (Figure 1). The different degrees refer to the increasing presence of virtual elements in a system relative to tangible ones. Systems situated at different positions along the continuum are now present in numerous fields and applications. In this work we adopt this definition and refer to VR as a system in which the user is immersed in a completely artificial Virtual Environment (VE).

It is still problematic to create a totally realistic reproduction of the five senses with which we perceive the surrounding world. At present, the most refined component seems to be the visual and auditory one, and the haptic component is gaining ground. However, we are still far from a realistic experience of virtual smell or taste.

¹ Cave Automatic Virtual Environment (CAVE) is a trademark of the University of

For that reason, many studies have examined the elements that make us perceive an experience as realistic, and which mental or graphical workarounds could be used to guarantee an experience that is perceived as realistic. Studies have also aimed at understanding how to balance the realism of the representation against the use of resources, which is extremely important to take into account, especially in view of the commercial use of VR. But along with the graphical aspects, it is extremely important to understand which features most affect our mind in letting us perceive the experience as realistic. In this search for realism, the human component is particularly important, both in terms of how humans respond to the VE and in terms of their own representation in systems where the user's own body is not visible.

VR presence and cognition. When developing a VE, the first thing to keep in mind is that the virtual environment is not supposed to be a perfect replica of the world we live in. That would be not only extremely difficult and expensive, but also a waste of the possibilities that an artificially created world could offer us. In fact, VR allows us to go beyond the boundaries of human experience, which can be extremely useful not only for recreational applications that allow us to play with imagination, but also for investigating the human mind, for example with controlled alterations of a semi-realistic scenario or with accurate reproductions of events. These two features make the technology even more interesting as an ecological environment for experimentation.

However, having fictive aspects means that there are unrealistic features that must be accepted in a virtual reality experience in order to “live” the experience. To accept the fictitious nature of the surrounding virtual environment, we must take a step similar to what Samuel Taylor Coleridge called the Suspension of Disbelief: the sacrifice of realism and logic for the sake of accepting the narrated world.

Cognitive science has investigated what makes this step easier, and which components must be taken into consideration to develop a plausible virtual environment. Particularly interesting is the concept of Sense of Presence (SoP), the subjective experience of being in one place while physically located in another (Witmer and Singer, 1998). The SoP seems to be connected mainly to the level of Involvement, a psychological state experienced as a consequence of focusing one's energy and attention on a coherent set of stimuli or meaningfully related activities and events, and of Immersion, a psychological state characterized by perceiving oneself to be enveloped by, included in, and interacting with an environment that provides a continuous stream of stimuli and experiences.

Those studies led to many further explorations, among which an extremely important one concerns the mechanisms that make us perceive our bodily self-consciousness, our sense of being a self in the world, constructed using multi-sensory information (Herbelin et al., 2016). In this line of study, the representation of the human figure in a virtual environment has become extremely important, both in terms of our physical counterpart in the virtual environment and in terms of the humans we find as part of the environment, whether they are driven by Artificial Intelligence (AI) or by other users.

Virtual humans. Our Avatar is in fact the primary interface through which we interact with the virtual world in systems that do not allow us to see our own body, such as the HMDs mentioned above. Not having our own body in view, as we do in CAVE-like systems, makes it necessary to compensate for its absence with a virtual one in order to enhance the SoP. The virtual body can be represented with different degrees of completeness and realism, and those factors will influence our experience.

The strong impact of bodily representation, and the possibility of manipulating it, allows many interesting manipulations that make it possible to study cognitive processes in depth. Those effects, already broadly studied, will be explored in this work in Chapters 4 and 5. The human presence in a VE is not limited to our own body. We can create environments in which other humans are present, either controlled by other people or moving autonomously under the control of the application. The use of Virtual Agents has made possible further exploration of the human presence in VR with regard to interactions with other people. It is hence of paramount importance to understand which are the best methods to create a realistic human presence in VR. Although computer graphics has made it possible to create photo-realistic humans with accurately tracked movements, the perfect reproduction of a human figure is still a challenge, especially for real-time applications. Mori (1970) proposed that a sense of uncanniness arises when something combines extremely realistic features with something unrealistic.

Figure 2: Mori’s graph of the Uncanny Valley.

As Figure 2 shows, Mori hypothesized a function that maps the human likeness of a robot (later adapted to Virtual Humans) to the affinity humans feel for it, stating that beyond a certain point the response to the figure abruptly shifts from empathy to revulsion as it approaches, but fails to attain, a lifelike appearance. Examples of these eerie sensations are dolls with extremely realistic eyes, or photo-realistic characters with unrealistic motions. Nowadays, even though current technologies and the study of the human mind have made huge steps towards creating realistic humans, the sense of uncanniness is still perceivable, and it is interesting to explore new ways, from graphics to psychology, to overcome these challenges.

0.1 Aims and questions

The aim of this work is to understand how to create Immersive Virtual Environments (IVEs)—Virtual, Augmented and Mixed Realities— involving Virtual Humans by providing suggestions and technological solutions. It is essential to understand how present VR technologies have evolved and how

1 (RQ1)). In particular, this work will focus on understanding the role of Virtual Humans (VH), their social component, and the users' sense of body in embodied avatars (Research Aim 1 (RA1)) (Research Question 2 (RQ2)).

In the first part of this thesis, we explore how Virtual Environments and Virtual Humans developed from the first ideas to the present, including the studies done in the cognitive sciences on the user's experience of VR in general and of VH in particular. Then, the impact of VEs is explored with two experiments investigating the power of VEs to convey specific contents (Research Aim 2 (RA2)). The first experiment aims to understand how emotional content and social narratives affect the user's virtual experience (Research Question 3 (RQ3)). The second investigates the training power of virtual environments compared to traditional learning techniques (Research Question 4 (RQ4)). Then, the role of the human figure is explored with three experiments aiming to understand the social perception of VH in IVEs (Research Aim 3 (RA3)). First, the impact of dynamism in facial character animation is explored (Research Question 5 (RQ5)); then the power of dynamic emotional content in VH is analysed (Research Question 6 (RQ6)). In the third experiment, the emotional content of IVEs and VHs is combined to understand how those factors could enhance the virtual experience (Research Question 7 (RQ7)). In the last experiment of Chapter 4, the aim is to observe how the training power of VEs and dynamic emotional Virtual Agents could be used in conditions of social impairment (Research Question 8 (RQ8)). In the last part of this thesis, the observations made in the previous experiments are tested to observe the effects of embodiment in a realistic Avatar in first-person perspective (Research Aim 4 (RA4)). The first experiment observes the effects of embodiment in a realistic Avatar that moves synchronously with the user, to test the effect of illusory agency over a singing performance (Research Question 9 (RQ9)). The second investigates the subjective and physiological responses to a vicarious virtual pleasant touch in people with body de-afferent conditions (Research Question 10 (RQ10)).

0.2 Contributions

In this section, a list of the contributions of this thesis is presented. The research aims and questions answered by each contribution are reported in brackets.

C1: An overview of the progress of virtual reality through advances in technologies and cognitive research. The present situation is defined based on the historical key points, and the current challenges are explored (Chapter 1) (RA1) (RQ1).

C2: An analysis of the two main categories of user interactions with virtual humans in IVEs. The roles of virtual humans and social interactions, and of embodiment in realistic avatars, are identified. The technical development and current challenges of character creation are explored (Chapter 2) (RA1) (RQ2).

C3: An evaluation study of Emotional Narrative Immersive Environments. A paradigm is formed for creating IVEs charged with emotional content and social narratives, capable of eliciting emotion in users (Chapter 3) (RA2) (RQ3).

C4: The development of a VR safety training simulator that could improve knowledge transfer to train workers' adherence to safety procedures (Chapter 3) (RA2) (RQ4).

C5: The creation of Dynamic Virtual Characters (DVCs) that could reproduce the vividness and intensity of facial emotions in a life-like way. For this purpose, an experiment was developed to investigate the accuracy of the correspondence between the facial expressions of DVCs and the basic emotional stimuli perceived by an observer (Chapter 4) (RA3) (RQ5).

C6: In order to ecologically investigate the social perception of DVC faces, faces were evaluated in an IVE simulating a realistic scenario. The experiment focused on understanding whether providing emotional facial stimuli in an isolated context could limit emotion perception, since real facial interactions usually take place in real-life situations (Chapter 4) (RA3) (RQ6).

C7: An investigation of the congruency effect between the facial emotions appearing on the 3D character's face and the contextual information conveyed by the IVE (Chapter 4) (RA3) (RQ7).

C8: An exploitation of DVCs and ENVE to study the effects of training in users with social impairments (Chapter 4) (RA3) (RQ8).

C9: An evaluation of the effects of embodiment through the development of an experiment that tests the effect of illusory agency over a singing performance (Chapter 5) (RA4) (RQ9).

C10: A feasibility study of a virtual environment in which a Pleasant Touch (PT) and a neutral touch are applied to an avatar, on the right forearm or on the right leg. The system makes it possible to study the reactions of persons with bodily impairments by collecting behavioural and physiological responses in an ecological way. The aim is to understand their sense of body perception (Chapter 5) (RA4) (RQ10).

0.3 Thesis structure

This thesis is organised as follows:

Chapter 1. In the first chapter an overview of the commercial rise of Virtual Reality is given. The development of software and hardware is retraced, from the first mentions of VR to the current state-of-the-art technologies, with an evaluation of the ongoing challenges. A survey of the studies on the sense of presence is included to clarify the perceptual perspective of VR and the role of the human presence in it.

Chapter 2. In the second chapter the overview focuses on the depiction of the human component in VR: the role of Avatars and Virtual Agents is explored, the history of graphical progress is analysed, and the ongoing challenges are evaluated. In particular, the most interesting motion capture devices are reviewed, along with a reflection on the techniques for overcoming some typical problems of VH representation.

Chapter 3. In the third chapter the power of VEs to convey contents is explored through two experiments: the first evaluates how emotional content and social narratives affect the user's virtual experience, and the second investigates the training potential of VR.

Chapter 4. In the fourth chapter three experiments aimed at creating a framework for the development of Dynamic Virtual Characters (DVCs) are presented. The dynamism of expression is tested first in VEs and then in VEs containing emotional content. Those factors are then tested in a clinical scenario with participants with social impairments.

Chapter 5. In the last chapter two experiments using first-person perspective embodiment are evaluated. The first tests the effects of illusory agency over a singing performance. The second tests the effects of vicarious touch on people with neural conditions that cause alterations in the sense of body ownership.

1 VIRTUAL REALITY AND PRESENCE

“When anything new comes along, everyone, like a child discovering the world, thinks that they’ve invented it, but you scratch a little and you find a caveman scratching on a wall is creating virtual reality in a sense. What is new here is that more sophisticated instruments give you the power to do it more easily.”

Sutherland, 1968.

In recent years the term Virtual Reality (VR) has become more common in our lives every day. Advances in the technologies for producing and visualizing 3D content (and the lowering of their price) have made possible a huge commercial expansion, not only in industry and laboratory research but also among the general public.

This sudden embedding of new technologies in ordinary life, however, seems to carry the impression that Virtual Reality and 3D graphics are recent discoveries. But the idea of a virtual reality was not born now, nor 50 years ago when the term was first mentioned. If we look closer, we can find the roots of VR much deeper in human history. These roots can be traced along two main lines: graphics and human cognition.

1.1 Virtual Reality rise

Indeed, if we look at the problem from the perspective of creating the illusion of something not actually present, the origin of VR, or what we could call analog VR, can be traced back to cave paintings, where humans used their imagination to communicate through paintings or words (Jerald, 2015). Moreover, the use of magical illusions to entertain and control the masses was common from the times of the Egyptians, Chaldeans, Jews, Romans, and Greeks. Even in the Middle Ages, magicians used smoke and mirrors to produce fake ghost and demon illusions to gull audiences (Low and Hopkins, 2009).

Taking a step closer to VR as we know it today, we can trace the static version of today's stereoscopic 3D TVs to stereoscope images, invented before photography, in 1832, by Sir Charles Wheatstone (Gregory, 2015). The device used mirrors angled at 45° to reflect images into the eyes from the left and right sides. Twenty years later David Brewster, the inventor of the kaleidoscope, used lenses to make a smaller hand-held stereoscope, presented at the 1851 Exhibition at the Crystal Palace and praised even by Queen Victoria. By 1856 Brewster estimated that over half a million stereoscopes had been sold (Brewster, 1856). This first 3D craze included various forms of the stereoscope, including self-assembled cardboard versions with moving images controlled by hand in 1860 (Zone, 2014). One company alone sold a million stereoscopic views in 1862. Brewster's design is conceptually the same as the 20th-century View-Master and today's Google Cardboard. In the case of Google Cardboard and similar phone-based VR systems, a mobile phone displays the images in place of physical image cards.

Figure 1.1: One of the first examples of a hand-held stereoscope, realized by Brewster in the 1850s.

1895 Midwinter Fair in San Francisco, and is still one of the most compelling technical demonstrations of an illusion to this day. The demo consisted of a room and a large swing that held approximately 40 people. After the audience seated themselves, the swing oscillated, and users felt motion similar to being in an elevator while they involuntarily clutched their seats. The swing, however, hardly moved at all, only the surrounding room moved, resulting in the sense of self-motion and motion sickness.

That was the same period in which the movie market expanded to the masses. Those years saw the famous episode of people screaming and running to the back of the room when they saw a virtual train coming at them through the screen in the short film L'Arrivée d'un train en gare de La Ciotat. Although the screaming and running away is more rumor than verified report, there was certainly hype, excitement, and fear about the new artistic medium, perhaps similar to what is happening with VR today. Innovation continued in the 1900s, moving beyond simply presenting visual images.

In the 1920s Edwin Link developed the first simple mechanical flight simulator, a fuselage-like device with a cockpit and controls that produced the motions and sensations of flying. However, the military for which he designed it was not initially interested, so he pivoted to selling to amusement parks. By 1935, the Army Air Corps had ordered six systems, and by the end of World War II Link had sold 10,000 systems. Link trainers eventually evolved into astronaut training systems and advanced flight simulators complete with motion platforms and real-time computer-generated imagery; the company is today Link Simulation & Training, a division of L-3 Communications. Since 1991, the Link Foundation Advanced Simulation and Training Fellowship Program has funded many graduate students in their pursuit of improving VR systems, including work in computer graphics, latency, spatialized audio, avatars, and haptics (Link, 2015).

As early 20th century technologies started to develop, science fiction and questions about what constitutes reality became popular. In 1935, for example, science fiction readers got excited about a future surprisingly similar to the one we now aspire to with head-mounted displays and other equipment, through the book Pygmalion’s Spectacles. The story opens with the words “But what is reality?” written by a professor friend of George Berkeley, the Father of Idealism (the philosophy that reality is mentally constructed) and for whom the University of California, Berkeley, is named. The professor then explains a set of eyeglasses along with other equipment that replaces real-world stimuli with artificial stimuli. The demo consists of a quite compelling interactive and immersive world where “The story is all about you, and you are in it” through vision, sound, taste, smell, and even touch. One of the virtual characters calls the world Paracosma, Greek for “land beyond-the-world.” The demo is so good that the main character, although skeptical at first, becomes convinced that it is no longer illusion, but reality itself. Today there are countless books ranging from philosophy to science fiction that discuss the illusion of reality. Perhaps inspired by Pygmalion’s Spectacles, McCollum patented the first stereoscopic television glasses in 1945. Unfortunately, there is no record of the device ever actually having been built.

In the 1950s Morton Heilig designed both a head-mounted display and a world-fixed display. The head-mounted display (HMD) patent (Heilig, 1960) claims lenses that enable a 140° horizontal and vertical field of view, stereo earphones, and air discharge nozzles that provide a sense of breezes at different temperatures as well as scent. He called his world-fixed display the Sensorama. The Sensorama was created for immersive film and it provided stereoscopic color views with a wide field of view, stereo sounds, seat tilting, vibrations, smell, and wind (Heilig, 1992).

In 1961, Philco Corporation engineers built the first working HMD that included head tracking. As the user moved his head, a camera in a different room moved so the user could see as if he were at the other location. This was the world’s first working telepresence system.

One year later IBM was awarded a patent for the first glove input device. This glove was designed as a comfortable alternative to keyboard entry, and a sensor for each finger could recognize multiple finger positions. Four possible positions for each finger with a glove on each hand resulted in 1,048,575 possible input combinations. Glove input, albeit with very different implementations, later became a common VR input device in the 1990s.

Starting in 1965, Tom Furness and others at the Wright-Patterson Air Force Base worked on visually coupled systems for pilots that consisted of head-mounted displays. While Furness was developing head-mounted displays at Wright-Patterson Air Force Base, Ivan Sutherland was doing similar work at Harvard and the University of Utah. One of the most famous HMDs, or more precisely BOOMs (Binocular Omni Orientation Monitors), was indeed invented by Ivan Sutherland: the “Sword of Damocles”, which he developed in 1968 (Sutherland, 1965). It had to be hung from the ceiling because it was too bulky to head-mount (a characteristic of BOOMs). Hence, it received its name after the story of Damocles who, with a sword hanging above his head by a single hair of a horse’s tail, was in constant peril. The Sword of Damocles was capable of tracking both the position of the user and his eyes, and of updating the image of its stereoscopic view according to the user’s position (Boas, 2013).
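The glove figure quoted above can be checked with a few lines of arithmetic. The sketch below assumes ten fingers with four distinguishable positions each. Reading the quoted 1,048,575 as the total minus one excluded configuration (for example, all fingers at rest) is an assumption made here for illustration; the source does not state how the number was derived.

```python
# Count the distinct finger configurations of a two-glove input device.
FINGERS_PER_HAND = 5
HANDS = 2
POSITIONS_PER_FINGER = 4


def glove_configurations(fingers: int, positions: int) -> int:
    """Number of distinct configurations when each finger is independent."""
    return positions ** fingers


total = glove_configurations(FINGERS_PER_HAND * HANDS, POSITIONS_PER_FINGER)
# Assumption: one configuration (e.g. all fingers at rest) carries no input.
usable = total - 1
print(total, usable)
```

Under these assumptions the total is 4 to the power of 10, and subtracting the single idle configuration gives the figure reported in the text.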

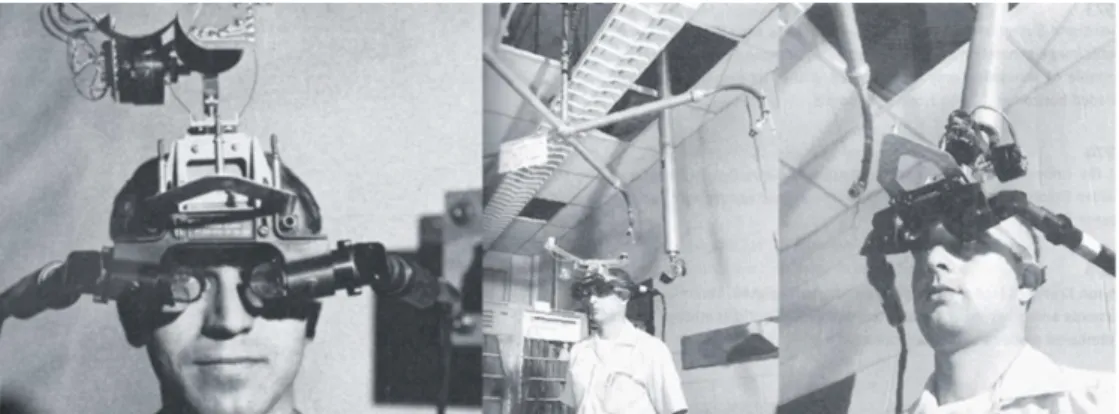

Figure 1.2: Ivan Sutherland’s Ultimate Display, also known as the “Sword of Damocles”

Dr. Frederick P. Brooks, Jr., inspired by Ivan Sutherland’s vision of the Ultimate Display (Sutherland, 1965), established a new research program in interactive graphics at the University of North Carolina at Chapel Hill, with the initial focus being on molecular graphics. This not only resulted in a visual interaction with simulated molecules but also included force feedback where the docking of simulated molecules could be felt. UNC has since focused on building various VR systems and applications with the intent to help practitioners solve real problems ranging from architectural visualization to surgical simulation.

In 1981, on a very small budget, NASA created the prototype of a liquid crystal display (LCD)-based HMD, which they named the Virtual Visual Environment Display (VIVED). NASA scientists simply disassembled commercially available Sony Watchman TVs and put the LCDs on special optics. These optics were needed to focus the image close to the eyes without effort. The majority of today’s virtual reality head-mounted displays still use the same principle. NASA scientists then proceeded to create the first virtual reality system by incorporating a DEC PDP 11-40 host computer, a Picture System 2 graphics computer (from Evans and Sutherland), and a Polhemus noncontact tracker. The tracker was used to measure the user’s head motion and transmit it to the PDP 11-40. The host computer then relayed these data to the graphics computer, which calculated new images displayed in stereo on the VIVED (Burdea and Coiffet, 2003).
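The tracker, host, and graphics stages described above can be illustrated with a minimal sketch. It is not the VIVED software: the function names, the yaw-only head pose, the axis convention, and the 64 mm interpupillary distance are all assumptions chosen to keep the example short. It only shows how one head-tracking sample can yield two eye viewpoints for a stereo pair.

```python
import math
from dataclasses import dataclass


@dataclass
class HeadPose:
    """One tracker sample: head position (metres) and yaw (radians)."""
    x: float
    y: float
    z: float
    yaw: float  # rotation about the vertical (y) axis


def read_tracker(sample):
    """Stage 1 (hypothetical): turn a raw tracker tuple into a HeadPose."""
    return HeadPose(*sample)


def host_relay(pose):
    """Stage 2: the host forwards the pose unchanged to the graphics stage."""
    return pose


def stereo_eye_positions(pose, ipd=0.064):
    """Stage 3: offset each eye by half the interpupillary distance.

    Assumed convention: at yaw 0 the head's right-hand direction is +x.
    """
    right = (math.cos(pose.yaw), 0.0, -math.sin(pose.yaw))
    half = ipd / 2.0
    left_eye = (pose.x - half * right[0], pose.y, pose.z - half * right[2])
    right_eye = (pose.x + half * right[0], pose.y, pose.z + half * right[2])
    return left_eye, right_eye


left, right = stereo_eye_positions(host_relay(read_tracker((0.0, 1.7, 0.0, 0.0))))
print(left, right)
```

A real system would track full orientation and render one image per eye from these viewpoints; the sketch stops at the viewpoints themselves.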

In 1982, Atari Research, led by legendary computer scientist Alan Kay, was formed to explore the future of entertainment. The Atari research team, which included Scott Fisher, Jaron Lanier, Thomas Zimmerman, Scott Foster, and Beth Wenzel, brainstormed novel ways of interacting with computers and designed technologies that would soon be essential for commercializing VR systems.

Jaron Lanier and Thomas Zimmerman left Atari in 1985 to start VPL Research (VPL stands for Visual Programming Language) where they built commercial VR gloves, head-mounted displays, and software. During this time Jaron Lanier coined the term “virtual reality”. In addition to building and selling head-mounted displays, VPL built the NASA-specified Dataglove, a VR glove with optical flex sensors for measuring finger bending and tactile vibrator feedback (Zimmerman et al., 1987). Scott Fisher joined the aforementioned VIVED project in 1985 and integrated his new type of sensing glove into the simulation.

In the 1990s, VR exploded with different companies focusing mainly on the market for professional research and location-based entertainment. Virtuality, Division, and Fakespace were examples of the better-known newly formed VR companies. Existing companies such as Sega, Disney, and General Motors, as well as numerous universities and the military, also began to experiment more extensively with VR technologies. Movies were made, numerous books were written, newspapers emerged, and conferences were formed, all focused exclusively on VR.

In 1993, Wired magazine predicted that within five years more than one in ten people would wear HMDs while traveling in buses, trains, and planes (Negroponte, 1993). It seemed VR was about to change the world and there was nothing that could stop it. Unfortunately, technology could not support the promises of VR. In 1996, the VR industry peaked and then started to slowly contract, with most VR companies, including Virtuality, going out of business by 1998. The first decade of the 21st century is known as the “VR winter.” Although there was little attention given to VR by mainstream media from 2000 to 2012, VR research continued in depth at corporate, government, academic, and military research laboratories around the world. The VR community started to turn to human-centered design with an extra emphasis on user research, and it became difficult to get a VR paper accepted at a conference without including some form of formal evaluation.

Thousands of VR-related research papers from this era contain a plethora of information that is unfortunately largely unknown to, and ignored by, those new to VR today.

A wide field of view was a major missing component of consumer HMDs in the 1990s, and without it users were just not getting the “magic” feeling of presence (Mark Bolas, personal communication, June 13, 2015). In 2006, Mark Bolas of USC’s MxR Lab and Ian McDowall of Fakespace Labs created a 150° field of view HMD called the Wide5, which the lab later used to study the effects of field of view on the user experience and behavior. For example, users can more accurately judge distances when walking to a target when they have a larger field of view (Jones et al., 2012).

The team’s research led to the low-cost Field of View To Go (FOV2GO), which was shown at the IEEE VR 2012 conference in Orange County, California, where the device won the Best Demo Award and was part of the MxR Lab’s open-source project that is the precursor to most of today’s consumer HMDs. Around that time, a member of that lab named Palmer Luckey started sharing his prototype on Meant to be Seen (mtbs3D.com) where he was a forum moderator and where he first met John Carmack (now CTO of Oculus VR) and formed Oculus VR. Shortly after that he left the lab and launched the Oculus Rift Kickstarter. The hacker community and media latched onto VR once again. Companies ranging from start-ups to the Fortune 500 began to see the value of VR and started providing resources for VR development, including Facebook, which acquired Oculus VR in 2014 for $2 billion. The new era of VR was born (Jerald, 2015).

The low-price launch of the Oculus Rift started what is commonly called the second wave of VR, which is still ongoing. Many companies started to launch products aimed at developing the original idea of the Ultimate Display, but this time prototyping is much more affordable and there are 25 years of research on which to build efficient solutions. Along with the commercial expansion, research is investing a huge amount of resources in the development of non-consumer devices such as spatially immersive CAVE-like installations or professional motion capture systems. It could be argued that company and research developments will ultimately affect the scientific community in a more direct way than the Kinect or the Wiimote affected the Human Computer Interaction (HCI) community. Those devices are indeed great for experimental prototypes, but the development of commodity VR could benefit the scientific community as immediately as the development of graphics cards driven by the gaming industry has. One example is the recent inclusion by nVidia and AMD of features in their graphics boards that support current and upcoming HMDs. It could be argued that the final technologies used in research will rapidly improve as displays and tracking hardware become mass produced and thus available at low prices with a more robust and ergonomic design (Anthes et al., 2016).

Figure 1.3: Taxonomy of the current hardware separated into input and output devices from Anthes et al., 2016

1.2 the impact of presence component on vr experience

The term VR usually refers to a computer-generated environment in which the user can perceive, feel and interact in a manner that is similar to a physical place. As seen in the previous section, this is achieved by combining stimulation over multiple sensory channels, such as sight, sound and touch, with force-feedback, motion tracking, and control devices. In an ideal VR system, the user would not be able to distinguish an artificial environment from its physical counterpart (Parsons et al., 2017). We have already observed how hardware systems have evolved in that direction, achieving ever more surprising results. However, the perfect technical reproduction of the senses is not the only feature that affects our perception of the surrounding world, and the path to a perfect artificial reconstruction of the world for all of our senses is still long. Significant advances in the perceptual fidelity of virtual environments, however, have been achieved over the last few years.

A key point in creating the illusion of being in a virtual space is the sense of presence. Presence is defined as the feeling of being inside a mediated world. In the same way that our “feeling present”, or consciously “being there”, in the physical world around us is based upon perception, physical action and activity in that world, so the feeling of presence in a technologically mediated environment is a function of those components (Lombard et al., 2015; Riva et al., 2014). When users experience presence, they feel that the technology has become part of their bodies and that they are experiencing the virtual world in which they are immersed. Moreover, they react emotionally and bodily (at least to some extent) as if the virtual world existed physically (Parsons et al., 2017).

As studies on presence developed, many theories were formed around the concept. For instance, Slater et al., 1994 underline the importance of the sense of “being here” and of isolation from the surrounding “real world”. Along the line of “exclusive presence”, Biocca, 1997 states that presence oscillates among the three poles of physical environment, virtual environment and imaginary environment. Users cannot be present in more than one of them at a time, so the longer they perceive themselves to be in the virtual environment, the stronger the sense of presence they feel.

Witmer and Singer, 1998 instead gave a dichotomous definition of presence related to attention. They describe presence as composed, on one side, of involvement, the psychological state that results from focusing attention on a coherent set of stimuli, activities or events; and, on the other side, of immersion, the psychological state of perceiving oneself to be enveloped by, included in, and interacting with a VE. They believe that both components are essential to raise the sense of presence, which they consider similar to the concept of selective attention.

Other theories on perception revolve around the concept of affordance (Flach and Holden, 1998; Schuemie and Mast, 1999; Zahorik and Jenison, 1998). It couples the perception of the environment with the actions an organism (i.e., a human or an animal) can perform in it. For instance, the sky affords flight to a bird but not to a human. In the same way, these theories describe the perception of tools as a function of their use in the task performed (Heidegger et al., 1962). In this light, the perception of VR is seen as centred on the interactions with virtual equipment and environment, and the perception of existing in that environment is stronger the more closely its responses resemble those of the real world in which we evolved (Zahorik and Jenison, 1998).

Another two lines of study are particularly centred on the virtual human (VH) factor. The first one stresses the role of interactions and the intrinsically social nature of action (Mantovani and Riva, 1999). The level of presence in this view is related to the users’ cultural expectations and other people’s interpretation of the VE. On the same path, Sheridan, 1999 proposed a more rationalistic approach with the distinction between objective and subjective reality. However, he still sees the estimation of reality as a continuous making and refining of our own mental model, based on senses and interaction with our surroundings (Sheridan, 1999).

The other line that revolves around human perception is the concept of embodied presence. Schubert et al., 1999 base their definition of presence on the cognition framework, and state that the mental representation of the environment is constructed around the perceived possibility to perform actions, based on perception and memory, i.e., the possibility to navigate and move one’s own body. In predicting the outcome of actions, humans have the ability to suppress contributions of the current environment to conceptualization, thus explaining why we can experience presence in a VE despite sensing conflicting features of the real environment (Schuemie et al., 2001). Most of these theories of presence have produced codified subjective measures of evaluation that proved very effective in scoring the user experience inside VR; for an overview see Schuemie et al., 2001.

1.3 the role of virtual humans in a virtual experience

Understanding the current work on technologies and perception in virtual environments is essential to develop environments in which the user can feel totally immersed and present. A key point in creating a sense of presence seems to be the human component, of the self and of others. It is hence crucial to understand the impact that the representation of the human figure has in a VE. Considering the depiction of our own body, in some immersive technologies such as CAVE-like systems this is inherent to the medium, since we are immersed in a virtual environment but can still see our own body. However, this is not always the case, especially with HMDs, which are nowadays the most widespread technology for VR. It hence seems crucial to find a way to reproduce the graphical aspect and the movements of an Avatar, the virtual counterpart of our physical body that we control to explore the VE. On the other side, equally important is the social interaction with Virtual Agents, the virtual characters in the story carried by the VE. Those are the most alive part of a surrounding and they can drastically affect the sense of presence in a VE (Schuemie et al., 2001).

Figure 1.4: on the left, two users in a CAVE-like system; on the right, an HMD with a stylized body representation

Avatar derives from the Sanskrit word avatarah, meaning “descent”, and refers to the incarnation (the descent into this world) of a Hindu god. A Hindu deity embodied its spiritual being when interacting with humans by appearing in either human or animal form. In the late twentieth century, the term avatar was adopted as a label for digital representations of humans. Although many credit Neal Stephenson with being the first to use avatar in this new sense in his seminal science fiction novel Snow Crash (1992), the term and concept actually appeared as early as 1984 in online multi-user dungeons, or MUDs (role-playing environments), and the concept, though not the term, appeared in works of fiction dating back to the mid-1970s. This thesis will explore concepts, research and ethical issues related to avatars as digital human representations. (The discussion here is limited to digital avatars, excluding physical avatars such as puppets and robots. Currently, most digital avatars are visual or auditory information, although there is no reason to restrict the definition as such.)

Within the context of human-computer interaction, an avatar is a perceptible digital representation whose behaviours reflect those executed, typically in real time, by a specific human being. An embodied agent, by contrast, is a perceptible digital representation whose behaviours reflect a computational algorithm designed to accomplish a specific goal or set of goals. Hence, humans control avatar behaviour, while algorithms control embodied agent behaviour. Both agents and avatars exhibit behaviour in real time in accordance with the controlling algorithm or human actions.

Not surprisingly, the fuzzy distinction between agents and avatars blurs for various reasons. Complete rendering of all aspects of a human’s actions (down to every muscle movement, sound, and scent) is currently technologically unrealistic. Only actions that can be tracked practically can be rendered analogously via an avatar; the remainder are rendered algorithmically (for example, bleeding) or not at all (minute facial expressions, for instance). In some cases avatar behaviours are under non-analogous human control; for example, pressing a button and not the act of smiling may be the way one produces an avatar smile. In such a case, the behaviours are at least slightly non-analogous; the smile rendered by the button-triggered computer algorithm may be noticeably different from the actual human’s smile. Technically, then, a human representation can be and often is a hybrid of an avatar and an embodied agent, wherein the human controls the consciously generated verbal and non-verbal gestures and an agent controls more mundane automatic behaviours (Bainbridge, 2004).
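The hybrid of avatar and embodied agent described above can be made concrete with a small data-structure sketch. The class, channel names, and source labels below are hypothetical and chosen only for illustration. The sketch separates three cases: tracked channels rendered analogously from the human, button-triggered behaviours rendered by a canned algorithm, and automatic behaviours left to the agent. Input that is neither tracked nor triggered is not rendered at all.

```python
from dataclasses import dataclass


@dataclass
class HybridRepresentation:
    tracked: set          # channels rendered analogously from tracking data
    triggers: dict        # button name -> (channel, canned behaviour)
    agent_defaults: dict  # channel -> automatic behaviour run by the agent

    def resolve(self, human_input):
        """Map each rendered channel to (value, controlling source)."""
        frame = {ch: (v, "agent") for ch, v in self.agent_defaults.items()}
        for name, value in human_input.items():
            if name in self.tracked:
                frame[name] = (value, "human, analogous")
            elif name in self.triggers and value:
                channel, canned = self.triggers[name]
                frame[channel] = (canned, "human, non-analogous")
            # Anything else is untracked and is simply not rendered.
        return frame


rep = HybridRepresentation(
    tracked={"head_yaw"},
    triggers={"smile_button": ("mouth", "canned_smile")},
    agent_defaults={"blink": "periodic", "mouth": "neutral"},
)
frame = rep.resolve({"head_yaw": 0.3, "smile_button": True, "scent": "pine"})
print(frame)
```

In this example the head rotation comes directly from the user, the smile is user-initiated but algorithmically rendered, and blinking remains entirely under agent control.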

After this chapter’s overview of some key points of VR experience development, this work continues with a survey on Virtual Humans and their role in a virtual experience.

2

VIRTUAL HUMANS: IMPACT AND CHALLENGES

To create realistic avatars and virtual humans, it is essential to keep in mind all the factors that make us perceive the human figure as realistic in a virtual environment. Virtual humans have in fact a crucial role in the virtual experience, and they could be exploited in many different areas.

However, before analysing in depth the essential factors we tested to provide some solutions to this challenge, it seems right to give an overview of how human representation in VR has changed during these years of rapid technological growth, and of the solutions provided by companies and academia.

2.1 the development of virtual humans

One of the earliest examples of modelling of the human figure in computer graphics is provided by ergonomic analysis (Magnenat-Thalmann and Thalmann, 2005). The figure in question was William Fetter’s Landing Signal Officer (LSO), developed for Boeing in 1959 (Fetter, 1982). The seven-jointed ‘First Man’, used for studying the instrument panel of a Boeing 747, enabled many pilot actions to be displayed by articulating the figure’s pelvis, neck, shoulders, and elbows.

The first use of computer graphics in commercial advertising instead took place in 1970, when this figure was used for a Norelco television commercial. The addition of twelve extra joints to ‘First Man’ produced ‘Second Man’. This figure was used to generate a set of animation film sequences based on a series of photographs produced by Muybridge, 1955. ‘Third Man and Woman’ was a hierarchical figure series with each figure differing by an order of magnitude in complexity. These figures were used for general ergonomic studies. The most complex figure had 1000 points and was displayed with lines to represent the contours of the body. In 1977, Fetter produced ‘Fourth Man and Woman’ figures based on data from biostereometric tapes. These figures could be displayed as a series of colored polygons on raster devices.

Cyberman (Cybernetic man-model) was developed by Chrysler Corporation to model human activity in and around a car (Blakeley, 1980). Although he was created to study the position and motion of car drivers, there was no check to determine whether his motions were realistic, and the user was responsible for determining the comfort and feasibility of the position after each operation. It was based on 15 joints and the position of the observer was pre-defined.

Combiman (Computerized biomechanical man-model) was specifically designed to test how easily a human can reach objects in a cockpit (Evans, 1976). Motions had to be realistic and the human could be chosen at any percentile from among three-dimensional human models. The vision system was very limited. Combiman was defined using a skeletal system of 35 internal links. Although the system indicated success or failure with each reach operation, the operator was required to determine the amount of clearance (or distance remaining to the goal).

Boeman was designed in 1969 by the Boeing Corporation (Dooley, 1982). It was based on a 50th-percentile three-dimensional human model. He could reach for objects like baskets, but a mathematical description of the object and the tasks was assumed. Collisions were detected during Boeman’s tasks and visual interferences were identified. Boeman was built as a 23-joint figure with variable link lengths.

Sammie (System for Aiding Man Machine Interaction Evaluation) was designed in 1972 at the University of Nottingham for general ergonometric design and analysis (Bonney et al., 1972). This was, so far, the best parametrized human model, and it presented a choice of physical types: slim, fat, muscled, etc. The vision system was highly developed, and complex objects could be manipulated by Sammie, which was based on 21 rigid links with 17 joints. The user defined the environment by either building objects from simple primitives, or by defining the vertices and edges of irregularly shaped objects. The human model was based on a measurement survey of a general population group.

Buford was developed at Rockwell International in Downey, California, to find reach and clearance areas around a model positioned by the operator (Dooley, 1982). The figure represented a 50th-percentile human model and was covered by CAD-generated polygons. The user could interactively design the environment and change the body position and limb sizes. However, repositioning the model was done by individually moving the body and limb segments. He had some difficulty in moving and had no vision system. Buford was composed of 15 independent links that had to be redefined at each modification.

In 1971 Parke produced a representation of the head and face at the University of Utah, and three years later had advanced parametric models far enough to produce a much more realistic face (Parke, 1974). Another popular approach was based on volume primitives. Several kinds of elementary volumes have been used to create such models, e.g. cylinders by Potter and Willmert, 1975 or ellipsoids by Computer Science and Herbison-Evans, 1986. Designed by Badler and Smoliar, 1979 of the University of Pennsylvania, Bubbleman is a three-dimensional human figure consisting of a number of spheres or bubbles. The model is based on the overlap of spheres, and the appearance (intensity and size) of the spheres varies depending on the distance from the observer. The spheres correspond to a second level in a hierarchy; the first level is the skeleton.

Representation of the head and face by Fred Parke, University of Utah, 1974 (Reproduced with permission of Fred Parke)

In the early 1980s, Tom Calvert, a professor of kinesiology and computer science at Simon Fraser University, Canada, attached potentiometers to a body and used the output to drive computer-animated figures for choreographic studies and clinical assessment of movement abnormalities. To track knee flexion, for instance, they strapped a sort of exoskeleton to each leg, positioning a potentiometer alongside each knee so as to bend in concert with the knee. The analog output was then converted to a digital form and fed to the computer animation system. Their animation system used the motion capture apparatus together with Labanotation and kinematic specifications to fully specify character motion (Calvert et al., 1982).
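The conversion from a potentiometer reading to a joint angle can be sketched as a linear calibration. This illustrates the general idea only and is not the procedure used by Calvert et al.: the function name, the two-point calibration, and the example digitizer counts are all assumptions.

```python
def adc_to_knee_angle(count, count_straight, count_bent, angle_bent_deg=90.0):
    """Map a digitized potentiometer reading to a knee flexion angle.

    Assumes the potentiometer is linear and was calibrated at two poses:
    leg straight (0 degrees) and knee bent to `angle_bent_deg`.
    """
    span = count_bent - count_straight
    if span == 0:
        raise ValueError("calibration readings must differ")
    return (count - count_straight) / span * angle_bent_deg


# Hypothetical calibration: 200 counts with the leg straight, 600 at 90 degrees.
samples = [200, 400, 600]
angles = [adc_to_knee_angle(c, 200, 600) for c in samples]
print(angles)
```

Each digitized sample would then drive the corresponding joint of the animated figure, one value per frame.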

In the beginning of the 1980s, several companies and research groups produced short films and demos involving Virtual Humans. Information International Inc, commonly called Triple-I or III, had a core business based on high resolution characters which could be used for digital film scanning and digital film output capabilities which were very advanced for the time. Around 1975, Gary Demos, John Whitney Jr, and Jim Blinn persuaded Triple-I management to put together a “movie group” and try to get some “Hollywood dollars”. They created various demos that showed the potential for computer graphics to do amazing things, among them a 3-D scan of Peter Fonda’s head, and the ultimate demo, Adam Powers, or “the Juggler”. In 1982, in collaboration with Philippe Bergeron, Nadia Magnenat-Thalmann and Daniel Thalmann produced Dream Flight, a film depicting a person (in the form of an articulated stick figure) transported over the Atlantic Ocean from Paris to New York. The film was completely programmed using the MIRA graphical language, an extension of the Pascal language based on graphical abstract data types. The film won several awards and was shown at the SIGGRAPH ’83 Film Show.

A very important milestone came in 1985, when the film Tony de Peltrie used facial animation techniques for the first time to tell a story. The same year, the Hard Woman video for Mick Jagger’s song was developed by Digital Productions and showed a nice animation of a woman. “Sexy Robot” was created in 1985 by Robert Abel & Associates as a TV commercial and imposed new standards for the movement of the human body (it introduced motion control).

In 1987, the Engineering Society of Canada celebrated its 100th anniversary. A major event, sponsored by Bell Canada and Northern Telecom, was planned for the Place des Arts in Montreal. For this event, Nadia Magnenat-Thalmann and Daniel Thalmann simulated Marilyn Monroe and Humphrey Bogart meeting in a café in the old town section of Montreal. The development of the software and the design of the 3-D characters (now capable of speaking, showing emotion, and shaking hands) became a full year’s project for a team of six. Finally, in March 1987, the actress and actor were given new life as Virtual Humans.

Figure 2.1: Virtual Marilyn in “Rendez-vous in Montreal” created by Nadia Magnenat-Thalmann and Daniel Thalmann in 1987.

In 1988 Tin Toy won the first Oscar (as Best Animated Short Film) for a piece created entirely within a computer. The same year, de Graf/Wahrman developed “Mike the Talking Head” for Silicon Graphics to show off the real-time capabilities of their new 4-D machines. Mike was driven by a specially built controller that allowed a single puppeteer to control many parameters of the character’s face, including mouth, eyes, expression, and head position. The Silicon Graphics hardware provided real-time interpolation between facial expressions and head geometry as controlled by the performer. Mike was performed live in that year’s SIGGRAPH film and video show. The live performance clearly demonstrated that the technology was ripe for exploitation in production environments. In 1989, Kleiser-Walczak produced Dozo, a (non-real-time) computer animation of a woman dancing in front of a microphone while singing a song for a music video. They captured the motion using an optically-based solution from Motion Analysis with multiple cameras to triangulate the images of small pieces of reflective tape placed on the body. The resulting output is the 3-D trajectory of each reflector in the space.
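The triangulation step mentioned above can be illustrated with a two-camera sketch. The source does not describe the algorithm used by the Motion Analysis system, so a simple midpoint method is substituted here: each camera contributes a viewing ray towards the marker, and the marker is estimated as the midpoint of the shortest segment between the two rays. The function name and the camera placements are assumptions.

```python
def triangulate_midpoint(o1, d1, o2, d2):
    """Estimate a 3-D marker position from two camera rays.

    Each ray is given by an origin `o` (camera centre) and a direction `d`
    pointing towards the marker. Returns the midpoint of the shortest
    segment joining the two rays.
    """
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    w = tuple(a - b for a, b in zip(o1, o2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be recovered")
    t1 = (b * e - c * d) / denom  # parameter of closest point on ray 1
    t2 = (a * e - b * d) / denom  # parameter of closest point on ray 2
    p1 = tuple(o + t1 * k for o, k in zip(o1, d1))
    p2 = tuple(o + t2 * k for o, k in zip(o2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(p1, p2))


# Two hypothetical cameras, 4 m apart, both observing one reflective marker.
marker = triangulate_midpoint((0, 0, 0), (1, 2, 3), (4, 0, 0), (-3, 2, 3))
print(marker)
```

Repeating this for every marker in every frame yields one 3-D trajectory per reflector. Production systems typically use more than two calibrated cameras and a least-squares solution.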

In 1989, in the film The Abyss, there is a sequence where the watery pseudopod acquires a human face. This represents an important step for future synthetic characters. In 1989, Lotta Desire, star of The Little Death and Virtually Yours established new accomplishments. Then, the Terminator II movie in 1991 marked a milestone in the animation of synthetic actors mixed with live action. In the 1990s, several short movies were produced, the best-known being Geri’s Game from Pixar which was awarded the Academy Award for Animated Short.

In those years the Jack software package was developed at the Center for Human Modeling and Simulation at the University of Pennsylvania, and was made commercially available by Transom Technologies Inc. Jack provided a 3-D interactive environment for controlling articulated figures. It featured a detailed human model and included realistic behavioural controls, anthropometric scaling, task animation and evaluation systems, view analysis, automatic reach and grasp, collision detection and avoidance, and many other useful tools for a wide range of applications.

In the 1990s, the emphasis shifted to real-time animation and interaction in virtual worlds. Virtual Humans began to inhabit virtual worlds and so did we. To prepare our own place in the virtual world, we first developed techniques for the automatic representation of a human face capable of being animated in real time using both video and audio input. The objective was for one’s representative to look, talk, and behave like us in the virtual world. Furthermore, the virtual inhabitants of this world should be able to see our avatars and to react to what we say and to the emotions we convey. The virtual Marilyn and Bogart were, at the time of those first reflections, 17 years old. The Virtual Marilyn acquired a degree of independent intelligence; in 1997 she even played the autonomous role of a referee announcing the score of a real-time simulated tennis match on a virtual court, contested by the 3-D clones of two real players situated as far apart as Los Angeles and Switzerland (Molet et al., 1999).

During the 1980s, the academic establishment paid only scant attention to research on the animation of Virtual Humans. Today, however, almost every graphics journal, popular magazine, or newspaper devotes some space to virtual characters and their applications (Magnenat-Thalmann and Thalmann, 2005). The graphical aspects of Virtual Characters have advanced enormously over the last decades, both in offline productions and in real-time applications, especially in the consumer field. An example that gives an immediate picture of this development in the gaming industry is the character of Lara Croft, whose evolution is taken as a case study to explore the concept of the Uncanny Valley in Dong and Jho, 2017 (Figure 2.2).

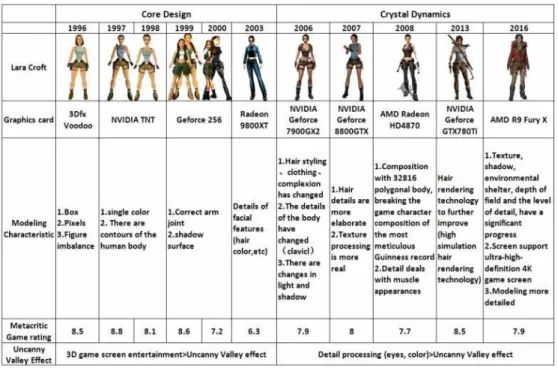

Figure 2.2: The evolution of the Lara Croft character over 20 years, the related advances in graphics, and the impact on the Uncanny effect. From Dong and Jho, 2017

Along with the graphical aspect, the animation of virtual characters has made huge steps over the years. Before going further, it is worth analysing some of the resources for motion capture in real time. Many technologies are now available that allow realistic motion for both the body and the face of virtual humans, and several of them have been exploited in the experiments of this thesis.

2.2 Motion Capture Systems

The term Motion Capture refers to the process of capturing the motion of the human body (or of part of it) at some resolution. Motion capture devices are based on either active sensing or passive sensing. The concept of active sensing is to place devices on the subject which transmit or receive real or artificially generated signals. When the device works as a transmitter, it generates a signal which can be measured by another device located somewhere in the surroundings. When it works as a receiver, it receives signals usually generated by some artificial source in the surroundings.

In passive sensing, the devices do not affect the surroundings. They merely observe what is already in the world, e.g. visible light or other electromagnetic wavelengths, and generally do not need the generation of new signals or wearable hardware (Moeslund, 2000).

The systems used to capture human motion consist of subsystems for sensing and processing, respectively. The operational complexity of these subsystems is typically related, so that high complexity in one of them allows for a corresponding simplicity in the other. This trade-off between the complexities also relates to the use of active versus passive sensing (Moeslund and Granum, 2001). Two kinds of trackers are particularly common nowadays in research and commercial productions: inertial trackers and optical trackers, with some combinations of the two.

Infrared (optical) trackers utilize several emitters fixed in a rigid arrangement while cameras or “quad cells” receive the IR light. To fix the position of the tracker, a computer must triangulate a position based on the data from the cameras. This type of tracker is not affected by large amounts of metal, has a high update rate, and low latency (Baratoff and Blanksteen, 1993). However, the emitters must be directly in the line-of-sight of the cameras or quad cells. In addition, any other sources of infrared light, high-intensity light, or other glare will affect the correctness of the measurement (Sowizral, 1995).
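As an illustration of the triangulation step, the following minimal sketch estimates a marker position from two camera rays by taking the midpoint of the shortest segment between them. It is not the method of any specific tracking system: the function name `triangulate_midpoint`, the camera centres, and the ray directions are all hypothetical, and a real system would also need calibrated cameras and typically more than two views.

```python
def triangulate_midpoint(o1, d1, o2, d2):
    """Estimate a 3-D point from two rays (hypothetical helper).

    o1, o2: camera centres; d1, d2: direction vectors pointing from each
    camera towards the observed marker. Returns the midpoint of the
    shortest segment joining the two rays.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w = [p - q for p, q in zip(o1, o2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom  # parameter along the first ray
    t = (a * e - b * d) / denom  # parameter along the second ray
    p1 = [o + s * k for o, k in zip(o1, d1)]
    p2 = [o + t * k for o, k in zip(o2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two assumed cameras 2 m apart, both looking at a marker at (1, 1, 5).
point = triangulate_midpoint([0, 0, 0], [1, 1, 5], [2, 0, 0], [-1, 1, 5])
```

With noisy measurements the two rays no longer intersect exactly, which is why the midpoint of the closest approach is used here instead of an exact intersection.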

Inertial trackers apply the principle of conservation of angular momentum. Inertial trackers allow the user to move about in a comparatively large working space where there is no hardware or cabling between a computer and the tracker. Miniature gyroscopes can be attached to HMDs, but they tend to drift (up to 10 degrees per minute) and to be sensitive to vibration. Yaw, pitch, and roll are calculated by measuring the resistance of the gyroscope to a change in orientation. If tracking of position is desired, an additional type of tracker must be used (Baratoff and Blanksteen, 1993). Accelerometers are another option, but they also drift and their output is distorted by the gravity field (Onyesolu et al., 2012).
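The effect of gyroscope drift can be sketched numerically. The example below integrates the angular rate reported by a sensor that is actually at rest but has a constant bias; the bias value is chosen to match the worst case of 10 degrees per minute quoted above, while the 100 Hz sampling rate and the function name `integrate_yaw` are assumptions made only for illustration.

```python
def integrate_yaw(rates_deg_s, dt):
    """Integrate angular-rate samples (deg/s) into a yaw angle (deg)."""
    yaw = 0.0
    for rate in rates_deg_s:
        yaw += rate * dt
    return yaw


# Assumed setup: a stationary gyroscope sampled at 100 Hz for one minute.
dt = 0.01                      # seconds between samples (assumption)
bias = 10.0 / 60.0             # deg/s, i.e. 10 degrees per minute
samples = [bias] * 6000        # true rotation is zero; only bias is measured
drift_after_one_minute = integrate_yaw(samples, dt)
```

Because the error grows with integration time, the estimated yaw is off by roughly 10 degrees after one minute even though the sensor never moved, which is why inertial trackers are often combined with an absolute reference such as an optical system.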

2.2.1 Body Motion Capture

Optitrack

The Optitrack full body motion capture system consists of multiple infrared cameras that are connected to a PC via USB. A minimum of 6 cameras is recommended in order to track the 34 markers of the adaptable skeleton with predefined structure (Spanlang et al., 2010). Optitrack V100 cameras consist of a 640 × 480 black-and-white CMOS image capture chip, an interchangeable lens, an image processing unit, a synchronization input and output to synchronize with other cameras, an infrared LED source, and a USB connection for controlling the camera capture properties and transmitting the processed data to a PC. Lenses with fields of view (FOV) from 45 to 115 degrees can be used with these cameras. The CMOS capture chip is sensitive to infrared light.

Figure 2.3: Optitrack motion capture system design with only upper cameras. Image credit: VR Fitness Insider

The latest Optitrack cameras can deliver images at a frame rate of 100 Hz. At this rate the USB connection can transmit the complete images of only one camera. In order to work with multiple cameras, the images need to be pre-processed on the camera by a threshold method, so that only 2D marker positions are transferred. The data delivered by, say, a 12-camera system is reduced from several Mb/s to a few Kb/s by this pre-processing step. Dedicated pre-processing also reduces the latency of the system. More traditional optical tracking systems can suffer from higher latencies if all images are first transferred to a PC and processed there.
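The idea of on-camera thresholding can be sketched as follows. This is a minimal illustration, not the Optitrack firmware: the function name `marker_centroids`, the 4-connected flood fill, and the tiny test image are assumptions. The sketch keeps only pixels brighter than a threshold, groups them into blobs, and returns one 2D centroid per blob.

```python
def marker_centroids(image, threshold):
    """Return (row, col) centroids of 4-connected blobs brighter than threshold."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Flood fill to collect every pixel of this bright blob.
            stack, pixels = [(r, c)], []
            seen[r][c] = True
            while stack:
                y, x = stack.pop()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            n = len(pixels)
            centroids.append((sum(p[0] for p in pixels) / n,
                              sum(p[1] for p in pixels) / n))
    return centroids


# Assumed 4 x 6 grayscale frame containing two bright markers.
frame = [
    [0,   0,   0, 0, 0,   0],
    [0, 200, 220, 0, 0,   0],
    [0, 210, 230, 0, 0,   0],
    [0,   0,   0, 0, 0, 250],
]
markers = marker_centroids(frame, threshold=128)
```

Only the short list of centroids would need to leave the camera, instead of all 640 × 480 pixel values of every frame, which is the source of the bandwidth reduction described above.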

The Optitrack cameras can work in different image processing modes: object, segment, and grayscale precision modes. Object mode is used to extract markers at high speed. Segment mode is for extracting reflective stripes, which is not common in motion capture. Precision mode delivers more grayscale information about the marker, so that the software can identify its position with higher precision.
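One common way in which grayscale information can improve precision is an intensity-weighted centroid, which locates a marker at sub-pixel resolution. The source does not state that Optitrack's precision mode works this way, so the sketch below is only an assumed illustration; the function name `weighted_centroid` and the sample patch are hypothetical.

```python
def weighted_centroid(patch):
    """Return the intensity-weighted (row, col) centroid of a grayscale patch."""
    total = sum(sum(row) for row in patch)
    if total == 0:
        raise ValueError("patch contains no signal")
    row_pos = sum(y * value for y, row in enumerate(patch) for value in row) / total
    col_pos = sum(x * value for row in patch for x, value in enumerate(row)) / total
    return row_pos, col_pos


# Assumed 3 x 3 patch around one marker, brighter on its right-hand side.
patch = [
    [0,  50,   0],
    [50, 200, 100],
    [0,  50,   0],
]
row_pos, col_pos = weighted_centroid(patch)
```

A purely binary centroid of the same five lit pixels would sit exactly at the central pixel, whereas the weighted estimate shifts towards the brighter side by a fraction of a pixel.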

The system described here, from Spanlang et al., 2010, is reported for its similarity to the one used in Chapter 5. It has since evolved further: the latest Optitrack cameras have a resolution of 4.1 MP, a frame rate of 180 fps, and a horizontal field of view of 51 degrees, can track 170 LEDs over an area of 100’ × 100’ (30 meters × 30 meters), and also benefit from active LED marker