June 22, 2017

PhD Course in

BioRobotics

Academic Year

2016-2017

Wearable Sensors for Gesture Recognition

in Assisted Living Applications

Author:

Alessandra Moschetti

Supervisor:

Dr. Filippo Cavallo

"You can’t connect the dots looking forward. You can only connect them looking backwards. So you have to trust that the dots will somehow connect in your future. You have to trust in something: your gut, destiny, life, karma, whatever. Because believing that the dots will connect down the road will give you the confidence to follow your heart, even when it leads you off the well worn path."

Scuola Superiore Sant’Anna

Abstract

BioRobotics Institute

Wearable sensors for gesture recognition in assisted living applications

by Alessandra MOSCHETTI

Several technological solutions have been proposed to face the future demographic challenge and guarantee good-quality and sustainable health care services. Among the different devices, wearable sensors and robots are gaining a lot of attention. Thanks to low cost and miniaturisation, the former have been widely investigated in Ambient Assisted Living scenarios to measure physiological and movement parameters, and several wearable sensors are already used by people every day. The latter have been proposed in recent years to assist elderly people at home, as they can perform different types of tasks and interact both physically and socially with humans. Robots should be able to assist persons at home, helping them in physical activities, as well as to entertain and monitor them.

To increase the abilities of robots, it is important to make them aware of the environment that surrounds them, especially when working with people. Robots must have a strong perception that allows them to interpret what users are doing, so that they can monitor elderly persons and interact properly with them. Wearable sensors can be used to increase the perception abilities of robots, enhancing their monitoring and interaction capabilities.

In this context, this dissertation describes the use of wearable sensors to recognise human movements and gestures that can be used by the robot to monitor people and interact with them.

Regarding monitoring tasks, hand-worn sensors were used to recognise daily gestures. Initially, the performance of wearable sensors alone was investigated by means of extensive experimentation in a realistic environment to assess whether these sensors can give useful information. In particular, a mix of hand-oriented gestures and eating modes was chosen, all involving the movement of the hand to the head or mouth. Despite the similarity of the gestures, adding a sensor worn on the index finger to the commonly used wrist sensor made it possible to recognise the different gestures, with both supervised and unsupervised machine learning algorithms, while keeping obtrusiveness low.

Then, the use of the same sensors was evaluated in a system composed of the wearable sensors and a depth camera placed on a mobile robot. In this case, it was possible to see how sensors placed on the user can help the robot to improve its perception ability in more realistic conditions. An experiment was performed with the moving robot placed in front of and to the side of the user, thus adding noise to the data and occluding the dominant arm of the user. In this case, the wearable sensors placed on the wrist and on the index finger provided useful information to increase the accuracy in distinguishing among ten different activities, also overcoming the problem of occlusion that can affect vision sensors.

Finally, the feasibility and perceived usability of a human-robot interaction task carried out by means of wearable sensors were investigated. In particular, wearable sensors on the feet were used to evaluate real-time human gait parameters, which were then used to control and modulate the robot motion in two different tasks. Tests were carried out with users, who expressed a positive evaluation of the performance of the system. In this case, the use of wearable sensors allowed the robot to move according to the user's movements, without the need for links between the robot and the person.

Through the implementation and evaluation of these monitoring and interaction tasks, it can be seen how wearable sensors can increase the amount of information that the robot can perceive about the users. This dissertation is, therefore, a first step in the implementation of a system composed of wearable sensors and a robot that can help people in daily life.

Contents

Abstract

1 Introduction

1.1 Demographic context

1.2 User needs

1.2.1 Primary Stakeholders

1.2.2 Secondary Stakeholders

1.2.3 Quaternary Stakeholders

1.2.4 Potential of AAL technologies on perceived Quality of Life

1.3 AAL Technologies

1.3.1 Wearable Sensors

Wearable sensors in daily activity recognition

Management of chronic diseases

1.3.2 Service Robots

Domestic Robots

Toward more natural human-robot interaction

1.4 Human-Oriented Perception

1.5 Scientific Challenges of this PhD Work

2 Sensing Technologies 16 2.1 Hand Gesture Recognition Technologies . . . 16

2.1.1 Glove-based system . . . 18

2.1.2 IMU-based system . . . 19

2.1.3 EMG-based and hybrid systems . . . 19

2.1.4 Smartwatch . . . 20

2.2 The SensHand . . . 22

2.3 Gait Analysis Technologies . . . 24

Pressure and Force Sensors . . . 24

Inertial Sensors . . . 25

Other sensors . . . 25

2.4 The SensFoot . . . 26

3 Daily Gesture Recognition 28 3.1 Related works . . . 29

3.2 Experimental Protocol . . . 35

3.3.1 Signal Processing and Classification . . . 39

3.3.2 Results . . . 41

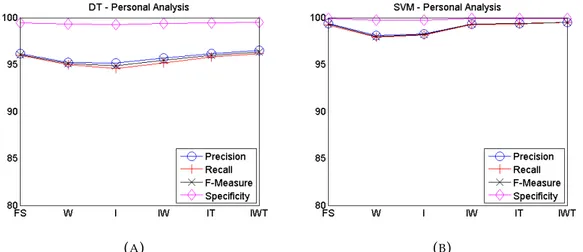

Personal Analysis . . . 41

Impersonal Analysis . . . 42

3.3.3 Discussion . . . 46

3.4 Comparison of Supervised and Unsupervised Approach Using Index Finger and Wrist Sensors . . . 49

3.4.1 Data Analysis and Classification . . . 49

Feature Extraction . . . 50

Classification . . . 50

Feature Space Visualisation . . . 51

3.4.2 Results . . . 52

Supervised Analysis Results . . . 52

Unsupervised Analysis Results . . . 53

Features’ Space Analysis . . . 55

3.4.3 Discussion . . . 56

3.5 Conclusions . . . 57

3.6 Acknowledgement . . . 57

4 Multiple Sensing Strategies to improve monitoring and interacting abilities

4.1 Related works

4.2 System Description

4.2.1 Mobile Robot

4.2.2 SensHand

4.2.3 Data Processing Module

4.3 Experimental Protocol

4.4 Signal Processing and Classification

4.4.1 Feature Extraction

User Location

Skeleton Activity Features

Inertial Features

4.4.2 Classification

Classification with Skeleton and Localization Data

Classification with Inertial Data

Fusion at Decision Level

4.5 Results and Discussion

4.6 Conclusion

4.7 Acknowledgement

5 Human Robot Interaction through SensFoots

5.1 Related works

5.2 System Description

5.2.2 SensFoot

5.2.3 Data Processing Module

Gait Parameters Extraction

Robot Control

5.3 Experimental Protocol

5.4 Results

5.5 Conclusion

5.6 Acknowledgement

6 Conclusion

A Publications and Other Activities

A.1 List of Publications

A.1.1 Publication on Journals and Book Chapters

ISI JOURNAL

BOOK CHAPTER

A.1.2 Publication on Conference Proceedings

A.2 Other Activities

A.2.1 Publications

A.2.2 Participation to Conference and other events

A.2.3 Summer Schools

A.2.4 Other Projects

B Results of gesture recognition with different combination of Sensors

C Results of Different Combination of Features in Multi-modal Sensors Approach

D User Questionnaire

References

List of Figures

1.1 Population pyramids, EU-28, 2014 and 2080 (% of total population) [1]

1.2 Model of QoL during ageing and the potential effect when AAL devices are involved in preventive, supporting and independent living scenarios [2]

1.3 Example of AAL scenarios [3]

1.4 Flow-chart of HRI [4]

2.1 Hand Devices: (A) CyberGlove [5], (B) Android Smartwatch [6], (C) Inertial Ring [7], (D) Accelerometer Glove [8], (E) sEMG Sensors [9], (F) 5DT Glove [10].

2.2 Different version of the SensHand

2.3 Screenshots of the interface used for the acquisition of data

2.4 The SensFoot device

3.1 Placement of inertial sensors on the dominant hand and on the wrist. In the circle a focus on the placement of the SensHand is represented, while in the half-body figure the position of the wrist sensor is shown.

3.2 Focus on grasping of objects involved in the different gestures (A) grasp some chips with the hand (HA); (B) take the cup (CP); (C) grasp the phone (PH); (D) take the toothbrush (TB).

3.3 Example of (A) eating with the hand gesture (HA); (B) drink from the cup (CP); (C) answer the telephone (PH); (D) brushing the teeth (TB).

3.4 Precision, recall, F-measure and specificity of personal analysis for (A) DT and (B) SVM.

3.5 Precision, recall, F-measure and specificity of impersonal analysis for (A) DT and (B) SVM.

3.6 Precision, recall, F-measure and specificity of SVM impersonal analysis for (A) HA; (B) GL; (C) FK; (D) SP; (E) CP; (F) PH; (G) TB; (H) HB; (I) HD.

3.7 Overview of the considered configuration of sensors.

3.8 Comparison of Supervised and Unsupervised Analysis (k=9): F-Measure (A) Precision (B) Recall (C).

3.9 Normalised Mutual Information (NMI) computed for KM, SOM, and HC, assuming different values for k.

3.10 Confusion matrix for k = 9 (A) K-means (B) self-organizing map (C) hierarchical clustering.

3.11 Features space representation considering the mean values of each gesture (circle) and the centroids (diamond) on the plane of the first, second and third principal component. The gray grid represents the standard deviation

4.1 Overall software architecture of the system.

4.2 The DoRo robot. The depth camera is mounted on its head.

4.3 Synchronization of heterogeneous data: at time $t_m$, an array of data is provided for each timestamp $t^{n}_{m-1}$, composed of IMU and Skeleton data. Skeleton data are computed at $t^{n}_{m-1}$ as linear interpolation between $t_{m-1}$ and $t_m$

4.4 Representation of the experimental setting. It includes the DoRo robot, and the SensHand. Only kitchen, bedroom, and living room were considered.

4.5 Software architecture of the activity recognition system. Two independent classifiers are trained on location, skeleton, and inertial data. A decision-level fusion approach is used to exploit the heterogeneous information. The dotted arrow refers to the configurations where the location features are used with the inertial features in the independent classifier.

5.1 The Pepper Robot (SoftBank).

5.2 Gait parameters [11].

5.3 Robot control scheme.

5.4 Scheme of following task (A) and follow-me task (B).

5.5 Example of tests with users (A), and a sequence from following task (B) and from follow-me task (C).

5.6 Occurrence of the answers for each question.

5.7 Values of the turning angle for each user during the path for the sensor placed on the left foot (A) and on the right foot (B).

D.1 Questionnaire submitted to the user at the end of the tests with the Pepper robot

List of Tables

2.1 Summary of technologies used in hand gesture recognition in terms of technology adopted, advantages and disadvantages . . . 22 2.2 Summary of existing technologies for gait analysis and measured

pa-rameters. . . 26 3.1 Activity recognition: Related Works . . . 32 3.2 Summary of the presented related work in terms of activities to be

recog-nised, learning approach and use of only inertial sensors (PA= Physical Activities, HOA= Hand-Oriented Activities, EM= Eating Modes, S= Su-pervised, U= UnsuSu-pervised, Y=Yes, N=No) . . . 33 3.3 List of the acronyms used for the chosen activities. . . 37 3.4 Combination of sensors used for the analysis for each model. . . 40 3.5 Average F-measure and accuracy of personal analysis with standard

de-viation (SD). . . 41 3.6 Average F-measure and accuracy of LOSO analysis with standard

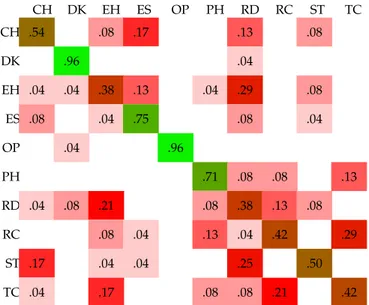

devi-ation (SD). . . 43 3.7 Values of precision, recall, F-Measure of LOSO model SVM IW . The

gestures are classified as follows: HA stands for Hand, GL stands for Glass, FK stands for Fork, SP stands for Spoon, CP stands for Cup, PH stands for Phone, TB stands for Toothbrush, HB stands for Hairbrush and HD stands for Hair dryer. . . 46 3.8 Comparison of supervised and unsupervised analysis (K = 9) in terms

of accuracy, F-measure, precision and recall, computed as the mean value 52 4.1 Summary of works combining inertial and depth data presented in

lit-erature. . . 59 4.2 List of the acronyms used for the chosen activities and locations where

they were performed (B: Bedroom, L: Living Room, K: Kitchen). When the depth camera is considered, activities are considered different ac-cording to the relative position between robot and user (F: Front, S: Side). 65 4.3 Description of the adopted configurations. . . 66 4.4 Classification Results according to different features in terms of

Accu-racy, F-measure, Precision and Recall (S=Skeleton, W=Wrist, L=Location, I=Index) . . . 69

4.5 The confusion matrix using only the robot (i.e. skeleton and location features). F = Front, S = Side

4.6 The confusion matrix using the robot (i.e. skeleton and location features) and the wrist only: (S&L)+W. F = Front, S = Side

4.7 The confusion matrix using the combination of all the features (skeleton, location, wrist, and index). F = Front, S = Side

5.1 Results of the questionnaires made after the experimentation.

5.2 Linear regression analysis.

B.1 Values of precision, recall, F-Measure of LOSO model SVM FS.

B.2 Values of precision, recall, F-Measure of LOSO model SVM W.

B.3 Values of precision, recall, F-Measure of LOSO model SVM I.

B.4 Values of precision, recall, F-Measure of LOSO model SVM IT.

B.5 Values of precision, recall, F-Measure of LOSO model SVM IWT.

C.1 The confusion matrix using only features from the Wrist.

C.2 The confusion matrix using features from the wrist and location.

C.3 The confusion matrix using the robot (i.e. skeleton and location features) and the wrist with location: (S&L)+(W&L). F = Front, S = Side

C.4 Values of F-measure, precision, and recall using the robot (i.e. skeleton and location features): (S&L). F = Front, S = Side

C.5 Values of F-measure, precision, and recall using the Wrist: W.

C.6 Values of F-measure, precision, and recall using the robot (i.e. skeleton and location features) and the wrist: (S&L) + W. F = Front, S = Side

C.7 Values of F-measure, precision, and recall using the Wrist and the location: W+L.

C.8 Values of F-measure, precision, and recall using the robot (i.e. skeleton and location features) and the wrist plus location: (S&L) + (W&L). F = Front, S = Side

C.9 Values of F-measure, precision, and recall using the robot (i.e. skeleton and location features) and the wrist plus index finger and location: (S&L) + (W&I&L). F = Front, S = Side

Chapter 1

Introduction

1.1 Demographic context

Thanks to recent advances in medicine, life expectancy has grown in recent years. Low mortality, together with low fertility, has led to an increase in the percentage of the population aged 65 and older, with a particular increase in the number of very old persons (aged 85 and over) [12]. In particular, the share of very old persons is projected to increase from 5.1% in 2014 to 12.3% by 2080. As shown in Figure 1.1 [1], the working population will significantly decrease in the coming years, thereby increasing the burden on working-age people to sustain the dependent population. The ratio of working-age persons to elderly persons will go from 4:1 in 2014 to 2:1 in 2080 [1]. These demographic trends will have an impact on labour markets, pensions and provisions for healthcare, housing and social services.

FIGURE 1.1: Population pyramids, EU-28, 2014 and 2080 (% of total population) [1]

As a matter of fact, ageing can cause decreases in people's physical and cognitive abilities, thus increasing the need for care. The greater need for care will lead to a higher demand for nurse practitioners (+94% in 2025) [13] and physician's assistants (+72% in 2025) [14], as well as an increased need for a higher level of care and for future assistance. Today, good-quality care and highly sustainable healthcare services are increasingly imperative in EU countries [15].

Since the majority of elderly people would prefer to stay in their homes as long as possible, it is important to prevent the deterioration of chronic disease, to support elderly persons in daily activities, and to delay entry into institutional care facilities [16]. It is, therefore, essential to determine how to ensure that longer lives will also mean an increase in the number of healthy years. It is important to find solutions that can help people to stay healthy and independent, to enjoy their life, and to continue to feel part of society.

In recent years¹, several efforts have been made to find solutions to face the current socioeconomic context, and in this setting, the Ambient Assisted Living (AAL) model is gaining a foothold. The main characteristic of the AAL approach is to investigate the use of technologies to help elderly people, informal caregivers, and professional service providers with benefits such as:

• improving the efficiency of health and social care for older people;

• extending independence and autonomy of elderly persons in need of care;

• improving the lifestyle of older people, enhancing their Quality of Life (QoL);

• helping caregivers to monitor users, optimising time and tasks, reducing the care burden;

• reducing costs to society, avoiding early institutionalisation due to the decrease in physical and cognitive abilities.

In the past years, several AAL research efforts have focused on this approach. According to this vision, AAL could support elderly people in staying independent and active and help them enjoy more years of healthy life. Technologies could indeed monitor their health, assist them in executing daily life activities and support them in maintaining social contacts and being involved in community life. The studies carried out in this field show the great potential and the possible benefits of AAL for society [17].

1.2 User needs

AAL solutions² span from smart wearable and environmental sensors to social robots. It is a very wide field, where different solutions can be used to help the different actors in the management of elderly people. In order to design services and technological solutions that will be effectively deployed and used, it is important to analyse all stakeholders' needs and requirements. There are different actors in the chain of elderly care; in particular, four groups can be identified:

• Primary Stakeholders: including older users and their informal caregivers (i.e. their families);

• Secondary Stakeholders: including service providers;

• Tertiary Stakeholders: including industries producing AAL technologies and all the organisations supplying goods;

• Quaternary Stakeholders: including policy makers, insurance companies and all other organisations that analyse the economic and legal context of AAL.

¹ Adapted from A. Moschetti, L. Fiorini, M. Aquilano, F. Cavallo, and P. Dario. Preliminary findings of the AALIANCE2 ambient assisted living roadmap. In Ambient assisted living (pp. 335-342). Springer International Publishing. (2014).

² Adapted from F. Cavallo, A. Moschetti, M. Aquilano, P. Dario et al. "AALIANCE2 Roadmap", available

In particular, the main societal drivers are represented by the primary, secondary and quaternary stakeholders. Analysing their needs is the starting point to find motivations and new services that will foster the use of AAL devices in daily living.

1.2.1 Primary Stakeholders

This group is made up of elderly persons and all the unpaid persons who help them in managing their life. These people are the starting point in the design chain of services and AAL solutions. Old persons have a higher risk of suffering from chronic diseases, and their independence may be reduced, but they still need to have the opportunity to enjoy life and stay at home. According to the research carried out for the AALIANCE2 Roadmap, there are several important needs.

Old persons are prone to premature degeneration of both physical and cognitive health and are therefore more at risk of incidents, especially falls. Improving the safety of their domestic environment, carrying out physical exercises and maintaining good motor abilities and balance are crucial to reduce the probability of injuries. These prevention activities, together with help in managing the chronic diseases that often affect them, will allow them to stay longer at home or in the environment they choose.

Moreover, elderly people are often prone to depression and to perceived loneliness and vulnerability. Reducing these negative feelings is important, together with increasing social and active participation in community life. It is important to enable older people to be part of society as long as possible, so that they are not considered a burden for their families and for society itself. Maintaining active ageing would indeed benefit both them and society. In this context, policy makers should promote preventive actions to preserve workers' health, delay retirement (when they want to) and enable older persons to be active in their families or in voluntary associations. Older people do not want to feel isolated; they want to be part of the community and of their family.

New care and service systems have to be organised considering also the economic aspect, since elderly people are often at risk of poverty. AAL services should, therefore, guarantee senior citizens' right to be cared for, maintaining dignity and an adequate QoL and health.

These services should also help the informal caregivers (family members and community volunteers) who support elderly persons, allowing them to stay longer at home in a friendly environment. In EU countries, these persons undertake almost 60% of care requests [18], managing different tasks, from health care to support for Activities of Daily Living (ADL). Caring for dependent individuals at home can lead to many complications for the informal caregivers, such as emotional distress and physical health problems [16]. It is, therefore, necessary to develop policies and organise healthcare and services to support the primary stakeholders in daily life.

1.2.2 Secondary Stakeholders

Secondary stakeholders provide services to elderly persons in need of care. They can do that in different ways according to the health, personal preferences, and financial situations of the persons who receive the services. In particular, three kinds of care can be identified: home care, long-term care and intermediate solutions. All these forms of service provision involve many professional caregivers. Due to the increasing demand for care [19], it is essential to provide them with the right tools to assist and monitor more elderly people in an efficient way. AAL devices can help service providers to renew their services and to integrate and coordinate with the other actors of the care chain. Technological solutions should, therefore, help secondary stakeholders in:

• monitoring elderly persons in terms of health condition and critical situations;

• supporting healthy ageing by helping elderly people to carry out activities;

• monitoring the drug intake;

• recognising the activities carried out by the users to monitor whether the person is maintaining a healthy lifestyle or to prevent dangerous situations and a decrease in physical and cognitive abilities.

AAL services should therefore be designed to help care providers to face the demand for care while increasing the quality of the delivered care. These solutions can also give the opportunity to monitor the users 24/7 by using sensors that make it possible to check on the user's conditions and daily living.

1.2.3 Quaternary Stakeholders

Quaternary stakeholders have a key role in the future development and deployment of ICT for ageing. Technological solutions can help them to face the challenge of the ageing society, but in order to do that these stakeholders need to address some issues. Due to the increasing demand for care and the economic crisis, public health care and socio-medical services are becoming insufficient and inefficient. It is, therefore, necessary to present new policies to reorganise services, including AAL solutions, and help those people who cannot afford these kinds of services. The proposed services have to be accessible to all persons, including those living in rural areas, who often have problems accessing community services. The development of smart and friendly cities can be a solution, implying an improvement of the infrastructure to help all citizens to really enjoy and be part of the community. Moreover, quaternary stakeholders need to address issues like security, privacy, ethics, standardisation, and certification to help the real deployment of technological solutions and AAL services.

1.2.4 Potential of AAL technologies on perceived Quality of Life

AAL solutions can be introduced in the healthcare chain to improve the current care system and to help the main stakeholders. AAL technologies have, in fact, the potential to improve the QoL by supporting persons in their daily life, enabling participation in activities at home and in the community, and improving the cost-effectiveness and quality of health and social services [20].

It is important, however, to identify innovative solutions that can impact on the QoL of old persons. In particular, in the AALIANCE2 Project, three main areas have been identified to group solutions used to support elderly people, namely Prevention, Compensation and Support, and Independent and Active Ageing [2].

Due to disabilities and morbidities, QoL would normally decrease after a certain age as shown by the violet line in Figure 1.2. The blue line represents the increase in the QoL that can be obtained when preventive actions are undertaken, whereas the red line shows how the engagement of compensation or support actions can influence the QoL. Finally, the yellow line highlights how independent and active ageing can slow down the decrease of the QoL. The green arrow shows how the engagement of all the three actions can increase the QoL and slow down its decrease. All these actions can be supported by technological devices, demonstrating that the adoption of AAL technologies can help people in improving the perceived QoL.

FIGURE 1.2: Model of QoL during ageing and the potential effect when AAL devices are involved in preventive, supporting and independent living scenarios [2]

1.3 AAL Technologies

The growing demand for care and the preference to stay at home as long as possible have pushed the request for development and deployment of assistive technologies. The market for AAL technologies is projected to exceed USD 3.9 billion by 2020 [21]. The market for medical electronics is expected to reach USD 4.41 billion by 2022 [22], whereas the smart home market, which has been experiencing steady growth, is expected to reach USD 121.73 billion by 2022 [23]. Similarly, the telehealth market was valued at USD 2.2 billion in 2015 and is predicted to reach USD 6.5 billion by 2020, with an annual growth rate of 24.2% [24].
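As a quick consistency check on the telehealth figures quoted above, the compound annual growth rate can be recomputed from the two endpoint values. The following is only a minimal sketch: the function names `cagr` and `project` are hypothetical helpers introduced here for illustration, and the only inputs are the numbers already given in the text (USD 2.2 billion in 2015, USD 6.5 billion in 2020, 24.2% per year).

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted in the text (USD billion); everything else is illustrative.
TELEHEALTH_2015 = 2.2
TELEHEALTH_2020 = 6.5
QUOTED_RATE = 0.242

# Rate implied by the two endpoint values over the 5-year span.
implied_rate = cagr(TELEHEALTH_2015, TELEHEALTH_2020, 5)

# 2020 value obtained by compounding the quoted rate from the 2015 value.
projected_2020 = project(TELEHEALTH_2015, QUOTED_RATE, 5)

print(f"implied annual growth rate: {implied_rate:.1%}")
print(f"projected 2020 market size: USD {projected_2020:.2f} billion")
```

Under these assumptions the implied rate and the quoted 24.2% agree to within rounding, so the three telehealth figures are mutually consistent.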

One of the aims of AAL technologies is to offer intelligent, unobtrusive, and ubiquitous support to older adults. AAL technologies generally include Information and Communications Technologies (ICT), stand-alone assistive devices, and smart home technologies that help people to live and age well. Technologies span from low-tech devices to high-tech ones. There is a wide range of health-monitoring and emergency-alert devices that can help in dangerous situations and monitor vital signs, while also giving care providers easy access to the information. Cognitive stimulation, personal health management, activity monitoring and social support can be performed through the use of wearable and environmental sensors and robotic companions. As a matter of fact, to assist and support people in everyday life, different kinds of technologies have to be part of the same system, thus creating a structure able to perform several tasks, from monitoring to facilitating social interaction. AAL systems should therefore be well integrated, but at the same time modular, so that the person can decide which level of support they would like to have [2].

1.3.1 Wearable Sensors

In recent years, researchers and clinicians have focused their attention on wearable technologies, due to the possibility of long-term monitoring of persons both at home and in community settings. As a matter of fact, one great opportunity in AAL is represented by telecare, which has been defined as

"remote, automatic and passive monitoring of changes in an individual’s condition of lifestyle in order to manage the risks of independent living" [25].

The possibility of being monitored continuously and the evidence of reduced costs are considered some of the facilitators of telecare, which also makes it possible to create a Continuum of Care without caregivers always staying with the persons.

The benefits that wearable devices could bring to the healthcare sector, together with the advances in miniaturisation and cost of wearable sensors, drive the growth of the wearable sensors market, which is expected to grow from USD 189.4 million in 2015 to USD 1.65 billion by 2022 [26].

As a matter of fact, recent advances in micro-electromechanical systems (MEMS) and epidermal technologies have been important to develop body sensor networks able to monitor vital signs and movements in an unobtrusive way. Blood glucose, blood pressure, and cardiac activity, for example, can be measured through wearable sensors [16]. On the other hand, movement sensors, such as accelerometers and gyroscopes, can be used to monitor daily activities. The great interest in wearable sensors for healthcare applications is demonstrated also by the fact that companies like Apple, Samsung, and others are pushing the research in this field. For example, Samsung is developing a device, the Samsung Bio-Processor, which will make it possible to measure different bio-data³, while Apple, beyond the development of the Apple Watch, presented a patent for a wearable device capable of performing an electrocardiogram⁴.

When dealing with elderly users, it is important to consider three fundamental characteristics, i.e. ease of use (easy configuration and maintenance), coverage (no limited working area) and privacy [27]. Wearable sensors have these features. Sensors can be worn easily by the users while they carry on with their daily activities, without having to stay in a specific place or be filmed.

Using these wearable sensors, caregivers and family could monitor the person's health and motion, staying constantly connected through a smartphone, tablet, or other connected devices.

Two examples of AAL scenarios (see Figure 1.3) concerning the use of wearable sensors are presented in the following paragraphs.

Wearable sensors in daily activity recognition

A change in daily habits can be seen as a first symptom of degeneration in cognitive abilities in old people living alone. As a matter of fact, persons with dementia often forget to eat or drink or to carry out simple activities of daily living such as personal hygiene behaviours [28]. The ability to recognise daily gestures is, therefore, important to remotely monitor elderly people and check whether they are still maintaining their daily routines [29]. To make monitoring non-invasive, devices that can recognise movements can be adopted, avoiding the need for the physical presence of another person in the house.

The same devices can also be used to enable a Continuum of Care. Many persons, and in particular elderly people, may have to perform exercises at home as assessment tools or for rehabilitation purposes. The recognition of the training session, and in particular of the performed exercises, can help the therapist to monitor persons and check the accuracy of the work. In this way, after the first training sessions accomplished with the therapist, the patient could perform the activities at home while being monitored. The user could, therefore, increase the amount of time spent doing the exercises, without the need for a session with the physician [3].

³ http://www.samsung.com/semiconductor/about-us/news/24521/
⁴ https://www.apple.com/watch/compare/

Management of chronic diseases

Many old people suffer from chronic diseases, which require constant monitoring, and they can have problems in managing all the therapies they require.

The use of wearable sensors to monitor health parameters automatically and on demand of clinicians could help elderly persons and caregivers to control the health status and detect early deterioration. Moreover, thanks to continuous monitoring, physicians could suggest actions to improve the health situation, such as suggestions for meals or physical activities. The monitoring of health parameters can also help during physical activities to detect potentially dangerous conditions so that caregivers can be alerted promptly [2].

FIGURE 1.3: Example of AAL scenarios [3]

1.3.2 Service Robots

In the last few years, the use of robots in AAL has been investigated to help people carry out some tasks and maintain their independence as long as possible. Service robots ("robot that performs useful tasks for humans or equipment excluding industrial automation application" [30]) will become part of our everyday life as helpers and companions [31].

Robots could be used in public places, such as museums, supermarkets, shopping centres, and childhood education centres. Here robots can introduce exhibitions to people or help teachers to work with children. For instance, GRACE is a robot designed to go to a conference room and give a presentation, while RoboX and Rackham have been used to present exhibits to tourists [4]. On the other hand, Robovie and RUBI were designed to help people in public places: the former assists elderly persons in shops, while the latter assists early-childhood teachers in teaching numbers, colours and some basic concepts [4].

Robots can also become part of the domestic environment, providing entertainment, carrying out everyday tasks, and assisting the elderly. Robots can have different functions, including health care, companionship, entertainment and personal assistance. The global market for service robots is expected to reach USD 10.8 billion by 2020 [32], showing the growing interest in this technology.

Regarding personal assistance, assistive robots can help people with daily activities. Several types of assistive robots can be identified in the literature, spanning from rehabilitation robots to mobility aids, companion robots, manipulators, housekeeping robots and educational robots [33, 34]. ARMAR III, for example, was designed to execute some housekeeping tasks, such as opening and closing a dishwasher or picking up cups and dishware, while interacting with humans. PaPeRo, instead, can interact with the user through speech conversation, touch and face recognition, and storytelling [4]. On the other hand, the Robot-Era project designed a system to assist elderly persons at home through the cooperation of three robotic platforms, namely DoRo, Coro, and Oro. The three robots can be used alone or all together to perform more complex services. Among the possible services, the user can use the robots to order groceries and have them delivered at home, to throw away the garbage, as a walking support, and to bring objects to the person [35].

Robots will be able to perform different roles, thanks to their capabilities to act and interact safely with humans, providing an improved QoL [2]. They have to be aware of the environment around them, increasing their perception abilities to perform their tasks in the best way possible. Moreover, to perform their tasks and share the environment with people, robots have to be dependable and safe. Finally, robots should have abilities such as manipulation and navigation, both indoor and outdoor, to interact with and change the environment [2].

Domestic Robots

To let people stay longer at home, a personal service robot could support them daily. As a matter of fact, robots can be designed to perform tasks, such as cleaning, picking up objects, and carrying heavy things, that can help elderly people in daily living, while also reducing the risk of injuries.

The presence of a robot at home can also increase the sense of safety, since the robot is able to localise the user in the house and check for possibly dangerous situations. Robots can also help with leisure activities. In fact, they can facilitate access to information: knowing the main interests of the persons they stay with, they can select tailored events and news the persons could be interested in, besides reminding them of appointments. Moreover, robots could play games with the users, entertaining them and at the same time stimulating them with games that can be used as cognitive training.

Toward more natural human-robot interaction

Making the robot understand human behaviour, as occurs in human-human interaction, is one of the challenges in Human-Robot Interaction and a prerequisite for a more natural interaction. In this way, the robot could perceive the intention of the user without the person communicating directly with the robot.

Moving around the house, the robot could look at the user and be able to perceive what the user is doing, whether he is eating or drinking or talking to somebody, or simply sitting alone on the sofa becoming bored. In this way, it could approach the user in a proper manner, choosing a tailored way to get closer to the person and to interact with him/her, becoming thus more social. It could also suggest proper things to do according to the activities performed during the day and according to the mood of the persons, trying also to improve it in case the user looks sad or bored.

1.4 Human-Oriented Perception

To become part of everyday life, robots should not only be able to perform different tasks, but should also be able to interact with persons in a natural way; they should thus become social.

A social robot can be defined as:

a robot which can execute designated tasks, and the necessary condition turning a robot into a social robot is the ability to interact with humans by adhering to certain social cues and rules [4].

Human-Robot Interaction (HRI) is, therefore, the heart of social robots. Social robots should have the ability to interact with humans, understanding their social signals and cues and responding appropriately to facilitate "natural" HRI [36]. To increase this ability, the robot’s functionality, perception and acceptability should be enhanced.

Inter-personal communication is made not only of verbal cues, but also of non-verbal ones, such as facial expressions, movements, and gestures. All these cues are used to express feelings and intentions and to give feedback. A robot’s social abilities could be increased by the capacity to sense and understand all these cues and respond appropriately to the users, thereby tailoring the content of the interaction [4].

The HRI in social robots is made of three parts [4] (see Figure 1.4):

• Perception, which consists of the acquisition of the information coming from what surrounds the robot, and the analysis module, which extracts significant features and associates some meaning to them;

• Action, which refers to the responses made by the robot;

• Intermediate layer, which is a mechanism that connects perception and action, producing motor-control signals according to what has been perceived.

FIGURE 1.4: Flow-chart of HRI [4]
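The three parts of the HRI listed above can be pictured as a simple processing loop. The following is only a minimal sketch under stated assumptions: the names `Percept`, `perceive`, `decide`, `act` and `hri_step`, the feature `hand_to_mouth_count` and the toy rules are all hypothetical and are not taken from [4].

```python
from dataclasses import dataclass


@dataclass
class Percept:
    """Meaning attached to raw sensor data by the perception part."""
    activity: str      # e.g. "eating", "sitting"
    confidence: float  # 0.0 .. 1.0


def perceive(raw_features: dict) -> Percept:
    """Perception: acquire data and associate a meaning with it.

    A toy rule stands in for a real classifier (assumption).
    """
    if raw_features.get("hand_to_mouth_count", 0) >= 3:
        return Percept("eating", 0.8)
    return Percept("sitting", 0.6)


def decide(percept: Percept) -> str:
    """Intermediate layer: map what was perceived to a command."""
    if percept.confidence < 0.5:
        return "keep_observing"
    return "wait" if percept.activity == "eating" else "approach_user"


def act(command: str) -> str:
    """Action: the response made by the robot (here just a message)."""
    return f"robot executes: {command}"


def hri_step(raw_features: dict) -> str:
    """One pass through perception -> intermediate layer -> action."""
    return act(decide(perceive(raw_features)))
```

Keeping the three parts as separate functions mirrors the description above: the perception function could be replaced, for instance, by one fed with wearable sensor data, without touching the other two.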

Fong et al. [37] distinguished, among social robots, between robots which use "conventional" HRI and socially interactive robots, where the social interaction is crucial. In particular, they stated that:

"a socially interactive robot must proficiently perceive and interpret human activity and behaviour. This includes detecting and recognising gestures, monitoring and classifying activity, discerning intent and social cues, and measuring the human’s feedback" [37].

The perception part becomes, therefore, dominant in HRI. Understanding the world around the robot is crucial to let it interact correctly and take appropriate actions [4]. Robots, in fact, have to be able to understand as much as possible of the environment they are working in and of the different situations, and to track people, in order to implement a natural HRI [31]. Perceiving what the user is doing and being able to interpret his/her activities, gestures and movements is, therefore, pivotal for the robot to become part of daily life. Usually, a social robot is able to perceive four classes of signals, namely visual-based, audio-based, tactile-based, and range-sensor-based. All these signals are collected through the sensors mounted on the robot. The perception module can vary according to the final application of the robot. As a matter of fact, if the robot is developed to have physical contact with the users, detecting tactile contact is crucial, whereas recognition of the environment, person tracking, and sound detection are very important for robots that have to stay in public places, such as museums or supermarkets [4]. On the other hand, domestic robots should have strong speech interaction capabilities, together with recognition of the environment, touch, and gestures and movements, according to the tasks they are designed to perform.

The application scenario therefore heavily influences the choice of sensors and of the features to be extracted. In any case, it is important that the whole perception module is stable, fast and accurate.

According to the state of the art, several perception abilities can be implemented in social robots [4]. In particular, among others, human detection and tracking, face detection and expression recognition, sound localisation, speech recognition, gesture and movement recognition, and emotion recognition can be implemented to increase the interaction abilities of robots. Among these capabilities, face detection and expression recognition require the robot to stay in front of the user, keeping the user’s face in its field of view. Despite the valuable information that can come from the recognition of facial expressions, this kind of interaction between human and robot can be quite restrictive in terms of the relative position between robot and user. Speech recognition overcomes the issue related to the position of the robot with respect to the person but can suffer in noisy places, where the surrounding noise can cover the user’s voice, making it more difficult for the robot to understand what the person is saying unless a wearable microphone is used. On the other hand, to recognise gestures and movements, the robot has to be close to the user (depending on the sensors used to recognise these features) but it does not have to stay perfectly in front of him/her. Depending on the sensors used to recognise the movements, this perception ability can be influenced by the surrounding conditions, but the use of external sensors can help to overcome this issue, together with the issue related to the proximity of the robot to the user. Moreover, being able to recognise gestures and movements will also help the robot to understand non-verbal cues linked to the movement of body parts. These signals can be used by the robot to interact more naturally with persons, as in human-human interaction [4].

The perception abilities of the robot can also be used to observe and monitor human daily behaviours, so as to merge the interactive and the assistive abilities of the robot. Understanding human movements, activities, behaviour, and body language is really important in AAL applications, since it makes it possible to use technological solutions for prevention or monitoring and can also help communication between the users and the technological devices.

Being able to interpret what the user is doing enables the robot to monitor him/her and, at the same time, choose the best way of interacting. For instance, if a person is speaking or eating with other people, the robot could choose whether or not to interrupt him/her, according to the urgency of the communication. If the person is instead cooking or eating alone, it could approach the user, suggesting recipes or entertaining him/her. On the other hand, on understanding that the user has been sitting on the sofa for a long time, the robot could go to the user and suggest doing something different, maybe some physical activity or some games.
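The examples in the previous paragraph can be read as a small set of rules that map a recognised situation to a robot behaviour. The sketch below is purely illustrative: the function name `choose_interaction`, the activity labels, the returned behaviour names and the two-hour sitting threshold are assumptions made here and are not part of the system described in this work.

```python
# Assumed threshold for "a long time" on the sofa (not from the text).
SITTING_LIMIT_MIN = 120.0


def choose_interaction(activity: str, with_others: bool,
                       urgent: bool, minutes_sitting: float = 0.0) -> str:
    """Return a hypothetical robot behaviour for the recognised situation."""
    if with_others and activity in ("speaking", "eating"):
        # Interrupt a social situation only for urgent communications.
        return "interrupt" if urgent else "wait"
    if activity in ("cooking", "eating"):
        # The user is alone: approach with recipes or entertainment.
        return "approach_and_suggest"
    if activity == "sitting" and minutes_sitting > SITTING_LIMIT_MIN:
        # Prolonged inactivity: propose physical activity or a game.
        return "suggest_physical_activity_or_game"
    return "keep_monitoring"
```

For example, `choose_interaction("eating", with_others=True, urgent=False)` returns `"wait"`, whereas the same call with `urgent=True` returns `"interrupt"`.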

Robots could therefore help to maintain a healthy lifestyle by monitoring daily behaviour and suggesting a healthy way of living [2]. In particular, robots could interact properly with the user, personalising the interaction and suggesting tailored hints to improve the person’s lifestyle, and could identify the behavioural changes typical of elderly persons showing cognitive disorders [28].

The recognition of human movements and gestures can, therefore, help the robot to recognise activities and daily behaviour, enabling it to increase its assistive and interaction abilities.

To increase the perception abilities of robots, sensors that are not strictly part of the robot itself can be used. The use of external sensors, such as environmental sensors and wearable sensors, and other external resources can be considered to extend the perception module of the robot. In [38], for instance, two external sensors, namely a depth camera mounted in front of the user and a CyberGlove (see Section 2.1.1) worn by the user, are used to extract information about human movements and develop an HRI system.

Nowadays, advances in the Internet of Things [39] and cloud robotics [40] can extend social robot abilities in terms of perception of surrounding environments and computing abilities, respectively. Thanks to these advances, the robot has the possibility to collect more information from the user and, at the same time, to be aware of the context, thereby increasing its knowledge about what is around it. In this context, the robot could be aware of what the user is doing without the need to be in the same environment and could interact with him/her only when needed and when interaction is appropriate [41].

1.5 Scientific Challenges of this PhD Work

In this context, the aim of this work is to improve the recognition and analysis of human gestures and movements through wearable sensors with the purpose of increasing the perception capabilities of robots.

In particular, the proposed system is composed of inertial wearable sensors that are used alone or merged with sensors mounted on the robot to recognise daily activities or interact with the robot. This work aims, therefore, to use wearable sensors as an extension of the robotic system to enhance robot capabilities and improve its monitoring and interacting abilities in AAL scenarios.

Concerning the recognition of daily gestures, after the choice and optimisation of the sensors to be used, the advantage of extracting kinematic features not only from the wrist but also from the fingers is demonstrated by using a novel instrument based on a bracelet and rings. In particular, differently from the state of the art (see Section 3.1), a mix of hand-oriented gestures and eating modes that are very similar to each other, and therefore challenging to recognise, is chosen, and the ability of finger- and wrist-worn sensors to distinguish among them is investigated. The purpose is, therefore, to find the best combination of sensors able to recognise the different gestures, considering the trade-off between recognition ability and obtrusiveness of the system. After the best combination has been found, supervised and unsupervised machine learning algorithms are compared to evaluate the performance of the unsupervised ones, which provide the basis for implementing daily gesture recognition in real conditions, where it is not possible to have a large training set.
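The trade-off between recognition ability and obtrusiveness mentioned above can be illustrated with a simple selection rule: among the sensor configurations whose score is close to the best one, prefer the one with the fewest worn sensors. This is only a sketch under stated assumptions, not the procedure used in this work: the function `select_configuration`, the tolerance value, the use of the sensor count as a measure of obtrusiveness, and the numbers in `example_scores` are all hypothetical.

```python
def select_configuration(scores: dict, tolerance: float = 0.02):
    """Pick the least obtrusive sensor set that is close to the best score.

    scores maps a tuple of sensor names to a recognition score (0..1).
    Obtrusiveness is approximated by the number of worn sensors
    (an assumption made for this sketch).
    """
    best = max(scores.values())
    # Keep every configuration within `tolerance` of the best score.
    candidates = [cfg for cfg, s in scores.items() if best - s <= tolerance]
    # Fewest sensors first; ties broken by the higher score.
    return min(candidates, key=lambda cfg: (len(cfg), -scores[cfg]))


# Placeholder numbers for illustration only, not results from this work.
example_scores = {
    ("wrist",): 0.70,
    ("wrist", "index"): 0.89,
    ("wrist", "index", "thumb"): 0.90,
}
chosen = select_configuration(example_scores)
```

With these placeholder values, the wrist and index configuration is chosen, because adding a third sensor improves the score by less than the tolerance.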

Once the importance of having sensors on the fingers in addition to the sensor on the wrist has been shown, information from the inertial sensors is combined with data from the robotic system, i.e. the location of the user and skeleton data from a depth camera. In contrast to the state of the art (see Section 4.1), in this case acquisitions were made using a moving robot and from two relative positions between the robot and the user, thus considering the limitations of vision sensors linked to occlusion and the noise added by the movement of the robot. Thanks to the merging of the technologies, the perception abilities of the robot are increased by the information given by the wearable sensors. Beyond recognising the daily activities, the system is also able to recognise the relative position between the robot and the user, so that this information can be used to tailor the approach of the robot to the user.

After demonstrating how wearable sensors can increase the perception abilities of the robot, a first implementation of the entire HRI flow-chart (see Figure 1.4) is presented. Also in this case, the perception module is not part of the robotic system itself, but uses information coming from sensors placed on the user. In particular, gait parameters evaluated from the sensors placed on the feet are used to control the action made by the robot. The feasibility, performance, and perceived usability are investigated in a real application where wearable sensors are used to enhance the information that the robot can perceive from the user. This work aims to show the possibility of creating a more natural interaction between the robot and the user, controlling the robot navigation without the need for physical linkers and with no restriction on the relative position between the human and the robot.
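As a rough illustration of how a gait parameter could drive a robot action, the sketch below computes the cadence from step timestamps and maps it to a bounded speed command. It is a hypothetical example: the choice of cadence as the gait parameter, the function names, the linear mapping and the values of `gain` and `v_max` are assumptions, not the method implemented in this work.

```python
def cadence_steps_per_min(step_times_s: list) -> float:
    """Cadence from the timestamps (in seconds) of detected steps."""
    if len(step_times_s) < 2:
        return 0.0
    duration = step_times_s[-1] - step_times_s[0]
    if duration <= 0:
        return 0.0
    # Number of step intervals divided by the elapsed time, per minute.
    return 60.0 * (len(step_times_s) - 1) / duration


def velocity_command(cadence: float, gain: float = 0.005,
                     v_max: float = 0.6) -> float:
    """Map cadence to a robot linear speed in m/s, clipped to [0, v_max].

    `gain` and `v_max` are illustrative values (assumptions).
    """
    return max(0.0, min(v_max, gain * cadence))
```

Clipping the command keeps the robot speed bounded even if a step is detected spuriously, which matters when the robot shares the environment with the user.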

Through the implementation of the aforementioned analyses, it is shown how wearable sensors can increase the robot perception abilities and how the robot itself can respond appropriately to the information gained by these sensors. This is a first step in the development of a system consisting of wearable sensors and robots to assist and monitor elderly persons continuously in a more natural way.

As a matter of fact, the merging of these two technologies can not only enhance the HRI but also improve the monitoring capabilities of both. The use of wearable sensors allows the user to be monitored even when the robot is not in the same room, whereas the use of both can improve the recognition capabilities.

Adopting a robot to assist and monitor persons is very interesting because a robot can stimulate the user to maintain a healthy lifestyle. Understanding the daily routine is important to monitor people; in particular, recognising eating and drinking activities would also help to check food habits and to prevent conditions such as obesity and eating disorders, helping individuals to maintain a healthy lifestyle and delaying physical and cognitive decline [42]. The robot could therefore be used as a monitoring tool that is also able to intervene and suggest actions to improve the lifestyle. In this way, it would be possible to help caregivers in their daily work and consequently propose a solution to the future demand for health-care services.

Moreover, the robot can tailor its behaviour and its movement according to what the user is doing, thus appearing more social and more acceptable. These features are crucial to develop and deploy good-quality and sustainable health care services that will let elderly persons have more healthy years and stay longer in their own homes, thus being more independent and postponing the transfer to long-term care facilities.

The remainder of the thesis is structured as follows: Chapter 2 describes the current state of the art of wearable sensors used in hand gesture recognition and in gait analysis. Chapter 3 presents the results of the analysis of the performance of hand wearable sensors in recognising daily gestures with supervised and unsupervised machine learning algorithms. Chapter 4 describes the results of the evaluation of the fusion of data from wearable sensors and the robot’s sensors, aiming to show how wearable sensors could increase the perception abilities of robots. Chapter 5 reports the implementation of an HRI task using sensors placed on the feet of the user to control robot navigation. Finally, Chapter 6 concludes the work.

Chapter 2

Sensing Technologies

As stated in Chapter 1, in the future AAL technologies will play an important role in providing good-quality and sustainable health-care services. Robots can be part of these services, being able to perform several physical tasks but also to monitor and interact with people. Recognition of human movements and gestures plays an important role in the monitoring task and can also become a means of interaction.

Different technologies can be used to recognise gestures and movements, but wearable sensors make it possible to capture data without privacy and coverage issues and can be designed to be easy to use and non-invasive [27].

Since the focus of this work is the recognition of human hand gestures linked to daily activities for monitoring purposes and the interaction between human and robot by means of walking parameters, this chapter gives an overview of the main technologies used in hand gesture recognition and in gait analysis, ending with the description of the sensors chosen for the work carried out during this PhD: the SensHand and the SensFoot.

2.1 Hand Gesture Recognition Technologies

To recognise gestures, human body position, configuration (angles and rotations), and movements need to be sensed [43]. Thus, the technologies related to the capture of gestures should be developed to be fully reliable and highly sensitive, with a low level of invasiveness to minimise the discomfort of the monitoring task. Different approaches can be used and, based on the sensing technology, two groups can be identified: vision-based approaches and wearable-sensor approaches [44]. These technologies vary along several dimensions, including accuracy, resolution, latency, range of motion, user comfort, and cost.

Vision-based technologies, which also include depth cameras, have been employed in gesture recognition, avoiding the discomfort linked to wearing sensors. In recent years, much attention has been given to depth sensors, such as Microsoft Kinect [45] and the Leap Motion controller, used especially for hand gesture recognition [46]. The former is a commercial product based on the emission of an IR pattern and the simultaneous capture of the image with a traditional camera, which are then coupled with an RGB camera [47]. The latter is a commercially available optical device based on three light-emitting diodes and two IR cameras.

Both these sensors have been used widely in gesture recognition. However, the use of vision-based technologies raises issues linked to the field of view, as individuals have to stay in a precise area to be targeted by the cameras. Other limitations of this kind of sensor are privacy, since not everybody would like to be permanently monitored and recorded by cameras, and illumination variations, occlusion and background change, which affect the ability of the sensors to identify the parts of the body involved in the movement to be recognised [48]. Furthermore, strictly speaking of hand gesture recognition, a vision-based system will capture only the general type of finger motion, unless a camera is pointing directly at the hand [43].

In contrast, wearable sensors overcome the limitations of obstruction and light. Being worn by the person, they receive information directly from the movement of the user, detecting also fast and subtle movements without forcing the user to stay in front of a camera or to interact with specific objects [49]. However, to use this type of sensor, the user has to wear something on the body and/or on the hand, which can be felt as cumbersome and can limit the ability to perform gestures in a natural way [43]. Nevertheless, thanks to the miniaturisation and affordability of MEMS, and in particular of Inertial Measurement Units (IMUs), the approach based on wearable sensors is gaining popularity. The combination of different types of sensors can improve the accuracy of recognition tasks, but in the case of wearable sensors it is important to always pay attention to obtrusiveness, maintaining a good trade-off between recognition performance and invasiveness [50].

Wearable sensors have experienced increasing commercialisation, and new designs have been studied to reduce invasiveness and discomfort. To increase wearability it is, in fact, important to embed sensors in objects people are already used to wearing, like jewellery [51]. Thus new customised devices can be developed to track human motion, always considering obtrusiveness [52].

Wearable devices are now coming to market with form factors that increase comfort during various daily activities. CCS Insight has updated its outlook on the future of wearable technologies, indicating that it expects 411 million smart wearable devices to be sold in 2019 and that fitness and activity trackers will account for more than 50% of the unit sales. In particular, smartwatches will account for almost half of wearables revenue in 2019 [53].

All these smart devices can provide a significant amount of information that could be used in the AAL context to support the understanding of human movements and hand gestures, without being invasive, but simply as a part of daily living. Different working principles have been studied during these years to find a good trade-off between precision, accuracy, and obtrusiveness [54].

2.1.1 Glove-based system¹

Over the past 30 years or more, researchers have been developing wearable devices, particularly glove-based systems, to recognise hand gestures. Much attention has been given to glove-based systems because of the natural fit of developing something to be worn on the hand to measure hand movement and finger bending.

A glove-based system can be defined as:

“a system composed of an array of sensors, electronics for data acquisition/processing and power supply, and a support for the sensors that can be worn on the user’s hand” [54].

The various devices can differ based on sensor technologies (i.e. piezoresistive, fiber optic, Hall effect, etc.), number of sensors per finger, sensor support (i.e. cloth or mechanical support), sensor location (i.e. hand joints, fingertip positions, etc.), and others [54].

A typical example of a glove-based system is the CyberGlove [5] (Figure 2.1a), a cloth device with 18 or 22 piezo-resistive sensors that measures the flexion and the abduction/adduction of the hand joints (the number of measurable movements increases with the number of sensors). With a sensor resolution smaller than one degree, it is considered one of the most accurate commercial systems [54]. The sensor data rate is up to 120 Hz and the battery life is limited to 2 hours [55]. It has provided good results in recognising sign language based on the biomechanical characteristics of the movement of the hand (overall accuracy of 0.75 [56] and of 0.99 [57]).

Another example of a glove-based system is the 5DT Glove [10] (Figure 2.1f), which is based on optical fiber flexor sensors. The bending of the fingers is measured indirectly from the intensity of the returned light [54]. Each finger has a sensor that measures the overall flexion of the finger with a high resolution (minimum 10-bit flexure resolution) [10]. This device is well known for its applications in virtual reality [58, 59]. Other examples of glove-based systems include an elastic fabric glove equipped with 20 Hall effect sensors distributed among hand joints (used for the quantitative assessment of the digit range of motion, with an overall error in measuring the flexion of the joints of 6.72°) [60] and a sensorized glove based on resistive sensors [61]. Carbonaro et al. [62] added textile electrodes and an inertial motion unit to the deformation sensors of the glove-based device to add emotion recognition through electrodermal activity.

Even though glove-based systems can be very accurate in measuring hand degrees of freedom, they also can be perceived as cumbersome by users because they reduce the dexterity and natural movements.

¹ Adapted from A. Moschetti†, A. Haleem Butt†, L. Fiorini, P. Dario, and F. Cavallo, “Wearable sensors for gesture analysis in smart healthcare applications,” in Human Monitoring, Smart Health and Assisted Living: Techniques and Technologies, S. Longhi, A. Monteriù, and A. Freddi, Eds. IET

2.1.2 IMU-based system

To reduce the invasiveness of the wearable sensors, different technologies have been introduced to recognise hand and finger gestures. One of the proposed solutions introduced the use of IMUs to recognise gestures. In particular, these sensors are worn on the hand to perceive the movements made by the fingers and the hand. Bui et al. [8] used a device similar to the AcceleGlove with an added sensor on the back of the hand (the accelerometers have a measurement range of ±2g) (Figure 2.1d) to recognise postures in Vietnamese Sign Language, with an overall recognition rate over 0.90. In this way, they were able to evaluate the angle between the fingers and the palm and use it for identifying the gesture made.
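To give an idea of how an angle between a finger and the palm can be estimated from two accelerometers, the sketch below computes the inclination of each segment with respect to the horizontal plane from the measured gravity vector and takes the difference. It is a simplified illustration, not the method of Bui et al. [8]: it assumes a static posture (the accelerometers measure gravity only), sensor x axes aligned with the segments, and flexion about a common horizontal axis; the function names are hypothetical.

```python
import math


def inclination_deg(ax: float, ay: float, az: float) -> float:
    """Angle between the sensor x axis and the horizontal plane, in degrees.

    Valid only when the sensor is (almost) still, so that the
    accelerometer measures gravity alone.
    """
    return math.degrees(math.atan2(ax, math.hypot(ay, az)))


def finger_palm_angle_deg(finger_acc, palm_acc) -> float:
    """Relative inclination of the finger with respect to the palm."""
    return inclination_deg(*finger_acc) - inclination_deg(*palm_acc)
```

For a palm lying flat, with a reading of (0, 0, 1) in units of g, and a finger whose x axis is vertical, with a reading of (1, 0, 0), the estimated angle is 90 degrees. During fast movements the accelerometer also measures the acceleration of the hand, so this static estimate degrades.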

Kim et al. [63] developed a device composed of three 3-axis accelerometers, worn on two fingers and on the back of the hand, to recognise gestures. With the help of these sensors, they reconstructed the kinematic chain of the hand, allowing the device to recognise simple gestures with a high recognition rate. In addition, an accelerometer and a gyroscope were used on the back of the hand by Amma et al. [64] to recognise 3-D-space handwriting gestures (reaching a word error rate of 0.11).

Lei et al. [65] implemented a wearable ring (Figure 2.1c) with an accelerometer (selected scale of ±1.5g) to recognise 12 one-stroke finger gestures, extracting temporal and frequency features and reaching an overall accuracy over 0.86. The chosen gestures were used to control different appliances in the house [7].
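As an illustration of what temporal and frequency features of an accelerometer signal can look like, the sketch below computes the mean, the standard deviation and the dominant frequency of one axis over a window of samples. The specific features, the function name `window_features` and the naive discrete Fourier transform are choices made for this example; they are not necessarily the features extracted in [65].

```python
import cmath
import math


def window_features(samples: list, fs_hz: float) -> dict:
    """Mean, standard deviation and dominant frequency of one axis.

    `samples` must be a non-empty window acquired at `fs_hz` hertz.
    """
    n = len(samples)
    mean = sum(samples) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in samples) / n)

    # Naive DFT of the mean-removed signal; fine for short windows.
    centred = [x - mean for x in samples]
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centred))
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)

    return {"mean": mean, "std": std,
            "dominant_freq_hz": best_bin * fs_hz / n}
```

In practice such features are computed for each axis and each window and then concatenated into the vector given to the classifier; for long windows a fast Fourier transform would replace the naive loop.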

Using a nine-axis inertial measurement unit (selected scale of ±8g for the accelerometer, ±2000 deg/s for the gyroscope and ±2 gauss for the magnetometer), Roshandel et al. [66] were able to recognise nine different gestures with four different classifiers with an overall accuracy of 0.98.

IMU-based systems are less accurate with respect to glove-based systems, due to the minor number of sensors. Moreover, some of these devices still use a textile sup-port that can influence the naturalness of the movements. On the other hand, they showed good results in gesture recognition problems.

2.1.3 EMG-based and hybrid systems

New sensors have been developed to read surface electromyography (sEMG), which is the recording of muscle activity detectable by electrodes placed on the skin surface. In recent years, attention has been paid to this kind of signal to recognise hand and finger gestures. Various technical aspects have been investigated to determine the optimal placement of the electrodes and to select the optimal features for the most appropriate classifier [67]. Naik et al. [67] used sEMG to recognise the flexion of the fingers, both alone and combined, obtaining good results (accuracy < 0.84). Using the same signal, Jung et al. [68] developed a new device that uses air pressure sensors and air bladders to measure the EMG signal. Using six of these sensors, they were able to recognise six hand gestures with an overall accuracy over 0.90.

FIGURE 2.1: Hand devices: (A) CyberGlove [5], (B) Android Smartwatch [6], (C) Inertial Ring [7], (D) Accelerometer Glove [8], (E) sEMG Sensors [9], (F) 5DT Glove [10].

To increase the number and the kind of gestures to be recognised, different sensors are often combined. Frequently, EMG sensors are combined with inertial sensors worn on the forearm to increase the amount of information obtained by the device. Wolf et al. [69] presented the BioSleeve, a device made of sEMG sensors and an IMU to be worn on the forearm. By coupling these two types of sensors, the authors were able to recognise, with high accuracy, 16 hand gestures, corresponding to various finger and wrist positions, that were then used to command robotic platforms. Lu et al. [9] used a similar combination of sensors (Figure 2.1e) to recognise 19 predefined gestures to control a mobile phone, achieving an average accuracy of 0.95 in user-dependent testing. Georgie et al. [70] fused the signals of an IMU worn on the wrist with those of EMG sensors on the forearm to recognise a total of 12 hand and finger gestures with a recognition rate of 0.74.

Sensors based on sEMG can detect finger and hand flexion but, unless an IMU is added, they provide no information about the orientation of the hand and the forearm. Moreover, except for flexion, they give no other information about the fingers.
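One simple way to combine the two modalities is feature-level fusion, where features computed separately on the EMG and on the inertial signals are concatenated into a single vector for the classifier. The sketch below assumes this scheme, with RMS amplitude per EMG channel and mean and standard deviation per IMU axis; the cited works may fuse their signals differently, and all names and sample values are hypothetical.

```python
import math
import statistics

def rms(values):
    """Root mean square amplitude of one EMG channel window."""
    return math.sqrt(sum(v * v for v in values) / len(values))

def fused_feature_vector(emg_channels, imu_axes):
    """Concatenate per-channel EMG RMS with per-axis IMU mean and std."""
    emg_part = [rms(channel) for channel in emg_channels]
    imu_part = []
    for axis in imu_axes:
        imu_part.extend([statistics.fmean(axis), statistics.pstdev(axis)])
    return emg_part + imu_part

# Hypothetical window: two EMG channels and one accelerometer axis (in g).
emg_window = [[1.0, -1.0, 1.0, -1.0], [0.0, 0.0, 0.0, 0.0]]
imu_window = [[1.0, 1.0, 1.0, 1.0]]
features = fused_feature_vector(emg_window, imu_window)
```

The resulting vector has one entry per EMG channel followed by two entries per IMU axis, so the classifier sees muscle activity and limb orientation side by side.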

2.1.4 Smartwatch

Smartwatches are commercial wearable devices capable of measuring physiological parameters, such as heart rate variability and temperature, and of estimating the movement of the wrist by means of three-axis accelerometers. These devices allow continuous monitoring of the user’s daily activities. The acquired information can be analysed and used for different purposes, such as wellness, safety, gesture recognition, and the detection of activity/inactivity periods [71]. Today, many different Smartwatches are available on the market; Apple Watch, Samsung Gear S3, Huawei Watch, and Polar M600 are some examples.

Because of the Smartwatch’s sensor functionality, the device enables new and innovative applications that expand the original application field. Since 2001 [72], researchers have been paying attention to the movement of the wrist to estimate hand gestures. Now, Smartwatches offer a concrete possibility to exploit the recognition of gestures in real applications. For instance, Wile et al. [73] used Smartwatches (with a ±8g three-axis accelerometer) to identify and monitor the postural tremor of Parkinson’s disease in 41 subjects. The results suggest that the Smartwatch can measure the tremor with good correlation with respect to other devices. In other research, Liu et al. [74] fused the information of an inertial sensor placed on the wrist with a Kinect camera to identify different gestures (agree, question mark, shake hand) to improve the interaction with a companion robot, reaching an overall recognition rate of 0.91. Ahanathapillai et al. [6] used an Android Smartwatch with a ±2g three-axis accelerometer (Figure 2.1b) to recognise common daily activities such as walking and ascending/descending stairs. The parameters extracted in this work include activity level and step count. The results confirm good recognition of all the selected features.

Smartwatches can therefore provide information about gestures and activities, but this information is limited to the wrist. They are easy to wear and not invasive, but they give no information about the fingers.
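A step count can be estimated from a wrist accelerometer in several ways. The sketch below shows one simple possibility, counting upward threshold crossings of the acceleration magnitude with a minimum gap between steps; the threshold, the gap and the function name `count_steps` are illustrative assumptions and do not describe the method used in [6].

```python
import math

def count_steps(samples_g, threshold_g=1.2, min_gap=10):
    """Count upward crossings of threshold_g in the acceleration magnitude.

    samples_g: sequence of (x, y, z) readings in g.
    min_gap: minimum number of samples between two counted steps.
    """
    steps = 0
    last_step = -min_gap  # allows a step at the very first sample
    above = False
    for i, (x, y, z) in enumerate(samples_g):
        magnitude = math.sqrt(x * x + y * y + z * z)
        is_above = magnitude > threshold_g
        # A step is a rising edge that is far enough from the previous one.
        if is_above and not above and i - last_step >= min_gap:
            steps += 1
            last_step = i
        above = is_above
    return steps

# Synthetic trace: wrist at rest (1 g) with three impact peaks of 1.5 g.
trace = [(0.0, 0.0, 1.0)] * 60
for peak_index in (5, 25, 45):
    trace[peak_index] = (0.0, 0.0, 1.5)
n_steps = count_steps(trace)
```

The minimum gap suppresses double counting when a single impact produces several closely spaced peaks; both parameters would need tuning for a real device and sampling rate.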

A summary of the presented technologies is reported in Table 2.1.

| Referenced work | Technology adopted | Advantages | Disadvantages |
|---|---|---|---|
| CyberGlove [5] | 18 or 22 piezo-resistive sensors | Sensors for flexion/extension and abduction/adduction; High accuracy | Often re-calibration required; Very expensive |
| 5DT Glove [10] | Optical fiber flexor sensors | Flexion/extension and abduction/adduction | Calibration required for new users; Expensive |
| Human-glove [60] | Hall-effect sensors | Flexion/extension and abduction/adduction | Difficult recording from some joints; Calibration required for new users |
| Sensorized glove [61] | Resistive flex sensors | Low-power; Low-cost; Wireless | Cumbersome |
| Carbonaro et al. [62] | Textile electrodes, deformation sensors and IMU | Recognition of gestures and electrodermal activity for emotion recognition | Glove based; Emotion recognition still theoretical |
| Bui et al. [8] | 6 two-axis accelerometers | No calibration required; Low cost | Lack of information on one axis |
| Kim et al. [63] | 3 three-axis accelerometers | Cheap and simple prototype | Need a textile-based support; Cumbersome on the wrist |
| Amma et al. [64] | 3D accelerometer and gyroscope | Large vocabulary recognised | Need a textile-based support; One sensor on the hand |
| Lei et al. [65] | One accelerometer on the finger | Good for simple gestures; Used for remote control | Quite cumbersome; Limited to one finger |
| Roshandel et al. [66] | Nine-axis IMU | Small finger ring; Good in recognition of simple gestures | |
| Naik et al. [67] | sEMG | Hand free from sensors; Good recognition of finger flexion | Limited information about fingers |
| Jung et al. [68] | Air-pressure sensors and air bladders to recognise muscular activity | Hand free from sensors | Limited information about fingers; Can be cumbersome |
| BioSleeve [69] | IMU plus sEMG | One band on the forearm; Information about the forearm given by IMU | Limited information about fingers |
| Lu et al. [9] | IMU plus sEMG | Lightweight; Small; Hand free from sensors | Limited information about fingers |
| Georgie et al. [70] | IMU plus sEMG | Hand free from sensors; Good recognition of selected gestures | Limited information about fingers; sEMG sensors and IMU placed in different positions |
| Ahanathapillai et al. [6] | IMU | Easy to wear; Unobtrusive | No information about finger movements |
| SensHand | IMUs | Lightweight; Good accuracy; Modular; Cheap | Current version has wired connection between finger units and wrist unit |

TABLE 2.1: Summary of technologies used in hand gesture recognition in terms of technology adopted, advantages and disadvantages

2.2 The SensHand

The SensHand is a device developed by Cavallo et al. [75] [76] in 2013. It is composed of four nine-axis inertial sensor units to be worn on the hand as rings and a bracelet, and therefore belongs to the IMU-based category. In particular, three modules are designed to be worn on three fingers (typically thumb, index, and middle finger), and one module is designed to be worn on the wrist.

FIGURE 2.2: Different versions of the SensHand

The SensHand has gone through different improvements over the years (see Figure 2.2). The latest version is made of four inertial sensors integrated into four INEMO-M1 boards with dedicated STM32F103xE family microcontrollers (ARM 32-bit Cortex™-M3 CPU, from ST Microelectronics, Milan, Italy). Each module includes an LSM303DLHC (6-axis geomagnetic module, dynamically user-selectable full-scale acceleration range of ±2 g/±4 g/±8 g/±16 g and a magnetic field full-scale of ±1.3 / ±1.9 / ±2.5