Summary

CHAPTER 1 1. Anatomy and function of the inner ear

1.1 Epidemiology of sensorineural hearing loss

1.2 Aetiology of hearing loss

CHAPTER 2 2. Cochlear implant

2.1 Cochlear implant characteristics

2.2 How cochlear implants encode speech

2.3 Strategies

2.4 Surgical procedures

CHAPTER 3 3.1 Assessment for cochlear implantation in adult patients

3.2 Cochlear implant audiological indications in adults and review of the literature

3.3 Review of the literature of cochlear implant's prognostic factors in adult patients

3.4 Electrophysiological study post cochlear implantation in adult patients

3.4.1 Spread of excitation

3.4.2 Neural response telemetry

CHAPTER 4 4. Aims of the study

4.1 Materials and methods

4.2 Preliminary results - Statistical report

4.3 Discussion

4.4 Conclusion

References

Abbreviation List

Figures

Key words: cochlear implant, hearing loss, telemetry, neural response telemetry, spread of excitation, objective measures, audiological outcomes, prognostic factors

Summary

Cochlear implants (CI) provide the opportunity for post-lingually deafened adults to regain a part of their auditory communicative skills. Despite significant improvements in average performance there remains a considerable amount of unpredictable inter-subject variability. Most previous studies seeking to predict post-operative speech understanding have been handicapped by heterogeneous patient populations with variable devices, speech processing strategies, speech discrimination tests or clinical rehabilitation programs. The use of varying clinical programs, particularly different devices, can influence the usefulness of performance predictors. Electronics and electrode designs have evolved over time, leading to improved speech perception for the whole group of CI recipients.

Another possible explanation for the wide variability in performance after implantation could be differences in peripheral nerve excitability. This excitability could be estimated by measuring the width of spread of excitation profiles obtained through neural response telemetry.

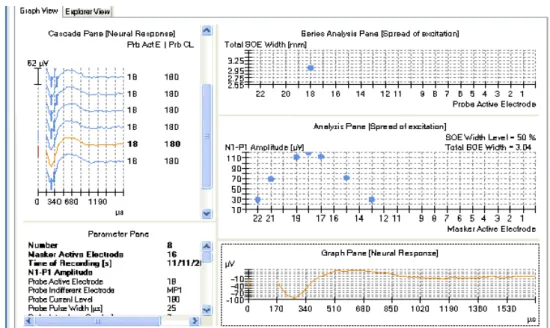

The study group was made up of 20 adult subjects who underwent CI surgery by the same otosurgeon and were rehabilitated by the same speech therapist at the Pisa CI centre between 2000 and 2011. All patients received a Cochlear® perimodiolar electrode array, which has 22 electrode contacts directed towards the inner wall of the scala tympani. The only variation was in the surgical insertion procedure: the advance off-stylet or the in-stylet technique. One aim of this study was to compare electrophysiological data with audiological outcomes in an adult CI population, in order to find a method of predicting future audiological outcomes from the first programme mapping. To investigate the dependence between excitability and patient benefit (described as speech understanding results), neural response telemetry and spread of excitation width were measured on all the odd-numbered electrodes in every patient, and each patient was evaluated with logopedic tests using the new MAP, created on the basis of behavioural cues. All these data were compared with audiological outcomes. A further goal was to find a correlation between neural response telemetry and width of spread of excitation on the 11 electrodes measured out of 22.

Spread of excitation (SOE) is not commonly measured in the follow-up of a CI population, but it could be of great importance in difficult cases, such as non-collaborative patients, and in the study of patients who do not achieve the expected results. In this context we tried to demonstrate statistically that audiological performance declines as SOE width increases. Finally, we compared all patient MAPs with patient performance in order to find a correlation between MAP modifications and audiological outcomes.

CHAPTER 1

1. Anatomy and function of the inner ear

The inner ear is a sensory organ responsible for the senses of hearing (cochlea), balance (vestibule) and detection of acceleration in vertebrates (semicircular canals).

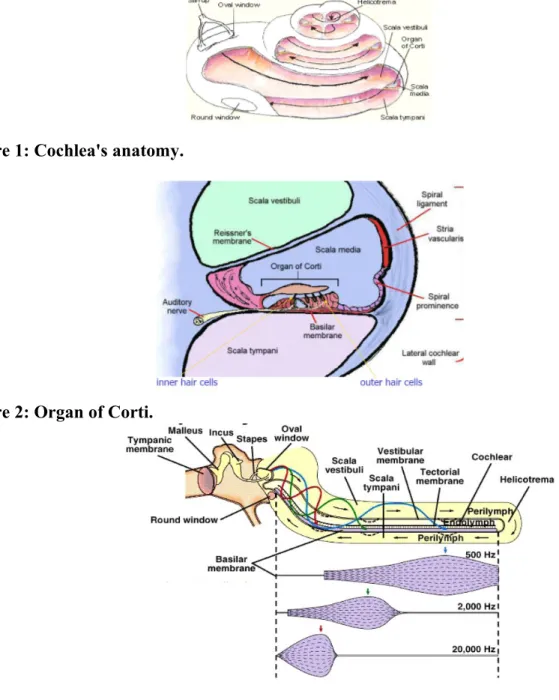

The cochlea is a bony tube coiled around a central axis, the modiolus, forming a spiral of two and a half turns in humans. The tube has a total length of 30 mm; its internal diameter tapers from 2 mm at the window level to about 1 mm at the apical end. The overall size of the coiled cochlea also decreases from the basal turn to the apical one. The cochlea is divided by the anterior membranous labyrinth into three compartments or scalae: the scala vestibuli, scala tympani and scala media (Figure 1).

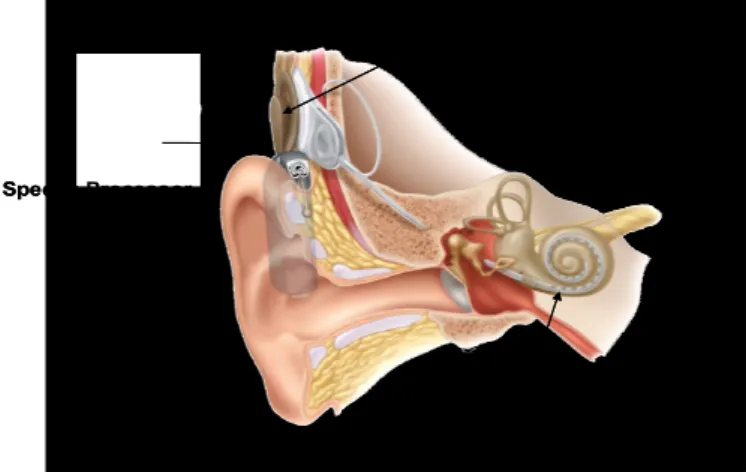

The scala media is bounded by the osseous spiral lamina projecting from the modiolus and prolonged externally by the basilar membrane, by Reissner's membrane and by the lateral wall. Its cross-section is thus approximately triangular. The basilar membrane, extending from the spiral lamina to the spiral ligament, supports the organ of Corti (Figure 2) containing the sensory cells. The lateral wall is lined by the spiral ligament and the heavily vascularized stria vascularis.

The scala vestibuli lies above the scala media and is bounded by Reissner's membrane. The oval window (fenestra vestibuli), closed by the stapes footplate and annular ligament, opens into the vestibule, which communicates with the scala vestibuli. Therefore, stapes movements directly result in pressure variations in the scala vestibuli.

The scala tympani lies below the scala media and is bounded by the basilar membrane. The round window opens into the scala tympani at the beginning of the basal turn. It is separated from the gaseous middle ear cavity by the round window membrane. On the apical side of the cochlea, since the scala media is shorter than the cochlear tube, a small opening called the helicotrema connects the scala vestibuli and scala tympani (Figure 2).

The scala media is filled with a few microlitres of endolymph, formed by the stria vascularis, which has a high K+ concentration of about 150 mM and a low Na+ concentration (1 mM). Owing to the function of the stria vascularis, a large positive endocochlear potential can be measured in the cochlea: 80-90 mV. The scalae vestibuli and tympani contain perilymph, an extracellular-like fluid with a low K+ concentration (4 mM) and a high Na+ concentration (140 mM). As these two scalae are connected through the helicotrema, there is little difference between their ionic contents. Resting potential in perilymph is 0 mV.

The organ of Corti, first described thoroughly by Alfonso Corti in 1851, rests on the basilar membrane and osseous spiral lamina (Figure 2). The basilar membrane is made up of fibres embedded in an amorphous extracellular substance. Two zones are distinguished: the pars tecta or arcuate zone, extending from the spiral lamina, and the pars pectinata or pectinate zone, reaching the spiral ligament. The structure of the basilar membrane is responsible for the passive resonant properties of the cochlea. The mechanical properties of the basilar membrane, and particularly its stiffness, vary gradually from base to apex and, as a result, its resonance frequency decreases as the distance from the round window increases, at a rate of about one octave every 3 mm. This progressive decrease is mainly due to two geometrical factors: in a basoapical direction, the width of the membrane from the osseous spiral lamina to the spiral ligament increases from about 0.12 mm at the base to about 0.5 mm at the apex, while its thickness decreases by a similar amount.
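The gradient of about one octave every 3 mm can be turned into a rough place-to-frequency estimate. The sketch below is only illustrative: the 20 kHz resonance at the basal end is an assumed round number that does not come from this text, the function name is hypothetical, and the real cochlear map is not perfectly exponential.

```python
# Rough place-to-frequency sketch based on "about one octave every 3 mm".
# BASE_FREQUENCY_HZ is an assumed illustrative value, not taken from the text.
BASE_FREQUENCY_HZ = 20000.0   # assumed resonance frequency at the basal end
MM_PER_OCTAVE = 3.0           # gradient quoted in the text
COCHLEA_LENGTH_MM = 30.0      # total tube length quoted in the text


def resonance_frequency_hz(distance_mm: float) -> float:
    """Estimate the resonance frequency at a given distance from the base."""
    if not 0.0 <= distance_mm <= COCHLEA_LENGTH_MM:
        raise ValueError("distance must lie within the cochlear length")
    octaves_down = distance_mm / MM_PER_OCTAVE
    return BASE_FREQUENCY_HZ / (2.0 ** octaves_down)


if __name__ == "__main__":
    for d in (0.0, 3.0, 15.0, 30.0):
        print(f"{d:5.1f} mm -> {resonance_frequency_hz(d):9.1f} Hz")
```

Under these assumptions the 30 mm tube spans ten octaves, which is roughly the extent of the human audible range.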

The major components of the organ of Corti are the inner and outer hair cells (Figure 3), resting on the basilar membrane and surrounded by supporting cells (Deiters' cells, pillar cells, Hensen cells and Claudius cells). The tops of the sensory cells bathe in endolymph and are covered by a flap of gelatinous substance called the tectorial membrane (Figure 3). The apical parts of the inner and outer hair cells and the supporting cells form the reticular lamina. Its cell junctions are tight, and thus the reticular lamina acts as an ionic barrier between the endolymph and the perilymph. Conversely, perilymph can diffuse through the basilar membrane. Thus a perilymph-like fluid bathes the cell bodies of sensory and supporting cells.

Aligned with the length of the cochlea from base to apex, one row of inner hair cells and about three parallel rows of outer hair cells can be found. The overall number of inner hair cells is around 3500 in humans, whereas about 12000 outer hair cells are found. Both types of cells have apical stereocilia bundles. Rather than being true cilia made of tubulin, the stereocilia are microvilli made of actin filaments inserted into the cuticular plate. They vary in height, particularly those of outer hair cells, as a function of distance from the oval window. Tip and lateral links connect neighbouring stereocilia. The stereocilia are aligned in about four V- or W-shaped rows, with the tallest stereocilia on the outer, lateral wall side of the cells. In mammals, the tallest stereocilia of the outer hair cells are strongly embedded in the tectorial membrane, whereas the stereocilia of inner hair cells do not seem to touch the tectorial membrane. Instead, their tips are very close to Hensen's stripe, forming a groove along the lower surface of the tectorial membrane.

Sound stimuli originate in the surrounding environment and are transmitted to the inner ear through the external and middle ear. Specialized structures transfer sound waves from the external air to the fluids of the inner ear, producing a mechanical vibration of the endolymphatic fluid that stimulates the auditory hair cells and generates electrical activity. These messages are transmitted as action potentials by the auditory nerve fibers towards more central parts of the brain (Figure 3).

Owing to the piston-like action of the stapes footplate in the oval window, a differential pressure occurs between the scala vestibuli and scala tympani. It is thought that Reissner's membrane fully transmits pressure waves from the scala vestibuli to the scala media, so that the differential pressure is actually applied to the two sides of the basilar membrane and induces vibrations at the level of the organ of Corti. Stereocilia bundles are deflected by two different mechanisms: shearing for the outer hair cells, due to the movement of the tectorial membrane relative to the reticular lamina, and movements of subtectorial endolymph acting on inner hair cell stereocilia through viscous forces. Deflection of stereocilia by sound waves alternately opens and closes ion channels, presumably at or near the tip links. These tip links are therefore believed to be of great functional importance (Pickles JO et al. 1984).

As a result of the strong electrochemical gradient existing between the endolymph (+80 mV) and the intracellular space (-40 to -70 mV), K+ ions flow into the sensory cells and depolarize them. This depolarization triggers the release of glutamate by the inner hair cells, activating the afferent nerve fibers. Outer hair cells exhibit electromotility, which contributes to shaping the mechanical excitation of inner hair cells (Figure 4).
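The size of the electrical part of this gradient follows from subtracting the potentials quoted above. The short sketch below only performs that arithmetic; the function name is hypothetical, and the K+ concentration term of the full electrochemical driving force is deliberately left out.

```python
# Electrical potential difference across the apical membrane of a hair cell,
# using only the values quoted in the text. The chemical (concentration)
# contribution to the driving force is not modelled here.
ENDOLYMPH_POTENTIAL_MV = 80.0            # endocochlear potential
INTRACELLULAR_RANGE_MV = (-70.0, -40.0)  # intracellular potential range


def apical_potential_difference_mv(intracellular_mv: float) -> float:
    """Endolymph potential minus intracellular potential, in millivolts."""
    return ENDOLYMPH_POTENTIAL_MV - intracellular_mv


if __name__ == "__main__":
    for v in INTRACELLULAR_RANGE_MV:
        diff = apical_potential_difference_mv(v)
        print(f"inside {v:6.1f} mV -> difference {diff:6.1f} mV")
```

With these figures the potential difference lies between 120 and 150 mV.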

Hair cells are innervated by sensory neurons that project towards the auditory nuclei in the brainstem. The spiral ganglion contains about 25000 cell bodies of afferent auditory nerve fibers. Their dendrites come from the base of the hair cells, through small holes distributed along the osseous spiral lamina (Figure 5). About 95% come from inner hair cells and are called type I neurons. These neurons have a large diameter and are covered with a myelin sheath, enabling fast conduction of action potentials towards the first relay, in the cochlear nucleus. Inner hair cells have a diverging innervation: about 10 or more type I fibers connect to one inner hair cell. The remaining 5% of nerve fibers innervate outer hair cells. Outer hair cells are divergently innervated by type II neurons, whose role remains unknown to date.

The auditory nerve contains about 2000 efferent fibers, originating from the superior olivary complexes on both sides of the brainstem. Two neural bundles reach the cochlear area: one, from the medial superior olive, synapses with the base of outer hair cells, whereas the other comes from the lateral olivary complex and projects onto the afferent dendrites coming from inner hair cells. The medial olivocochlear neurons have large, mostly cholinergic, synapses with the outer hair cells, and presumably modulate their motility. The lateral olivocochlear neurons involve more complex neurotransmission. They probably regulate the function of type I afferent neurons and may also play a role when these neurons are damaged (Luxon L et al. Audiological medicine 2003).

Sound-wave frequency discrimination is based on the position of the hair cells along the longitudinal cochlear axis, which is correlated with the position of the sensory neurons in the cochlear ganglion. This tonotopical order is conserved in the central auditory nuclei, where sensory neurons project, reproducing the hair-cell order in the cochlea (Figure 6). Thus, in addition to cell diversification, spatial patterning is an essential requirement for the correct function of the inner ear.

In most cases, a patient with severe to profound hearing loss has lost most of the hair cells in the inner ear. Such patients can benefit from the multiple-channel CI.

1.1 Epidemiology of sensorineural hearing loss

Hearing loss is a common disorder generally associated with increasing age. Its frequency varies from 0.2% under 5 years of age to over 40% in persons older than 75 years. The incidence of congenital severe to profound hearing loss is about 1-3 per 1,000 newborns. In Italy it has recently been estimated that about 8 million people have hearing loss of varying degree. The prevalence of severe to profound deafness is about 59 cases per 100,000 children (Davis A et al. 1997). About one in 1,000 children is severely or profoundly deaf at three years old. This rises to two in 1,000 children aged 9-16 (Fortnum HM et al. 2001).

Hearing loss is one of the most common disabilities in the adult population of industrial nations, with significant social and psychological implications. Approximately 12% to 17% of American adults (roughly 40 million people) report some degree of hearing loss (Statistics by the National Institute on Deafness and Other Communication Disorders, 2010, http://www.nidcd.nih.gov/health/statistics/Pages/quick.aspx), and this number can be expected to increase as a result of increased life expectancy.

In the UK around 3% of those over 50 and 8% of those over 70 have severe to profound hearing loss. People with severe to profound hearing loss make up around 8% of the adult deaf population (Royal National Institute for the Deaf. Information and resources. 2006 URL:http://www.mid.org.uk/information_resources/).

This number is likely to rise with the increasingly elderly population. In those over 60 years old the prevalence of hearing impairment is higher in men than in women, 55% and 45% respectively for all degrees of deafness (Abutan B et al. 1993).

Hearing loss is often associated with other health problems; Fortnum and colleagues found that 27% of children who were deaf had additional disabilities. In total, 7581 disabilities were reported in 4709 children (Fortnum HM et al. 2002). However, this may be an underestimate, as ‘no disability’ and ‘missing data’ responses were not distinguished.

Additionally, 45% of severely or profoundly deaf people under 60 years old have other disabilities, usually physical; this rises to 77% of those over 60 years (Royal National Institute for the Deaf. Information and resources. 2006 URL:http://www.mid.org.uk/information_resources/).

1.2 Aetiology of hearing loss

The prevalence of hearing disorders, combined with the lack of recovery, makes the treatment of SNHL a major challenge in otology and audiology. In most cases of SNHL, the primary pathology is the loss of mechanosensory hair cells located within the organ of Corti. The survival or death of a hair cell can be influenced by exposure to a variety of factors, including loud sounds, environmental toxins and ototoxic drugs. Aging, genetic background, and mutations in “deafness” genes also contribute to hair cell death. In mammals, hair cells are only generated during a relatively brief period in embryogenesis. In the mature organ of Corti, once a hair cell dies, it is not replaced. Instead, the loss of a hair cell leads to rapid changes in the morphology of surrounding cells, which seal the resulting opening in the reticular lamina (Lenoir M et al. 1999; Raphael Y 2002).

SNHL in the adult population is commonly caused by age-related hearing loss, or presbyacusis (Abutan BB et al. 1993). Presbyacusis is a complex disorder, influenced by genetic, environmental/lifestyle and stochastic factors. Approximately 13% of those over age 65 show advanced signs of presbyacusis. By the middle of the 21st century, the number of people with hearing impairment will have increased by 80%, partly due to an aging population and partly to the increase of social, military, and industrial noise (Lee FS et al. 2005).

According to Rosenhall (1993), presbyacusis is a biologic phenomenon from which no one can escape, starting at 20-30 years of age and becoming socially bothersome when the person reaches 40-50 years. Early diagnosis and intervention in presbyacusis are paramount in order to provide the elderly with a good quality of life.

Even though every individual shows a steady decline in hearing ability with ageing, there is a large variation in age of onset, severity of hearing loss and progression of disease, which results in a wide spectrum of pure-tone threshold patterns and word discrimination scores. Presbyacusis has long been considered an incurable and unpreventable disorder, thought to be part of the natural process of ageing, but nowadays it is recognized as a complex disorder, with both environmental and genetic factors contributing to the etiology of the disease. It is a progressive hearing loss due to the failure of hair-cell receptors in the inner ear, in which the highest frequencies are affected first. Hearing loss may also be due to noise exposure, ototoxic drugs, metabolic disorders, infections, or genetic causes (Weinstein BE 1989).

The second leading cause of adult sensorineural hearing loss (SNHL) in the United States is noise-induced hearing loss. The usual presentation of noise-induced hearing loss is high-frequency sloping SNHL with high-pitched tinnitus and loss of speech discrimination. For broad-spectrum industrial noise, hearing loss is greatest at 3-6 kHz. The mechanism of noise-induced hearing loss appears to be a softening, fusion and eventual loss of stereocilia in the outer hair cells of frequency-specific regions of the cochlea. Acoustic trauma in the form of intense short-duration sound may cause a permanent hearing loss by tearing cochlear membranes, thereby allowing endolymph and perilymph to mix.

Infectious causes of hearing loss in adults include viruses, syphilis, chronic and acute otitis media, Lyme disease, and bacterial meningitis. Viral infections are common causes of SNHL in children. In adults, herpes zoster oticus is a well-known cause of SNHL. Other viruses, such as human spumaretrovirus and adenovirus, have been implicated in selected cases of sudden SNHL. Chronic otitis media may lead to progressive SNHL, either by tympanogenic suppurative labyrinthitis or by labyrinthine fistula. Acute otitis media may also lead to SNHL, but the etiology is unclear. Bacterial meningitis causes hearing loss in about 20% of cases, most commonly bilateral, permanent, severe to profound SNHL. Haemophilus influenzae, Neisseria meningitidis and Streptococcus pneumoniae are the most common pathogens in children. The hearing loss associated with bacterial meningitis occurs early in the course of the disease, and appears to be due to the penetration of bacteria and toxins along the cochlear aqueduct or internal auditory canal, leading to a suppurative labyrinthitis, perineuritis, or neuritis of the eighth nerve. Thrombophlebitis or emboli of the small labyrinthine vessels and hypoxia of the neural pathways may also contribute to the hearing loss. Antibiotics may contribute to hearing loss indirectly by causing a rapid accumulation of bacterial degradation products such as endotoxins and cell wall antigens. The evoked host inflammatory response is thought to worsen destruction of normal tissue. Support for this theory comes from corticosteroid use during bacterial meningitis, which dampens the inflammatory response and has been shown to improve post-meningitis hearing.

Ototoxic medications include antibiotics, loop diuretics, chemotherapeutic agents, and anti-inflammatory medications. The cochleotoxic activity of aminoglycosides is in part due to their accumulation and prolonged half-life in perilymph, especially in patients with renal compromise. Energy-dependent incorporation of the antibiotics into hair cells occurs and results in damage to the outer hair cells, beginning in the basal turn. In the vestibular system, type I hair cells are more susceptible to aminoglycosides. Gentamicin, tobramycin, and streptomycin are primarily vestibulotoxic, while kanamycin and amikacin are primarily cochleotoxic. Ototoxicity is increased with treatment longer than 10 days, preexisting hearing loss, concomitant exposure to noise, or use of other ototoxic agents. Loop diuretics such as furosemide and ethacrynic acid, as well as erythromycin and vancomycin, may cause permanent ototoxicity, especially in combination with other ototoxic agents. Salicylates in high levels may cause reversible hearing loss and tinnitus. The mechanism is likely a salicylate-mediated increase in the membrane conductance of the outer hair cells. Cisplatin causes a permanent, dose-related, bilateral high-frequency SNHL. As with aminoglycoside usage, high-frequency audiologic testing (8-14 kHz) and careful monitoring of peak serum levels may identify early ototoxicity when using cisplatin.

SNHL caused by autoimmune disease may be associated with vertigo and facial palsy. The following autoimmune disorders may cause hearing loss: Cogan's disease, systemic lupus erythematosus, Wegener's granulomatosis, polyarteritis nodosa, relapsing polychondritis, temporal arteritis and Takayasu's disease. Histopathologic findings are quite variable. Some cases of hearing loss from autoimmune disease improve with steroids.

Hematologic disorders such as sickle cell anemia and blood viscosity disorders may lead to SNHL. Sickle cell disease likely causes thrombosis and infarction of the end vessels of the cochlea in some affected patients. Some 20% of sickle cell patients have SNHL. Similarly, blood viscosity disorders and megaloblastic anemias may induce cochlear end-vessel disease.

Both otosclerosis and Paget's disease have been associated with SNHL. However, the etiology of the hearing loss is unclear. Histopathologic specimens have failed to show consistent injury to the cochlea or neural pathways.

Endocrine disorders such as diabetes mellitus, hypothyroidism and hypoparathyroidism may be associated with hearing loss in adults. However, the etiologies of hearing loss associated with endocrine disorders are unclear, and the causal relationships have yet to be established.

In summary, the causes of SNHL are:

• Genetic hearing loss

• Prolonged exposure to loud noises

• Viral infections of the inner ear

• Viral infections of the auditory nerve

• Trauma

• Meniere's disease

• Acoustic neuroma

• Meningitis

• Encephalitis

• Multiple sclerosis

• Stroke

• Hematologic disorder

• Endocrine disorders

• Chemotherapy

• Autoimmune disease

CHAPTER 2

2. Cochlear Implant

The multiple-channel CI restores useful hearing in severely to profoundly deaf people. It bypasses the malfunctioning inner ear and provides information to the auditory centres in the brain through electrical stimulation of the auditory nerves. It has enabled tens of thousands of severely to profoundly deaf people in over 70 countries to communicate in a hearing world. The prototype speech processor and implant were developed industrially by Cochlear Pty. Limited and trialled internationally for the US Food and Drug Administration (FDA). In 1985, it was the first multiple-channel CI to be approved by the FDA as safe and effective for postlingually deaf adults. In 1990, it was the first implant to be approved by the FDA as safe and effective for children from two to eighteen years of age.

The CI has been shown to be more cost-effective than a hearing aid in severe to profound SNHL in both adult and infant patients (Turchetti G et al. 2011a; Turchetti G et al. 2011b). Furthermore, its cost per Quality Adjusted Life Year (QALY) is comparable to that of a coronary angioplasty or an implantable defibrillator.

2.1 Cochlear implant characteristics

A CI consists of an external microphone and speech processor (Figure 7), and an implanted receiver–stimulator and electrode array (Figure 8). The microphone is usually directional and placed above the ear to select sounds coming from the front, which is especially important in conversation under noisy conditions. The voltage output from the microphone passes to a speech processor worn behind the ear (in adults, Figure 7) or attached to a belt (body-worn version for children, Figure 9). The speech processor filters the speech waveform into frequency bands, and the output voltage of each filter is then modified to lie within the dynamic range required for electrically stimulating each electrode in the inner ear.
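As a rough illustration of the band-splitting step, the sketch below divides a speech frequency range into logarithmically spaced bands, one per electrode. The 200 to 8000 Hz range, the logarithmic spacing and the function name are assumptions made for illustration only; actual processors use manufacturer-specific frequency tables.

```python
# Illustrative allocation of analysis bands to electrodes.
# The frequency limits and the log spacing are assumptions, not device data.
from typing import List, Tuple


def band_edges_hz(n_bands: int, low_hz: float = 200.0,
                  high_hz: float = 8000.0) -> List[Tuple[float, float]]:
    """Return (lower, upper) edges of n_bands logarithmically spaced bands."""
    if n_bands < 1 or not 0.0 < low_hz < high_hz:
        raise ValueError("need n_bands >= 1 and 0 < low_hz < high_hz")
    # Constant frequency ratio between consecutive band edges.
    ratio = (high_hz / low_hz) ** (1.0 / n_bands)
    edges = [low_hz * ratio ** i for i in range(n_bands + 1)]
    return list(zip(edges[:-1], edges[1:]))


if __name__ == "__main__":
    # 22 bands, one per contact of a 22-electrode array; band 0 is the lowest.
    for index, (lower, upper) in enumerate(band_edges_hz(22)):
        print(f"band {index:2d}: {lower:7.1f} - {upper:7.1f} Hz")
```

Each band would then feed one electrode, with low-frequency bands assigned to apical contacts and high-frequency bands to basal contacts, in line with the tonotopic organization of the cochlea.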

A stream of data specifying the current level and electrode that represent the speech frequency bands at each instant in time, together with the power to operate the device, is transmitted by radio waves via a circular aerial through the intact skin to the receiver–stimulator (Figure 10).

The receiver–stimulator (Figure 8) is implanted in the mastoid bone. It decodes the signal and produces a pattern of electrical stimulus currents in a bundle of electrodes inserted into the scala tympani of the basal turn of the cochlea. These electrodes excite the auditory nerve fibres and subsequently the higher brain centres, where the stimuli are perceived as speech and environmental sounds.

One of the most important characteristics of a CI is the intracochlear array. Current CI devices have from 12 to 22 electrodes in the intracochlear array, which can be straight (Figure 11) or perimodiolar (Figure 12).

Perimodiolar electrodes are designed to coil during or after insertion to occupy a position closer to the modiolar wall of the cochlea, where the spiral ganglion cells reside (Figure 13). These electrodes require a different insertion technique than the straight ones, and specialized insertion tools have been created to facilitate insertions. The potential advantages of perimodiolar electrodes include: (1) more selective stimulation of spiral ganglion cell subpopulations (Figure 14); (2) less current required for each stimulus, thereby reducing the power consumption; and (3) less damage to the cochlear elements. These potential advantages may translate into better speech understanding using newer processing strategies, longer battery life and preservation of residual hearing.

2.2 How cochlear implants encode speech

CI signal processors convert acoustic vibrations into electrical stimuli to be transmitted to the auditory nerve. This role is normally served by the external and middle ear, as well as the cochlea, organ of Corti and inner hair cells. The processor must take a wideband signal with a dynamic range of greater than 100 dB and convert it into a set of parallel signals, each with a maximum “data-transfer” rate of a few thousand spikes per second, the upper limit of auditory nerve phase-locking with electrical stimulation (Dynes SB et al. 1992), and a dynamic range of ∼2 dB, the typical dynamic range for electrical stimulation of an auditory nerve fiber (Litvak LM et al. 2003). This challenging conversion from a wideband, wide dynamic range acoustic signal to multiple parallel narrowband, narrow dynamic range electric signals presented to the auditory nerve represents the performance-limiting interface between the implant and the patient’s nervous system.

In a normal cochlea, approximately 1000 inner hair cells transmit the details of basilar membrane motion via independent synapses to approximately 30,000 auditory neurons and subsequently to a normal central nervous system (Schuknecht HF 1993). An implanted ear has between 1 and 22 intracochlear electrodes with poor spatial selectivity (Cohen LT et al. 2003) activating a potentially reduced complement of auditory neurons (Incesulu A et al. 1998), which convey signals to a potentially abnormal central auditory pathway (Lee DS et al. 2001). How best to do this is a topic of considerable basic and applied interest that is under active investigation in many laboratories around the world. The task is made no easier by the fact that many basic aspects of normal hearing, for example the precise bases for loudness and pitch coding, are incompletely understood (Moore BC 2003).

Single-channel CIs have been replaced by multichannel CIs, as the exploitation of “place-pitch” with multiple electrodes is necessary to support higher levels of speech perception. Multichannel CI devices have bandpass filters that separate sound into different frequency regions and compression that reduces the dynamic range in each band to the narrow dynamic range of electrical hearing, approximately 20 dB. While this approach attempts to mimic the tonotopicity of the normal cochlea, the similarity stops there.
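The compression step can be sketched as a mapping from an acoustic envelope level onto a narrow electrical range between a threshold level and a comfort level. Everything in the sketch is an assumption made for illustration: the 30 to 70 dB input window, the linear-in-dB mapping, the function name, and the threshold and comfort values in arbitrary current units. Clinical devices apply their own loudness growth functions.

```python
# Illustrative dynamic range compression for a single channel.
# Input window, mapping law and current units are assumptions.

def compress_to_electrical_range(level_db: float,
                                 threshold_level: float,
                                 comfort_level: float,
                                 input_floor_db: float = 30.0,
                                 input_ceiling_db: float = 70.0) -> float:
    """Map an acoustic envelope level (dB) into [threshold, comfort]."""
    if comfort_level <= threshold_level:
        raise ValueError("comfort level must exceed threshold level")
    if input_ceiling_db <= input_floor_db:
        raise ValueError("input ceiling must exceed input floor")
    # Clip to the input window so the output never exceeds the comfort level.
    clipped = min(max(level_db, input_floor_db), input_ceiling_db)
    fraction = (clipped - input_floor_db) / (input_ceiling_db - input_floor_db)
    return threshold_level + fraction * (comfort_level - threshold_level)


if __name__ == "__main__":
    for level in (10.0, 30.0, 50.0, 70.0, 90.0):
        out = compress_to_electrical_range(level, 100.0, 200.0)
        print(f"{level:5.1f} dB -> {out:6.1f} current units")
```

Sounds below the input floor are held at threshold and sounds above the ceiling are held at the comfort level, so a wide acoustic range is squeezed into the narrow electrical one.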

The normal cochlea performs a detailed frequency analysis of incoming sound via the micromechanics of basilar membrane motion under the influence of outer hair cell motility. Highly filtered signals are represented in the membrane potential of each inner hair cell, and synapses convey a further transformation of these signals to between 10 and 30 individual auditory neurons (Nobili R et al. 1998).

This sharply tuned system can be contrasted with an implanted cochlea where precise filtering can be performed using signal processing, but current spread (Boex C et al. 2003a) and possibly other less well-understood central phenomena limit the spatial selectivity of electrical stimulation (Boex C et al. 2003b).

This subsequently limits the spectral resolution of implant listeners (Henry BA et al. 2003). The perceptual impact of poor spectral resolution has been studied in detail in hearing aid users (Moore BC 1998) as well as for implant hearing (Fu QJ et al. 2002) and in implant simulations (Fu QJ et al. 2003). It appears that “spectral mismatch” between the population of fibers excited by a given electrode and the frequency range of sound presented to that electrode poses greater limitations to speech perception, at least in quiet, than does poor spectral resolution per se (Baskent D et al. 2003). This mismatch may be related to depth of electrode insertion (Faulkner A et al. 2003) but may also be due to our ignorance of, and relative inability to control, the precise site of electrical excitation of auditory neurons (Miller CA et al. 2003; Mino H et al. 2004; Rubinstein JT et al. 1999).

Attempts to improve frequency selectivity include increasing the modiolar proximity of the electrode array (Wackym PA et al. 2004), development of penetrating electrode arrays (Hillman T et al. 2003), possible use of neurotrophic factors to induce growth of neural processes toward electrodes, and temporal coding to reduce channel interaction.

2.3 Strategies

The effectiveness of a CI speech processing strategy is principally determined by the amount of information about the original speech signal that is encoded in the electrical pulses that stimulate the auditory nerve. The development of such strategies builds upon the known properties of how the auditory pathway encodes speech. This involves the encoding of information in both a place code and a temporal code.

The Continuous interleaved sampling, or CIS strategy (Wilson BS et al. 1991), Spectral Peak, or SPeak and Advanced Combination Encoders, or ACE (Cochlear Corp., Sydney, Australia), as well as the High Resolution (Advanced Bionics Corp, Sylmar, CA) strategies are all variations on this theme. The primary differences among them are rate of stimulation, number of channels, the relation between the number of filters and the number of electrodes activated, as well as the details of how each channel’s envelope is extracted and how much temporal information is preserved. These strategies all have in common the use of temporally interleaved pulses to avoid electrical field interactions that reduce frequency selectivity. SPeak and ACE attempt to emphasize spectral peaks in the signal by selecting a subset of filter outputs with higher energy levels. High Resolution augments the temporal information by allowing higher frequency components through the envelope detector. Although some patients clearly perform better with one strategy than another, average speech scores across populations of patients do not clearly demonstrate superiority of a particular strategy (Wilson BS et al. 1993, Manrique M et al. 2005). Variability across subjects is still substantially greater than variability across hardware or software although there is a clear and substantial trend since the early 1980s for speech perception with implants to improve. Some of this improvement is due to advances in speech processing, some due to implanting patients with more normal auditory systems, and some is due to unknown causes.
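The peak-picking idea described above for SPeak and ACE (selecting a subset of filter outputs with higher energy levels) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the manufacturer's implementation: the function name `select_n_of_m`, the example envelope values and the choice of three selected channels are all hypothetical.

```python
from typing import List


def select_n_of_m(envelopes: List[float], n: int) -> List[int]:
    """Return the indices of the n filter outputs with the highest energy.

    Hypothetical helper for illustration only. Indices are returned in
    ascending channel order; in an interleaved scheme the selected
    channels would then be stimulated one at a time.
    """
    if not 0 <= n <= len(envelopes):
        raise ValueError("n must be between 0 and the number of filters")
    # Rank channels by envelope level, highest first.
    # Python's sort is stable, so ties keep the lower channel index first.
    ranked = sorted(range(len(envelopes)), key=lambda i: -envelopes[i])
    return sorted(ranked[:n])


# Made-up envelope levels for six filter bands (arbitrary units).
example_envelopes = [0.1, 0.8, 0.3, 0.9, 0.05, 0.6]
selected = select_n_of_m(example_envelopes, 3)
print(selected)
```

In this sketch only the selected bands would be stimulated in a given analysis frame, whereas a CIS-like scheme would stimulate every channel in each cycle.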

2.4 Surgical procedure

Full atraumatic scala tympani electrode placement is the goal of every CI operation. This goal can be achieved in most CI surgeries, except in patients with obstructed or malformed cochleas. Although there are many modifications and variations to the CI procedure, electrode insertion is the crucial step. Optimal CI results first depend on optimal electrode placement. Improperly or suboptimally placed electrodes, and kinked or damaged electrodes will result in poor CI functioning and poorer audiological outcomes.

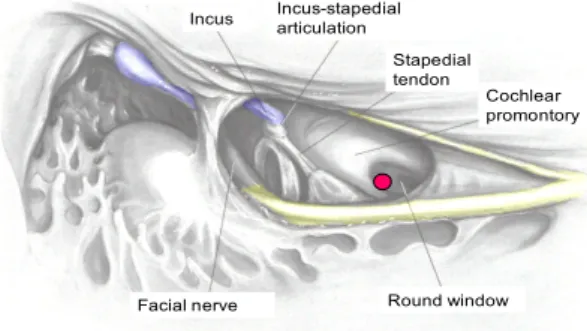

CI electrode insertion requires access to the cochlea through the facial recess approach. Thinning the posterior external auditory canal is the first step to visualize and perform the facial recess. After mastoidectomy, the descending facial nerve is skeletonized so that it can be seen through a thin layer of bone (Figure 15). The short process of the incus is also visualized, but a thin incus bar remains intact. The incus bar can be taken down and the incus removed if more visualization is needed. Preoperative imaging (i.e., computed tomography) reveals and alerts the surgeon to an abnormal course of the facial nerve and to the pneumatization of the facial recess. The chorda tympani nerve is visualized through its course from the facial nerve to the chorda iter posterior, where it enters the middle ear (Figure 16). Bone between the facial and chorda tympani nerves is slowly removed with serially smaller diamond burrs.

An adequate facial recess provides good visualization of the stapedial tendon, round window and cochlear promontory.

To visualize properly and gain access to the cochlear promontory for the cochleostomy, the facial recess must be enlarged inferiorly, and the bone inferior to the stapedial tendon must be removed, anterior to the facial nerve (Figure 17). The chorda tympani nerve can be spared in nearly all cases. Copious irrigation should be used to avoid thermal damage to the nerve when skeletonizing the facial nerve and removing the bone anterior to the facial nerve. An adequate facial recess approach to the middle ear allows full access to the round window niche and inferior cochlea (Figure 17) .

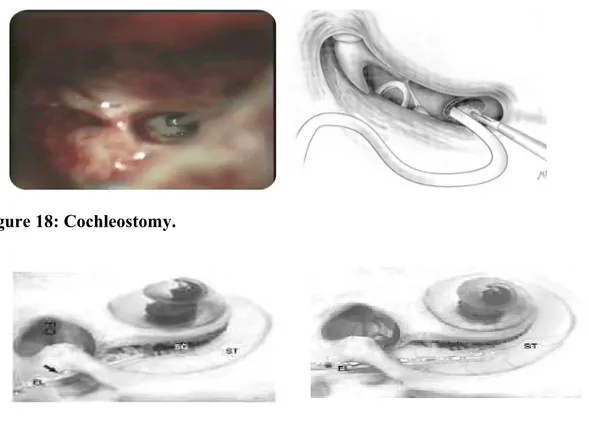

The cochleostomy is made using a 1.0-mm diamond burr, inferior and anterior to the round window membrane (Figure 18). Drilling off the round window niche may be required in some cases to properly visualize the membrane.

The electrode choice and insertion tool used for that electrode determine the cochleostomy size. Round window insertions can also be performed. The ideal cochleostomy position is inferior and slightly anterior to the round window membrane (Figure 18) (Berrettini S et al. 2008b).

A more superior cochleostomy placement would result in injury to the spiral ligament or the basilar membrane, or even result in direct entry to the scala vestibuli (Figure 19). After removal of bone dust and blood, the endosteum is carefully opened with a small pick or a rasp, revealing the lumen of the scala tympani. The receiver/stimulator should be seated and secured before electrode insertion (Figure 20). The Contour Advance® electrode can be inserted into the cochlea either with the “advance off-stylet” (AOS) technique or by inserting the electrode array up to a marker on the antimodiolar side, at approximately 11 mm, and removing the stylet afterwards. In the AOS technique the surgeon holds the stylet firmly with a forceps in one hand while the electrode is advanced off the stylet into the cochlea using an insertion tool (Figure 21). This maneuver allows the electrode to coil during insertion, thus reducing forces on the cochlear outer wall and obtaining a more consistent perimodiolar position, which should in turn reduce the spread of excitation. Because the electrode contacts are only on the modiolar surface, correct orientation of the electrode in the scala tympani is important.

CHAPTER 3

3.1 Assessment for cochlear implantation in adult patients

Assessment for cochlear implantation is undertaken by a multi-disciplinary team, whose aim is to select people who are medically, audiologically and psychologically suitable. It comprises a number of evaluations: audiological, electrophysiological, medical, logopedic, radiological and psychological.

Audiological evaluation involves an accurate personal and family history, pure-tone audiometry for both air and bone conduction, speech audiometry, a speech perception test with hearing aids to evaluate the word recognition score without lip reading and with the hearing aid in the ear that is a candidate for implantation (AA.VV. 1997, Burdo S et al. 1995), tympanometry with stapedial reflex study, auditory brainstem evoked responses and otoacoustic emissions. If this results in a likely indication for CI, patients undertake a three-month trial with acoustic hearing aids to confirm that these do not provide sufficient support.

Medical evaluation includes assessment of fitness for surgery, the aetiology of hearing loss and whether there are other disabilities or medical conditions present that may affect the success of implantation. Aetiological study includes molecular analysis for Connexin 26 and 30 and mitochondrial DNA mutations and deletions (including the A1555G and A3243G point mutations) (Berrettini S et al. 2008a).

Radiological study of the brain and inner ear is performed to examine the anatomical structure of the cochlea and the auditory nerve for anomalies that might indicate unsuitability for surgery or require a modified implantation, such as inner ear malformations, cochlear obstruction or ossification, and morphological abnormalities of the auditory nerve. Neuroradiological study is done using High Resolution Computed Tomography (HR-CT) of the petrous bone and Magnetic Resonance Imaging (MRI) of the brain and inner ear with contrast in all candidates for the CI procedure.

Psychological assessment may be carried out to ensure that realistic expectations of the benefits and the demands of training are understood.

3.2 Cochlear Implant audiological indications in adults and review of the literature

Since 1985, the date of the first FDA approval for cochlear implantation in adults, selection criteria for candidacy have expanded. Numerous clinical trials have been conducted by the FDA since CIs were first introduced, and numerous supplements have been submitted to the FDA as these devices have undergone technological improvements. Thus, the determination of CI candidacy is ultimately based on the best knowledge and judgment of the managing physician. Changes in candidacy have primarily included implanting persons with increasing amounts of residual hearing, implanting persons with increasing amounts of preoperative open-set speech skills, implanting children at younger ages, and implanting greater numbers of persons with abnormal cochleae.

The primary reason that selection criteria have changed is that patients with implants are obtaining increasing amounts of open-set word recognition with the available devices.

Although this increased performance is largely due to the technological advancements that have occurred in the field, it may also be due, at least in part, to the fact that patients with greater amounts of residual hearing and of residual word recognition skills are receiving CIs.

The candidacy requirements for pediatric, and adult, cochlear implantation have gradually loosened. The obvious goal is to never have a single patient perform more poorly with their CI than they previously performed with hearing aids alone (Arts HA et al. 2002; Waltzman SB et al. 2006). The indications for cochlear implantation have expanded, as many unilaterally implanted individuals are able to achieve open-set word recognition. Despite the benefits seen in unilateral implantation, many individuals have difficulty perceiving speech in noisy environments. Bilateral cochlear implantation has made great strides in providing individuals access to sound information from both ears, allowing improved speech perception in quiet and in noise, as well as sound localization.

CI indications in adults vary between countries. With regard to hearing threshold levels, the international guidelines indicate different levels of hearing loss above which CI is indicated. Some guidelines refer to the pure-tone average at 0.5, 1 and 2 kHz (PTA), while others refer to the mean threshold between 2 and 4 kHz (UK Cochlear Implant Study Group 2004b).

In adult patients, according to the Food and Drug Administration (FDA), CI is indicated with a PTA > 70 dB, while according to Belgian guidelines it is indicated with a PTA > 85 dB associated with an auditory brainstem response (ABR) threshold ≥ 90 dB HL. The British Cochlear Implant Group (BCIG) considers CI appropriate for adult patients with thresholds between 2 and 4 kHz > 90 dB. Italian guidelines allow CI in adult patients with a PTA > 75 dB (Quaranta A. et al. 2009).

Concerning hearing aid training and rehabilitative results with traditional hearing aids before implantation, Italian and British guidelines consider CI appropriate in cases with open set speech recognition score of < 50%, the FDA in cases with open set speech recognition score < 60% and Belgian guidelines in cases with open set speech recognition score < 30%.
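The audiometric thresholds and aided speech scores quoted above can be collected in a small lookup table to make the differences between guidelines easier to compare. The sketch below is purely illustrative and is not a clinical decision tool: the names `Criterion`, `GUIDELINES` and `meets` are hypothetical, the "British" speech criterion is assumed to belong to the BCIG entry, and the Belgian ABR requirement is deliberately omitted for simplicity.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Tuple


@dataclass(frozen=True)
class Criterion:
    frequencies_khz: Tuple[float, ...]  # frequencies averaged for the threshold
    min_threshold_db: float             # mean threshold must exceed this value
    max_speech_score: float             # aided open-set score (%) must be below this


# Values transcribed from the text above. Simplification: the Belgian
# ABR requirement (>= 90 dB HL) is not modelled in this sketch.
GUIDELINES: Dict[str, Criterion] = {
    "FDA": Criterion((0.5, 1, 2), 70, 60),
    "Belgian": Criterion((0.5, 1, 2), 85, 30),
    "BCIG": Criterion((2, 4), 90, 50),
    "Italian": Criterion((0.5, 1, 2), 75, 50),
}


def meets(guideline: str, audiogram_db: Dict[float, float], speech_score: float) -> bool:
    """Check the audiometric and speech criteria of one guideline.

    audiogram_db maps frequency in kHz to hearing threshold in dB.
    """
    c = GUIDELINES[guideline]
    avg = mean(audiogram_db[f] for f in c.frequencies_khz)
    return avg > c.min_threshold_db and speech_score < c.max_speech_score


# Hypothetical patient: thresholds in dB at 0.5, 1, 2 and 4 kHz,
# aided open-set speech recognition score of 40%.
audiogram = {0.5: 80, 1: 85, 2: 90, 4: 95}
for name in GUIDELINES:
    print(name, meets(name, audiogram, 40))
```

For this made-up patient the PTA is 85 dB, which satisfies the FDA and Italian thresholds but not the strict "greater than 85 dB" Belgian one, illustrating how the same audiogram can fall on different sides of national criteria.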

In a recent review by Berrettini S. et al. (2011), CI was considered an appropriate procedure for adult patients with bilateral severe to profound hearing loss (mean threshold at 0.5, 1 and 2 kHz > 75 dB HL) and an open-set speech recognition score ≤ 50% in the best aided condition without lipreading. In selected cases CI would be indicated if the open-set speech recognition score is ≤ 50% in the best aided condition without lipreading with background noise at a signal-to-noise ratio of +10 dB.

CI is also allowed in selected cases with better residual hearing at low and middle frequencies and a hearing threshold between 2 and 4 kHz ≥ 90 dB, with an open-set speech recognition score ≤ 50% in the best aided condition without lipreading.

According to the only two systematic reviews on the clinical effectiveness of unilateral CI published in the literature to our knowledge (NICE 2011, Bond M 2009), there is consistent evidence that a unilateral CI is a safe, reliable and effective strategy for adults with severe to profound sensorineural deafness.

Our auditory environments are noisy and full of multiple sound sources that are a challenge for the auditory system even in a normal-hearing listener. The binaural system is responsible for providing cues that segregate target signals from competing sounds, and it allows normal-hearing listeners to identify sound sources. Binaural hearing is the result of integration between inputs from the two ears and auditory pathways. It supports speech understanding when competing sounds are present, and it is well known that when listening with only one ear, sound localization becomes very difficult to achieve. Three primary effects on perception have been identified in binaural hearing: the head shadow effect, the binaural summation effect, and the binaural squelch effect (Stern R. M. Jr. and H. S. Colburn 1978).

The head shadow effect occurs during everyday listening conditions when speech and noise are spatially separated. For example, background noise coming from the right side would interfere with the right ear but the head would block (create an acoustic shadow) some of the interfering noise from reaching the left ear. Thus, the head shadow effect would result in a better signal-to-noise ratio (SNR) in the protected left ear (Brown K. D. et al. 2007).

A listener is able to selectively attend to the ear with the better SNR to improve intelligibility. The head shadow attenuates high-frequency sounds by approximately 20 dB but low frequency by only 3–6 dB (Tyler R. S et al. 2003), and this effect does not require central auditory processing. The head shadow effect has the largest impact on hearing with binaural cochlear implantation.

The binaural squelch effect also operates when competing noise is spatially separate from the signal so that the two ears receive different inputs. Unlike the purely physical head shadow, however, squelch requires central auditory processing that integrates the signals from each ear so that the auditory cortex receives a better signal than could be possible from either ear alone (Zurek P. M. 1993). The brainstem auditory nuclei process differences in timing, amplitude and spectral signals coming from the two ears, resulting in improved separation of speech and noise. Evidence of benefit from the binaural squelch effect is somewhat limited; it is not seen in all users, in particular adult ones, and it is not as large as the benefit seen with the head shadow effect (Brown K. D. et al. 2007).

Binaural summation also refers to a central processing effect, but it is thought to occur when both ears are presented with a similar signal. The combined signals from the two ears are perceived as louder by up to 3dB compared with monaural listening to the same signal (Litovsky R et al. 2007). This doubling of perceptual loudness is accompanied by increased sensitivity to differences in intensity and frequency and can lead to improvements in speech intelligibility under both quiet conditions and when exposed to noise. The literature in this area is limited, and the benefit is not as great as the head shadow effect (Brown K. D. et al. 2007).

Considering the advent of cochlear implantation and the great benefits experienced by users, it is important to stress its limitations, some arising from the device itself and others associated with monaural hearing.

The unilateral CI user shows poor ability in sound source identification and may have difficulty hearing speech in noisy environments. The extent to which bilateral CI recipients are able to utilize the effects described above ultimately determines the degree of additional benefit a second implant will provide.

The literature now contains large series of bilateral CI recipients, and authors have reported the ability of bilateral CI users to hear speech in the presence of competing stimuli and to take advantage of spatial separation between target and competing speech (Litovsky R et al. 2007; Gantz B. J. et al. 2002; Muller J. et al. 2002). A common finding of these studies was that the binaural advantage, with better speech intelligibility, occurred to a greater extent under noisy conditions than in a quiet environment, mostly because of the head shadow effect. In a study conducted with 17 native English-speaking adults with postlingual deafness who received the same implant model (Nucleus 24 Contour implant) in both ears, either during the same surgery or during two separate surgeries, the authors found that the improvement in speech intelligibility with two CIs versus one increases with time, in particular when spatial cues are used to segregate speech and competing noise.

Localization and speech-in-noise abilities in this group of patients are somewhat correlated (Litovsky RY et al. 2009). In bilaterally implanted children, there is a significant increase in speech discrimination when bilateral implants are compared with the best-performing unilateral implant (Kuhn-Inacker H et al. 2004; Peters BR et al. 2007). At its annual meeting on September 15, 2007, the William House Cochlear Implant Study Group discussed the issue of bilateral implantation with about 250 CI professionals.

The specialists were concerned about the difficulties of unilaterally implanted patients in everyday listening conditions. Functional localization of sounds is not possible with only one implant, often creating a safety issue, and hearing in noise is very difficult. They considered bilateral cochlear implantation advisable based on the results of improved speech intelligibility and sound localization and the expansion of the receptive sound field. Finally, they agreed that both children and adults may have better auditory performance with bilateral implants compared with unilateral ones (Balkany T. et al. 2008).

However bilateral cochlear implants may not be an option or recommended for all adult recipients. This could be due to health issues that prevent a second surgery, lack of insurance coverage, or, in many cases, a notable amount of residual hearing in the nonimplanted ear. In these cases, the use of a hearing aid in the nonimplanted ear can represent a viable, affordable, and potentially beneficial option for bilateral stimulation despite the asymmetry in hearing between ears (Berrettini S et al. 2010). This asymmetry occurs because the type of auditory input received by the two ears is quite different. The CI provides electric stimulation, while the hearing aid provides acoustic stimulation, which in combination across the ears is likely to provide atypical interaural difference cues in time and level. Performance in the bimodal condition was significantly better for word recognition and localization in noise (Berrettini S et al. 2010).

With regard to bilateral CI in adult patients, the NICE guidance (NICE 2011) did not consider bilateral CI a cost-effective procedure. The NICE guidance (2011) allows simultaneous bilateral CI in deaf-blind adult patients or in adult patients with additional disabilities. The sequential procedure is not allowed except for deaf-blind adult patients or adults with additional disabilities who previously received a unilateral CI. On the other hand, Bond et al. (2009) report that clinical effectiveness evidence for bilateral implantation suggests that there is additional gain from having two devices, as these may enable people to hold conversations in social situations by being able to filter out voices from background noise and tell the direction that sounds are coming from (Bond M et al. 2009). The mechanisms underlying the benefit deriving from the use of two implants are attributed to the head shadow effect (Eapen RS et al. 2009, Litovsky R et al. 2006, Ricketts TA et al. 2006, Schleich P et al. 2004, Laszig R et al. 2004), namely the possibility of hearing with the ear that has the most favourable signal-to-noise ratio. The studies by Eapen RS et al. (2009) and by Ricketts TA et al. (2006) also document benefits deriving from binaural summation and from the squelch effect, which are linked to binaural integration. The benefits linked to binaural summation are also reported in the study by Schleich P et al. (2004).

Recently Berrettini S. et al. (2011) stated that the unilateral CI procedure is preferred in adult patients, while bilateral CI is limited to: patients with post-meningitic deafness and initial bilateral cochlear ossification; deaf-blind patients or patients with multiple disabilities (who increase their reliance on auditory stimuli as a primary sensory mechanism for spatial awareness); patients with unsatisfactory results with unilateral CI if better results are achievable with a contralateral CI; and patients with CI failure if reimplantation in the same ear is not recommended.

Both simultaneous and sequential procedures are admitted, although the simultaneous procedure is recommended. In the case of sequential bilateral implantation a short delay between the surgeries is recommended.

Berrettini et al. (2011) have recently investigated the effectiveness of the CI procedure in special categories of patients, such as adult patients of advanced age and adult patients with prelingual deafness.

In the past, the CI procedure was not considered for adult patients of advanced age, as the benefit was thought to be significantly inferior to that generally obtained by younger post-lingual adult patients. This was especially due to the physiological deterioration of cognitive abilities, which has an impact on the capacity for speech perception with the CI, and to problems regarding tolerance of the surgical procedure, risk of postoperative complications and difficulty in manipulating the external components of the device. More recently, however, some authors have demonstrated that not only young adults, but also adult patients of advanced age benefit from the CI procedure in terms of improvement of speech perception, and some studies have also demonstrated an improvement in quality of life. No relevant problems have been reported from the point of view of tolerance to the medical procedures and postoperative complications. In 2004 the UK Cochlear Implant Study Group (UKCISG 2004b) established that the CI procedure is cost-effective even in patients implanted after the age of 70 (Friedland DR et al. 2010). The recent Italian HTA report on the CI procedure (2011) stated that CI in the elderly is allowed, without any upper age limit. General health problems and life expectancy have to be taken into account and CI indications have to be considered on a case-by-case basis (Berrettini S et al. 2011).

Benefits from CI procedure in prelingually deafened adults are reported to be very variable in the literature (Berrettini S et al. 2010).

Two studies (Santarelli R et al. 2008, Klop WMC et al. 2007) selected by HTA on CI procedure recorded post-CI benefits of perceptive abilities, in both closed and open sets.

The study by Klop WMC et al. (2007) also documents an improvement of the quality of life after CI, while the study by Chee GH et al (2004) shows the subjective benefits following CI, in the majority of patients evaluated, as a result of improvement of communicative abilities, awareness of the surrounding environment, and increased independence.

Interindividual variability, present in the various studies, is most evident in the work by Klop WMC et al. (2007).

The Italian HTA on the CI procedure stated that indications for CI in adults with pre-lingual deafness and prognostic factors have to be analyzed and discussed on a case-by-case basis. Factors to be taken into account are mainly progression of deafness, hearing aid use and rehabilitation (in particular methodology of rehabilitation), results with hearing aids, patient’s motivations and psychological aspects.

3.3 Review of the literature of cochlear implant prognostic factors in adult patients

Success with CI in adult patients is variable and depends on many factors. Although CIs are a therapeutic success, huge performance variability in speech comprehension is observed after implantation. The reason for this remains incompletely understood after years of clinical practice and basic research. Which patients are going to respond well, and why, is an unresolved question. In the studies, patient outcomes are measured in terms of either speech perception or quality of life.

The outcome of CI is affected by several factors. Preoperative factors that make a significant contribution to postoperative hearing performance are duration of deafness, communication mode, etiology of deafness, age at the moment of CI surgery, speech perception performance and residual hearing (Osberger MJ et al. 2000; Friedland DR et al. 2003; Rubinstein J. et al. 1999). Postoperative factors that make a significant contribution to postoperative hearing performance include: surgical technique, intracochlear array (straight or perimodiolar), device, cochleostomy and position of the array.

The duration of auditory deprivation plays an important role, and currently is the main predictor of implantation success in children; basically, the earlier the better. A stronger effect of duration of deafness on speech perception and quality of life was shown by the UK Cochlear Implant Study Group (2004a), with greater effectiveness being associated with a shorter duration of profound deafness. On all measures effectiveness declined with duration of deafness (r = -0.203, p < 0.01). However, among patients with identical duration of deafness, performance remains highly variable, suggesting there are other more fundamental factors that determine clinical outcome. In fact, several recent studies report no significant correlation between performance and the duration of deafness in their cohorts (Ruffin CV et al. 2007; Francis HW et al. 2005). Rubinstein et al. (1999) and Friedland et al. (2003) used multiple regression analysis with both pre-operative sentence scores and the duration of deafness to predict the postoperative word scores.

This model was extended to include the age at implantation (Leung J et al. 2005); this parameter showed a significant result if used as a step function for younger (<65 yr) and older (>65 yr) implantees. The population 65 years and older demonstrated a clinically insignificant 4.6%-smaller postoperative word score compared with the population younger than 65 years. The authors stated that the duration of profound deafness and residual speech recognition carry higher predictive value than the age at which an individual receives an implant.

In the recent Peninsula report (Bond M et al. 2007) on the effectiveness and cost-effectiveness of the CI procedure, it seems that age at implantation may affect effectiveness (r = 0.164, p < 0.01), with greater gain being experienced by adults who are post-lingually rather than pre-lingually deaf. A review of the literature revealed that in adult CI recipients with severe to profound early-onset hearing loss, results have generally been poor, with many gaining little improvement post-implant, and more in quality of life than in audiological outcomes (Waltzman SB et al. 2002, Teoh SW et al. 2004, Santarelli et al. 2008).

In fact, prelingually deafened adults are a particular category of patients, as they differ in at least two aspects from their postlingually deafened counterparts. First, they have not experienced normal hearing, and thus their neural system lacks spatial and structural organization for auditory processing (Kilgard MP et al. 2002).

Second, linguistic development depends on early auditory input. This means that the main part of patients’ speech intelligibility will be determined by the auditory input during their first years of life (Oller DK et al. 1988). Recently, the role of auditory input on CI performance in the prelingually deafened adult population was further explored, and it was concluded that all factors in the past and present contributing to oral communication might be important to the effectiveness of CI in this specific group (Teoh SW et al. 2004).

The use of varying clinical programs, particularly different devices, can influence the usefulness of performance predictors. Electronics and electrode designs have evolved over time (Hodges AV et al. 1999; Frijns JH et al. 2002; Patrick JF et al. 2006), leading to improved speech perception for the whole group of CI recipients. Outcomes produced by older device technology can partially invalidate predictions of the outcomes of current devices.

As mentioned above, the use of processor variations or different electrode array types could well have influenced these outcomes. In fact, the pre-curved design of the intracochlear array is intended to match the natural shape of the cochlea, positioning the electrodes closer to the spiral ganglion cells than straight arrays do. Consistent electrode placement would permit a better electric-neural interface, reducing spectral smearing for superior hearing outcomes (Aschendorff A et al. 2007).

Another factor that may influence the outcome of these predictive studies is the time at which audiological performance was evaluated. It was observed that some users need more time to approach their plateau performance level. Lenarz et al. (2012) reported stability in the long-term hearing performance of postlingually deafened adult CI patients as a result of reliable implant technology and a stable biological electrode-nerve interface (long-term biocompatibility). Their postlingually deafened adult CI subjects showed a learning phase of 6 months (Lenarz et al. 2012), in which a significant improvement in speech perception was observed; in fact, it takes a relatively short time for the brain of postlingually deafened adults to learn how to process the artificial signal delivered by the CI (Spahr AJ 2007). Following this primary learning phase, further improvements and deteriorations were not statistically significant (Lenarz M et al. 2012). Ruffin et al. (2007), in a retrospective longitudinal study (10-year follow-up) of 31 Clarion 1.0 users, found that after 24 months from implantation there was no significant improvement or decline in speech results.

Herzog et al. (2003) reported similar results for the Einsilber test for geriatric and normal adults. The plateau was reached after 2 years for both groups (55%) and remained stable up to 5 years of follow-up.

In this regard, we considered that enriching clinical and anamnestic pre-implant data with electrophysiological post-implant data such as NRT and SOE could permit a better prediction of patients' future audiological outcomes.

3.4 Electrophysiological study post cochlear implantation in adult patients: objective measures (NRT-SOE)

In order to give specific prognostic results and a more accurate mapping program to the CI population after the surgical procedure, it would be of great importance to be able to use objective measures during CI follow-up.

As is well known, CI is the treatment of choice for bilateral severe and profound SNHL, and it requires programming of each electrode so that appropriate levels of electrical stimulation are reached. The programming is made in the patient's speech processor, which determines how sound is going to be analyzed and codified by a speech coding strategy (Bento RF et al. 1997). The stimulation unit used to program electrodes is arbitrary; it is named current unit (CU) and ranges from 1 to 255, corresponding to approximately 0.01 mA and 1.75 mA, respectively. One of the main challenges in the case of implants in small children is postoperative follow-up, especially in the definition of current levels for stimulation that will determine the programming of the speech processor (Gordon KA et al. 2002).
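The current-unit scale mentioned above can be made concrete with a small conversion sketch. The text gives only the two endpoints (about 0.01 mA at 1 CU and about 1.75 mA at 255 CU); the sketch below assumes that the steps in between are logarithmically spaced, which is an assumption made here for illustration and should be checked against the device documentation. The function name `current_unit_to_ma` is hypothetical.

```python
I_MIN_MA = 0.01   # approximate current at the lowest unit (from the text)
I_MAX_MA = 1.75   # approximate current at the highest unit (from the text)
CU_MIN, CU_MAX = 1, 255


def current_unit_to_ma(cu: int) -> float:
    """Convert a current unit (CU) to milliamperes.

    ASSUMPTION: logarithmic spacing between the two endpoints quoted in
    the text; the real device mapping may differ.
    """
    if not CU_MIN <= cu <= CU_MAX:
        raise ValueError("current unit must lie between 1 and 255")
    # Position of this unit on the scale, from 0.0 (lowest) to 1.0 (highest).
    fraction = (cu - CU_MIN) / (CU_MAX - CU_MIN)
    # Geometric interpolation: equal CU steps give equal current ratios.
    return I_MIN_MA * (I_MAX_MA / I_MIN_MA) ** fraction


for cu in (1, 128, 255):
    print(cu, round(current_unit_to_ma(cu), 4))
```

Under this assumption each CU step changes the current by a constant ratio rather than a constant amount, so the middle of the scale (128 CU) corresponds to roughly 0.13 mA rather than the arithmetic midpoint of the range.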

The success of the patient and satisfaction with the implant are highly dependent on the appropriateness of the speech processor programming, because it is the map that determines which sounds and which of their characteristics are encoded. Objective measures have been studied and employed to predict the levels of stimulation for the construction of the first maps and also to verify the integrity of the whole system. It is natural for these levels to undergo small changes over time, and they should be complemented and adjusted with psychoacoustic measurements as the subject's ability to detect, recognize and consistently respond to the sound stimulus increases (Gordon KA et al. 2002).

Several methods are available to obtain objective measures of the response of the auditory system to electrical stimulation in CI users, such as evoked auditory brainstem potentials (ABR), middle latency responses, late potentials and the stapedial reflex, in an attempt to define the prognosis for the subject (Shallop JK et al. 1999; Brown CJ et al. 2000).

3.4.1 Neural response telemetry

The measurement of auditory nerve activity most frequently used in CI users is the Evoked Action Potential (EAP). The EAP represents a synchronous response from electrically stimulated auditory nerve fibers and is essentially the electrical version of Wave I of the ABR. The EAP is recorded as a negative peak (N1) at about 0.2-0.4 ms following stimulus onset, followed by a much smaller positive peak or plateau (P2) occurring at about 0.6-0.8 ms (Abbas et al. 1999; Brown et al. 1998; Cullington 2000) (Figure 24). The amplitude of the EAP can be as large as 1-2 mV, which is larger in magnitude than the electrically evoked ABR (EABR) (Brown et al. 1998). EAP measures have become a popular alternative to clinical EABR testing due to ease of recording and reduced testing time. In contrast to the EABR, EAP measures do not require surface recording electrodes, sleep/sedation, or additional averaging equipment. The EAP is recorded via the intracochlear electrodes of the implant; therefore, the neural potential is larger than the EABR and fewer averages are needed, which significantly reduces testing time.
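The latency windows given above for N1 and P2 suggest a simple way of reading an amplitude from a recorded trace. The following is a minimal sketch, assuming the stimulus artifact has already been removed and that the trace is available as paired lists of times (ms) and voltages (µV); the function name `eap_amplitude`, the fixed windows and the synthetic trace are illustrative choices, not the peak-picking procedure of any clinical software.

```python
import math


def eap_amplitude(times_ms, trace_uv, n1_window=(0.2, 0.4), p2_window=(0.6, 0.8)):
    """Return (N1 latency, P2 latency, N1-to-P2 amplitude) using fixed latency windows."""

    def pick(window, chooser):
        lo, hi = window
        # Keep only the samples whose latency falls inside the window.
        samples = [(v, t) for t, v in zip(times_ms, trace_uv) if lo <= t <= hi]
        if not samples:
            raise ValueError(f"no samples between {lo} and {hi} ms")
        value, latency = chooser(samples)  # tuples compare by voltage first
        return latency, value

    n1_latency, n1_value = pick(n1_window, min)   # most negative point
    p2_latency, p2_value = pick(p2_window, max)   # most positive point
    return n1_latency, p2_latency, p2_value - n1_value


# Synthetic, artifact-free trace (hypothetical values): N1 near 0.3 ms, P2 near 0.7 ms.
times_ms = [i / 20 for i in range(21)]            # 0.00 to 1.00 ms in 0.05 ms steps
trace_uv = [
    -300.0 * math.exp(-((t - 0.3) / 0.08) ** 2)
    + 100.0 * math.exp(-((t - 0.7) / 0.10) ** 2)
    for t in times_ms
]

n1_ms, p2_ms, amplitude_uv = eap_amplitude(times_ms, trace_uv)
print(f"N1 at {n1_ms:.2f} ms, P2 at {p2_ms:.2f} ms, amplitude {amplitude_uv:.0f} uV")
```

Fixed windows keep the sketch short; in practice peak latencies vary between subjects and electrodes, so the windows would need to be checked against the actual waveform.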

Because the EAP is an early-latency evoked potential, there are challenges associated with separating it from stimulus artifact. The specific methods used in present-day clinical software vary among manufacturers. It is helpful to understand how each of these artifact reduction methods works in order to make informed decisions about optimizing stimulation and recording parameters for clinical EAP measurements.

All newer CI systems are equipped with two-way telemetry capabilities that allow for quick and easy measurement of electrode impedance and the EAP. Telemetry simply means data transmission via radio frequency from a source to a receiving station.

Neural Response Telemetry (NRT) is the EAP telemetry software used with the last four generations of Cochlear Corporation (Lane Cove, New South Wales, Australia) devices (Nucleus 24M, Nucleus 24R, Nucleus 24RE "Freedom", and Nucleus CI512). NRT was first introduced in 1996 and was FDA approved in 1998 as its own software application, separate from the clinical programming software. Today, NRT has been integrated with the Nucleus Custom Sound clinical programming software (Custom Sound EP) so that EAP threshold information can easily be used to aid in creating speech processor programs, or maps (Figure 26). NRT uses the forward-masking subtraction paradigm as the default artifact reduction method; however, alternating polarity is one of several other options available in the software.

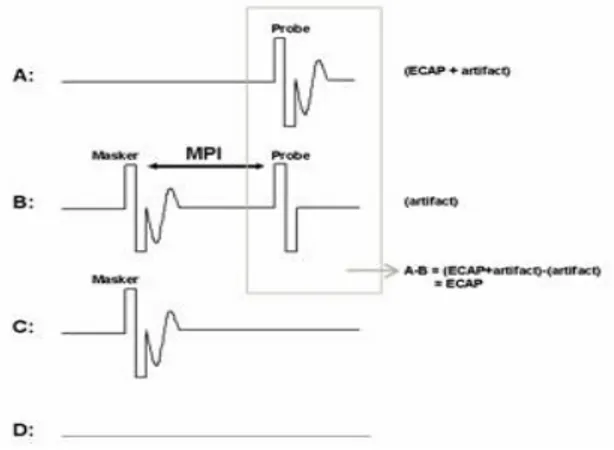

The forward-masking subtraction method exploits the refractory properties of the auditory nerve to isolate stimulus artifact. This method has been described in detail in several publications (Abbas et al. 1999; Brown et al. 1990, 1998, 2000; Dillier et al. 2002). In this method, four stimulus conditions are presented: (1) probe stimulus alone, (2) masker stimulus followed by the probe, (3) masker stimulus alone, and (4) zero-current pulse to elicit system artifact (see traces A-D, respectively, in Figure 25). For the sake of simplicity, the first two conditions are the most important for a basic understanding of how this method works. In the probe-alone condition (condition A; Figure 25), the probe recruits auditory nerve fibers in the vicinity of the stimulated electrode. The measured waveform contains the neural response as well as stimulus artifact. In the masker-plus-probe condition (condition B; Figure 25), the masker stimulus recruits the same auditory nerve fibers as in condition A (if the stimuli are presented to the same electrode at the same level). The probe is then presented shortly after the masker (typically about 0.4 ms later), within the refractory period of those fibers. As a result, only stimulus artifact (no neural response) is presumably recorded for the probe stimulus in condition B. If the response to the probe in condition B (i.e., artifact only) is subtracted from the response to the probe in condition A (i.e., neural response and artifact), then the neural response to the probe can be isolated from the stimulus artifact. The last two stimulus conditions in the 4-step process (C: masker alone and D: system artifact) are collected, and subtractions are applied to remove additional artifacts and the neural response to the masker in condition B.

One parameter that is unique to the subtraction method is the masker-probe interval (MPI; sometimes referred to as the masker advance or inter-pulse interval). This is the time between offset of the masker and onset of the probe in the masker-plus-probe condition. Ideally, the MPI should be sufficiently short to ensure that most auditory nerve fibers are fully within their refractory period (e.g., 300-500 µs). For routine clinical EAP measures, the MPI is generally not changed unless the user is specifically measuring MPI effects (Brown et al. 1990, 1998; Miller et al. 2000; Morsnowski et al. 2006; Shpak et al. 2004). Hughes (2006) provides a detailed discussion of stimulation and recording parameter changes as well as guidance on how to pick peaks and determine the EAP threshold.
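The four-trace subtraction described above is commonly written as A - B + C - D. The sketch below illustrates why this combination leaves only the neural response to the probe, using synthetic traces; all waveform shapes, amplitudes and function names are hypothetical and chosen only for illustration, and the model assumes the idealized case in which the probe elicits no neural response at all in condition B.

```python
import math


def probe_artifact(t_ms):
    """Hypothetical stimulus artifact of the probe: a large decaying exponential (uV)."""
    return 2000.0 * math.exp(-t_ms / 0.15)


def probe_neural(t_ms):
    """Hypothetical EAP: negative peak near 0.3 ms, smaller positive peak near 0.7 ms (uV)."""
    n1 = -300.0 * math.exp(-((t_ms - 0.3) / 0.08) ** 2)
    p2 = 100.0 * math.exp(-((t_ms - 0.7) / 0.10) ** 2)
    return n1 + p2


def masker_residue(t_ms, mpi_ms=0.4):
    """Hypothetical masker artifact and masker response still present in the recording window."""
    shifted = t_ms + mpi_ms
    return 0.2 * probe_artifact(shifted) + 0.2 * probe_neural(shifted)


def forward_masking_subtraction(a, b, c, d):
    """Combine the four traces sample by sample as A - B + C - D."""
    return [ai - bi + ci - di for ai, bi, ci, di in zip(a, b, c, d)]


times_ms = [i / 20 for i in range(1, 21)]   # 0.05 to 1.00 ms after probe onset
system_artifact = 5.0                        # constant offset, present in every trace

# A: probe alone -> neural response + probe artifact
trace_a = [probe_neural(t) + probe_artifact(t) + system_artifact for t in times_ms]
# B: masker + probe -> probe artifact only (fibers refractory) + masker residue
trace_b = [probe_artifact(t) + masker_residue(t) + system_artifact for t in times_ms]
# C: masker alone -> masker residue
trace_c = [masker_residue(t) + system_artifact for t in times_ms]
# D: zero-current pulse -> system artifact only
trace_d = [system_artifact for _ in times_ms]

isolated = forward_masking_subtraction(trace_a, trace_b, trace_c, trace_d)
largest_error = max(abs(x - probe_neural(t)) for x, t in zip(isolated, times_ms))
print(f"largest deviation from the true probe response: {largest_error:.2e} uV")
```

In real recordings the cancellation is only approximate: if the MPI is too long, or the masker does not recruit all the fibers excited by the probe, some neural response remains in condition B and the isolated EAP is underestimated.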

The alternating polarity method is similar to that used routinely for clinical ABR testing. With this method, responses are measured for negative-leading (cathodic) and positive-leading (anodic) biphasic pulses. As with acoustically evoked potentials, reversing the polarity of the stimulus results in reversing the polarity of the artifact. However, the polarity of the neural response remains the same. Upon averaging responses from both polarities, the majority of the