C O N T E N T S

1 ���������� ������������ ��� ������ ����������� 3 1.1 Artificial Intelligence 3 1.2 Real-Time Systems 8 1.3 Contributions 11 2 ������ ��� & ��� 13 2.1 MAS: an AI expression 13 2.2 RTS characteristics 16 3 ��� & ����-���� ���������� 20 3.1 Traditional approaches 203.1.1 Case study: eHealth and telerehabilitation 21

3.2 Analysis of MAS Pillars 27

3.2.1 Local scheduling in agent-based frameworks 27

3.2.2 Agents’ communication middleware 34

3.2.3 Agents’ negotiation protocol 34

3.3 MAS and Real-Time: open challenges 45

3.3.1 Timing constraints 45

3.3.2 Communication means 45

3.3.3 Scarcity of resources 46

3.4 MAS and Real-Time: required intervention 46

3.4.1 Towards a real-time agent’s scheduler 46

3.4.2 RM and EDF Analysis 48

3.4.3 Towards a real-time communication middleware 53

3.4.4 Towards a real-time negotiation protocol 54

4 ��-��� 58

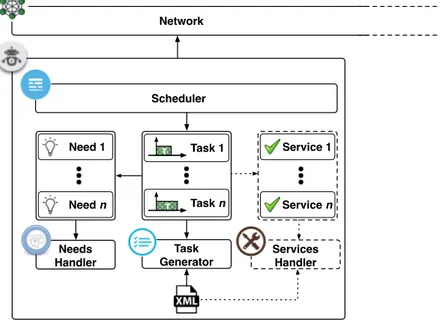

4.1 RT-MAS: the model 58

5 �� ��� ��- ��� ��������� 63

5.1 MAXIM-GPRT Overview 64

5.2 Agent Structure 64

�������� iii

5.2.1 Agent Network and NED 65

5.2.2 Agent Initializtion 67

5.2.3 MessageHandling Mechanisms 70

5.2.4 Local Scheduler 74

5.3 Schedulers Implementation 78

6 ���� ��� ������� 82

6.1 Tests and Setup 82

6.2 Task-set Generator 83

6.3 Results Presentation and Analysis 87

6.4 Results Discussion 95

7 ����������� ��� ������� ����� 97

a �����++ �� � �������� 99

a.1 Modules 100

a.2 NED Language 100

a.3 Messages 101

L I ST O F F I G U R E S

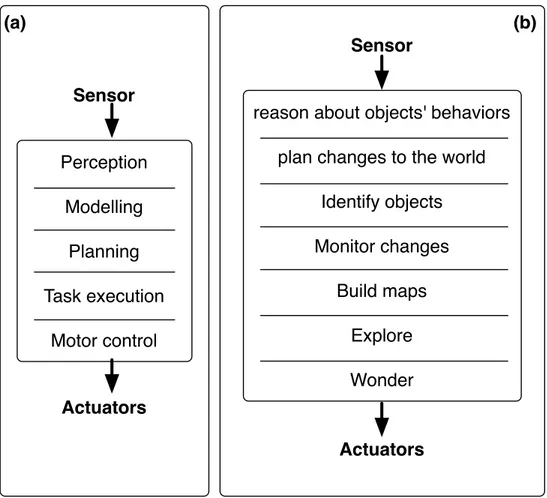

Figure 1 (a) Functional decomposition; (b) Behavior-based

de-composition. 14

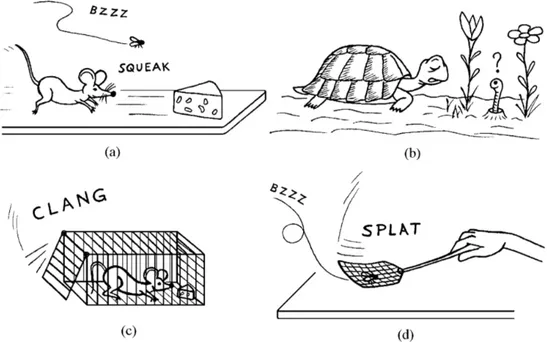

Figure 2 Both the mouse (a) and the turtle (b) behave in real time with respect to their natural habitat. Nevertheless, the survival of fast animals such as a mouse or a fly can be jeopardized by events (c and d) quicker than their reactive capabilities. Copyright 2011 by Springer, "Hard Real-Time Computing Systems", Third Edition. Reprinted

with permission. 17

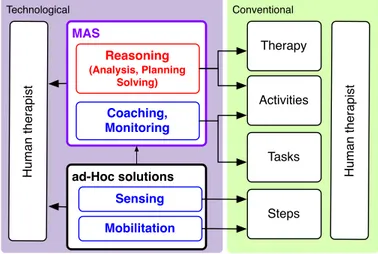

Figure 3 Generic structure of MAS solutions for rehabilitation. 25

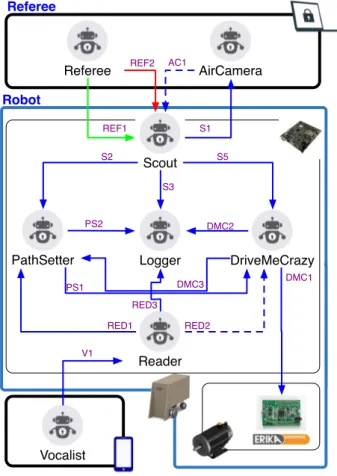

Figure 4 Agent framework, architecture and interactions. 26

Figure 5 Jason’s implementation of RR scheduling: A graphical

representation. 30

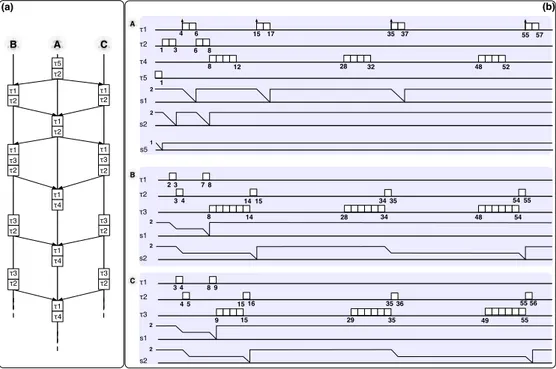

Figure 6 System representation in: (a) AUML, (b) tasks

schedul-ing. 52

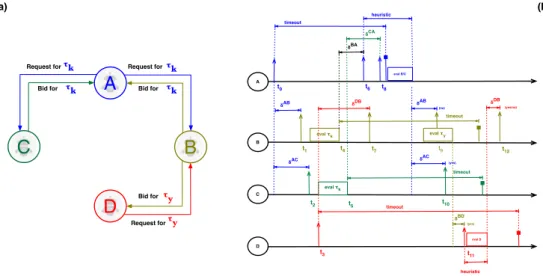

Figure 7 (a) Agents interactions; (b) Schedule of agents A,B,C, and D negotiating tasks ⌧kand ⌧y. 55

Figure 8 From the need for the workload to its execution. 62

Figure 9 Agent composition. 65

Figure 10 Network initialization - flowchart. 66

Figure 11 Agents initialization - flowchart. 68

Figure 12 FCFS internal characterization in MAXIM-GPRT. 79

Figure 13 RR internal characterization in MAXIM-GPRT. 80

Figure 14 EDF internal characterization in MAXIM-GPRT 81

Figure 15 Verbose interface for the task generator tool 84

Figure 16 Web interface for the task generator tool and three

gen-erated examples. 84

Figure 17 Characterization of a simulation execution in

MAXIM-GPRT. 87

Figure 18 Overall FCFS deadline miss: run x.x.x.1 89

Figure 19 Overall RR deadline miss: run x.x.x.1 90

Figure 20 Deadlines misses for: 5 agents, Ua

m, and A⌧l [0-200s].

91

Figure 21 Task-set for simulation 5 agents Ua

m and A⌧l 91

Figure 22 Task-sets for: 10 agents, Ua

h, and U⌧x. 92

Figure 23 Deadline misses for: 10 agents, Ua

h, and U⌧x[0-200s]. 92

Figure 24 Deadline missed for 10 agents over Ua per U⌧

l, U⌧h, and

U⌧x. 93

Figure 25 Deadline missed for 10 agents over U⌧

l, U⌧h, and U⌧xper

Ua. 93

Figure 26 Deadline missed for: 10 agents, Ua

h, and U⌧x[0-1200s]. 94

L I ST O F TA B L E S

Table 1 MAS’ feature supporting their adoption in IoT and CPS 16

Table 2 Brief overview of the major agent platforms. 28

Table 3 Assumptions overview 36

Table 4 Requirements Overview 39

Table 5 Strengths overview 41

Table 6 Limitations Overview 43

Table 7 Mapping of open challenges - Interventions on MAS

pil-lars 46

Table 8 Improvements required for Local Scheduler. 51

Table 9 Improvements required for Local Scheduler. 51

Table 10 Agents’ task-sets 53

Table 11 Algorithms implemented in MAXIM-GPRT 64

Table 12 Agent’s characterizing elements. 64

Table 13 Message parameters 71

Table 14 Actions triggered by self-messages inside the agent 72

Table 15 Possible actions triggered by agent messaging 73

Table 16 Structures used for the scheduling mechanisms 74

Table 17 Functionality composing the scheduling mechanisms 74

Table 18 Levels of Utilization Factor for single agent. 83

Table 19 Levels of Utilization Factor for single single task. 83

List of Tables vi

Table 20 Possible input values for the task-generator tool 85

Table 21 Tasks parameters generated for the task-generator tool 86

Table 22 Features generated for the task-generator tool 86

Table 23 Deadline missed by FCFS in run x.x.x.1 (simulated time

10000 seconds) 88

Table 24 Deadline missed by RR in run x.x.x.1 (simulated time

10000 seconds) 88

Table 25 Improvements required for Local Scheduler. 96

Table 26 Extendible functionalities ofcSimpleModule 101

Table 27 Main features of the NED Language 102

A B ST R A C T

In the last decades, the Multi-Agent Systems (MAS) resulted in being one of the most relevant approaches fostering the development of systems per-forming distributed thinking and reasoning, automated/autonomous actions, and regulating component interactions in unpredictable and uncertain scenar-ios. Some practical examples are intelligent and pervasive systems (assistive monitoring and feedback in telerehabilitation), energy management, and en-ergy negotiation. Those application domains particularly involve three major characteristics: intelligence, autonomy and real-time behavior.

Although MAS are one of the major technological paradigms used to imple-ment such systems, they mainly address intelligence and autonomy but miss to comply with strict timing constraints. The compliance with strict-timing constraints (real-time compliance) is crucial for safety-critical applications op-erating for example in the healthcare, industry 4.0, and automotive domains. The main reasons for this lack of real-time satisfiability in MAS originate from current theories, standards, and technological implementations. In particular, traditional internal agent schedulers, communication middlewares, and nego-tiation protocols have been identified as co-factors inhibiting the real-time com-pliance. This manuscript provides an analysis of such MAS components and paves the road for achieving MAS capable to meet strict-timing constraints, thus fostering reliability and predictability.

Moreover, by studying possible adoptions of MAS in Cyber-Physical Sys-tems (CPS), it has been observed that in current MAS, the actual task execution is still delegated to traditional general-purpose scheduling algorithms running within the agent (local scheduler of behaviors). The main consequence is the incapability to enforce compliance with strict timing constraints (impossibil-ity of providing any guarantee about the system’s behavior in the worst-case scenario). Therefore, the adoption of MAS is hampered, excluding significant application scenarios such as “safety-critical environments". Another contri-bution presented in this manuscript is the schedulability analysis of various tasks-sets, feasible using real-time schedulers, on top of traditional general-purpose solutions. In particular, the study of deadline-missing rate occurring

List of Tables 2

in general-purpose setups is evaluated on a self-developed agent-based simu-lator (named MAXIM-GPRT) developed on OMNET++. The results strengthen the motivations for adopting and adapting real-time scheduling mechanisms as the local scheduler within agents.

1

A RT I F I C I A L I N T E L L I G E N C E A N D T I M

-I N G C O N ST R A -I N T S

Indice 1.1 Artificial Intelligence 3 1.2 Real-Time Systems 8 1.3 Contributions 11Enforcing the compliance of strict timing constraints in Artificial Intelligence applications is a fascinating subject which I always have considered worthy of study. To be able to understand such a challenge, the motivations, what it involves, and the benefits it can provide, it is necessary to introduce and analyze the main players: "The Artificial Intelligence" (AI) and the "Real-Time" (RT) theories.

�.� ���������� ������������

Inspired by human-like societies, the need of transferring “intelligence" to technological systems requires an in-depth knowledge of ourselves. To the limit of this understanding, the definition of intelligence at machine level has been provided by Alan Turing [90]:

“The capability of a machine of providing answers that would naturally be given by a man"

In other words: “to replicate human/animal level intelligence in a machine." Subject to intense debates, many animals could be additionally considered intelligent to some extent [19].

Furthermore, several definitions trying to frame AI have erupted since it became a relevant notion. The term AI is attributed to (i) systems that think like humans, (ii) systems that think rationally, (iii) systems that act like humans and (iv) systems that act rationally[115].

�.� ���������� ������������ 4

However, how is it possible for a slow, sometimes minuscule brain (whether biological or electronic), to perceive, understand, predict, and manipulate a world far larger and more complicated than itself?

Scientifically, it has been hypothesised that humans came into being roughly 2.5 million years ago. Ever since, constant leaps in evolution characterised their path. Around 10,000 years ago, the development of agricultural techniques brought the end of the nomadic lifestyle of certain populations. Furthermore, the movement from storytelling to literacy began 5000 years ago progressing to the outburst in the last couple centuries of novel ways to secure man’s ability of handling “expert" knowledge.

This brief thought suggests that depending on the capability of being and reacting, dealing with problem solving behavior, language, expert knowledge and application might result pretty simple. Hence, at the base of civiliza-tion’s evolution lies the capability of (i) sensing/acting on the surroundings, (ii) “moving" in dynamic environments, and (iii) developing basic knowledge. Such elements are necessary and sufficient for the maintenance of life and repro-duction, and have been studied for years from several view points in terms of foundation for seeing, learning, remembering, and reasoning.

In early 1947, the first manifesto of AI results in being the outcome of the research on “how much a machine can do for the higher parts of the brain", titled Intelligent Machinery [131]. Such a manuscript questioned whether it is possible to make a machine to think for you, presenting concepts and areas of interest that became central in the AI studies.

Highly ambitious goals have been set over the years for AI systems such as the explanation of human dynamics and choices, forecasting trends in multiple scenarios, understanding the animal world, and finally nature itself.

The objective of replicating the entire “intelligence/essence" of human in-telligence has been later disposed in favour of specialized sub-problems (iso-lated aspects of intelligence), such as ways to represent knowledge, natural language understanding, vision or even more specialized areas such as truth maintenance systems or plan verification [19].

Waiting for the day, when these tiles might all fall into place composing “truly" intelligent systems, we clearly speak of “imitating machines". Indeed, another “way" to identify if a machine can be considered intelligent is the Turing test [118], which requires a person to interact through a computerized interface with a possible human or machine. If the tester does not perceive the interlocutor as machine, then the intelligent system passed the test.

�.� ���������� ������������ 5

Designed to closely simulate human behaviour, learning processes based on mistakes-learning mechanisms constitute the most fruitful way of building the whole output layer by layer (final knowledge). Among the most fruitful appli-cations of AI techniques, it is worth to mention:

Learning/Education

A learning algorithm or system is able to build its knowledge over some data by repeating and overcoming mistakes. In particular, the elements character-izing a learning computer program are formed from experience on top of some class of tasks evaluated by performance metrics [80].

The “corrections" may be inducted by external stimuli on internal processes (triggered by the system experience), thus being then able to deal with a far greater range of contingencies. The learning processes can be classified as su-pervised [75], semi-supervised [149], or unsupervised [59].

Natural Language Processing

Dealing with complex expressions in natural language is a smooth and al-most effortless process for humans. Instead, generating an “understanding" (encoding-decoding) even fragments of whatever natural language implies huge challenges of an incredible complexity (e.g., coping with language and communication evolution) at the system’s level.

Knowledge retrieval, processing, and representation

Extensively used for storing data, databases considerably evolved to optimize features such as data-access time, readings, and security. However, the real challenge faced by AI consists in answering the question “what if the needed information is not stored but has to be derived/assumed/inferred/deducted?"

Perception Problems

Considerable attention has been given to enable machines to perceive their sur-roundings, exploiting sensors such as cameras, microphones, resistors and in-ertial boards. From these experiences, it has been learned that processing com-plex input requires the “understanding" of a large base of knowledge about the things being perceived. The point of the whole perception process is to produce a condensed representation to substitute for the unmanageably im-mense, raw input data. A classical example is the Simultaneous Localization and Mapping (SLAM) [45]. Hence, the multitude of data characterizing the surrounding (possibly unknown) requires a clear cut to be processed with a

�.� ���������� ������������ 6

reasonable trade-off between time and result.

Robotics

The physical control of mobile robots seems to require more automation rather than contributions from AI techniques. However, moving in unknown and dynamic spaces, understanding and dealing with cooperative/competitive en-tities require accountable intelligent contributions.

Indeed, a robot may enclose several of the above-mentioned AI areas. Mainly characterized by motion and problem-solving tasks, a given robot can face the surrounding, arranging sets of primitive knowledge and actions (e.g., wheels motion, camera readings, and a bunch of rules). Any action follows a plan-ning phase and possible task distribution. Recently, distribution and optimiza-tion techniques inspired by swarm intelligence have become increasingly pop-ular [16]. Strongly decentralized and inspired from behaviors such as social insects, flocks of birds, or schools of fish, the swarm intelligence guarantees several advantages over traditional techniques (e.g., robustness and flexibility).

Expert systems

Decision analysis enumerates the possible actions and outcomes and elicits preferences from the decision maker to determine the best course of action. Decision-making elaborates on preferences between outcomes. Such processes have been employed in strategical fields such as business, government, law, military, and medical [115]. In many cases, complex sets of simple rules may be sufficient to deduce information. However, dealing with imprecise, uncertain, or anecdotal requires increasingly complex organizational, representation, and learning mechanisms.

Incorporating utility information gives additional capabilities compared to pure inference systems. Additionally to the ability of making decisions, they can decide to acquire more information, and based on their value, calculating the sensitivity of their decisions to small changes in probability and utility assessments.

Usually, rather than making actual decisions, expert system research recom-mended actions more than “simply" providing opinions on matters of fact by using condition-action rules. For example, a common strategy in early medi-cal expert systems was to rank possible diagnoses in order of likelihood, and report the most likely. Unfortunately, missing the diagnosis of relatively rare cases, such strategy can be disastrous.

�.� ���������� ������������ 7 Combinatorial and Scheduling problems

An interesting class of problems is concerned about specifying optimal sched-ules, allocations, and combinations. The classical example is the problem of the travelling salesman, which has to find a minimum distance tour, starting from one of the cities to be visited and passing by each city exclusively once, and finally returning to the starting city. Another example is the problem of the 8-queens, which requires to place eight queens on a standard chessboard in a way that no queen is capturing any of the others (meaning to place at maximum one queen in any row, column or diagonal). Those two examples have been mentioned to enforce the possibly huge dimension of combination-s/sequences that might be elaborated to provide a solution.

Sometimes, these types of problems 1 can generate a combinatorial explo-sion of possibilities, thus exhausting the capacities of even considerably pow-erful computers.

Computational theorists classify this kind of problems’ difficulty based on the worst case scenario (time or number of steps) for achieving the result with the theoretically best method. Thus, according to the problem dimensions, the difficulty may grow linearly, polynomially, or exponentially.

Building complete intelligent systems leaving them loose in the real world with real sensing and action, provide anything less than a candidate to delude ourselves [19].

The verdicts produced by intelligent systems, mainly based on the concept that “the machine will provide any result sooner or later", would be quite worthless (in some cases dangerous) if not provided in/on time. In real-world applica-tions, occurred the massive adoption of heuristics2 trying to cut the response time and tailor the system on the related scenario/setup.

However, most systems developed for commercial purposes are expected to carry out extremely specific jobs with certainty, precision, and considerable speed. Such jobs often consist of repeating identical series of operations over and over again. Therefore, the employment of “pure" intelligent systems is still hampered (or not possible yet).

To cope with such needs, another category of theories and systems arose (which are particularly crucial in safety-critical systems [27]). Subject to an almost parallel evolution, without any form of influence from AI theories, the

1 Several of these problems (salesman included) are part of a class that computational theorists call NP-complete.

�.� ����-���� ������� 8

Real-Time Systems (RTS) dominated scenarios where timing reliability has to be fully guaranteed and empirical application are not enough.

�.� ����-���� �������

Computing systems that must react within precise time constraints to events in the environment are referred as RTS. The correct behavior of such systems depends not only on the value (output) of the computation, but also on the time the results are produced/delivered [24]. Since an increasing number of complex systems rely (partially or completely) on computer control, a delayed or unpredictable reaction could be useless or even catastrophic risking huge losses of money, or even people’ health or life.

Applications that mandatorily require a strict timing-reliability (e.g., in safety-critical scenarios) are: chemical and nuclear plant control, control of com-plex production processes, railway switching systems, automotive applica-tions, flight control systems, environmental acquisition and monitoring, telecom-munication systems, medical/healthcare systems, industrial automation, and robotic systems. Moreover, in the case of non-critical but still highly time-dependable system it can be mentioned: virtual reality applications, internet telephony, and desktop audio and video management.

Such systems received contributions from a plethora of research fields, each one with its own approach in terms of algorithmic solutions and real-time behavior. Since they all derive from different perspectives, when such worlds need to interact or be merged into comprehensive solutions, inconsistencies may arise when they are integrated into unified solutions.

Despite there is no much space for misunderstanding, many researchers, de-velopers, and technical managers have serious misconceptions about real-time computing, [127]. For example, although the term “real time" is clearly asso-ciated with the system capability of responding to external stimuli within a bounded amount of time, common erroneous interpretations depict systems “real-time compliant" as able to respond as fast as possible. Although RTS operate in the order of milli/micro-seconds, such assumption is incorrect, con-fusing computational speed with predictability.

Therefore, most of today’s real-time control systems are still designed using ad-hoc techniques and heuristic approaches. Control applications with strin-gent time constraints are frequently implemented by writing large portions of

�.� ����-���� ������� 9

code in assembly language, programming timers, writing low-level drivers for device handling, and manipulating task and interrupt priorities. Although the code produced by these techniques can be optimized to run efficiently, this ap-proach is inconvenient (difficult programming, code understanding, software maintainability, and code verification).

The major consequence of this approach is that the control software pro-duced by empirical techniques can be highly unpredictable. If all critical time constraints cannot be verified/guaranteed a priori and the operating system does not include specific mechanisms for handling real-time tasks, the system may apparently operate properly for a period of time, but it could collapse in certain rare, unknown, unclear, but possible situations.

In addition to classical faults due to code failures, hardware failures, and conceptual errors in the design phase, a real-time software may be subject to timing errors:

Definition 1. Timing errors may be caused by extra delays

intro-duced by scheduling and synchronization mechanisms, or by mis-alignments between the “real" time evolving in the environment and the internal system time representation.

As mentioned in Section 1.1, conventional intelligent systems focus more on the research of the actual value rather than strictly coupling it with the conception of time and dead-line. The first approach binding timing constraints and an intelligent system is the computer chess system developed by IBM named Deep Blue [33].

Its first version counted 216 chess chips, each one able to search about 1.6 2 million chess positions per second. So, the overall search speed was 50 -100 million chess positions per second. In the second version the efficiency improvements increased the speed per chip search to 2 - 2.5 million positions per second, which enabled defeating Garry Kasparov in the 1997.

Such positions were subject to an evaluation function implemented in hard-ware. On the one hand, this simplified the programming of Deep Blue. On the other hand, the consideration to add new features would have been a tricky decision. Hence, a “better" evaluation function may take too long to execute, slowing down the program to the extent that it plays more weakly.

More-�.� ����-���� ������� 10

over, in practical terms, the developers of Deep Blue explicitly defined such a possibility painful3.

From a timing perspective, chess games typically have a set of requirements on the speed of play, termed the “time control". For example, the Deep Blue -Kasparov games initially required at least 40 moves to be played in two hours. Failure to make the specified number of moves leads to forfeiting the game. The time control mechanism in Deep Blue is relatively straightforward. Before each search, two time targets are set:

(i) the normal time target, which is set to be approximately the time re-maining to the next time control divided by the moves rere-maining. In practice, a considerable time buffer is reserved, which allows for suffi-cient time to handle technical difficulties, as well as saving time for a possible “sudden-death" phase.

(ii) the target is the panic time, which is roughly one third of the remaining time.

If, at the normal time target, the situation is “normal", a time-out occurs and the current best move is played. There are a few conditions under which Deep Blue will go beyond its normal target into “panic time".

• The current best move has dropped 15 points or more from the score of the previous iteration. In this case, the search continues until either a new move is found within the 15 point margin, the iteration is completed, or the panic time target is reached.

• The best move from the previous iteration is in a potential “fail-low" state. It continues until either this state is resolved, or the panic time target is reached. If this move ends up dropping 15 points or more from its prior value, continue as in the previous case.

• A new move is in a potential “fail-high" state. Continue until this state is resolved, or the panic time target is reached.

These conditions are triggered frequently during a game, but it is quite rare to actually go all the way to the panic time target4.

3 Unfortunately the full evaluation function generator takes measurable wall clock time to run and download, and partial downloading was considered too complex to implement.

�.� ������������� 11

In the course of the development of complex systems (e.g., Deep Blue), many diverse design decisions can shape very different outcomes, still leaving room for questions related to the many alternatives left unexplored.

Considering the Deep Blue case, the fact that computation-failures are con-sidered as possible options, and that some time-bounds are defined “approx-imately" [33], raises serious concerns about how the compliance with strict timing constraints is approached in AI systems.

�.� �������������

This section briefly identifies and formalizes the central purpose of this work and the contributions that are addressed in this manuscript.

OBJECTIVE

Having reliable and predictable MAS is the goal we set up. In particular, it means enforcing the capability of complying with strict-timing constraints in MAS, thus enabling their application in safety-critical scenarios.

CONTRIBUTIONS

To achieve such a challenging objective required a study composed of several steps and the achievement of as many milestones. In particular, they are:

(i) the study of the state of the art producing an understanding of what and how things are, and what and how needs to be updated or redefined. (ii) the definition of real-time scheduling models, to be adopted and adapted

according to the MAS pillars.

(iii) the development of reservation based negotiation protocols, operating accordingly to the agent local scheduler, and

(iv) the employment of a communication middleware with bounded time delays.

The next chapter presents AI approaches and systems that tried to cope with temporal constraints. In particular, the agent-based view, base-cell of the

�.� ������������� 12

Multi-Agent Systems (MAS), is presented alongside the RTS pillars to support the understanding of the current state of the art and the still open challenges.

2

I N S I D E M A S & RT S

Indice2.1 MAS: an AI expression 13

2.2 RTS characteristics 16

�.� ���: �� �� ����������

Technological revolutions deeply changed customs within society, in which human beings are irrevocably coupled with uncountable interconnected elec-tronic devices and their cyber models. This process is still ongoing, providing new application scenarios, while constantly raising new scientific challenges in the research domains of AI, Internet of Things (IoT), and Cyber-Physical Systems (CPS). Along the years, the best AI expression suiting real-world sce-narios is the agent-based approach.

In a world where more than one behavior might often be appropriated in a given situation, the agent aims at being a human alter ego in its essence and interactions.

An intelligent agent can be rationalized as an autonomous entity observ-ing the surroundobserv-ing environment through sensors and possibly interactobserv-ing with it using effectors (see Figure 1). Self-developed or induced goals (both pre-programmed decision and dynamic ones) drive the agent choices while trying to maximize its performance. Such an intelligent agent is also able to extend/update its knowledge base, thus renewing its plans to achieve the desired goals [114].

The natural abstraction of Multi-Agent Systems (MAS), in ecological and societal terms, supports the robustness of their mechanisms and behaviors. For example, Zambonelli and Omicini in [145] assert the affinity of ant foraging and the agents’ mobility in finding information within a distributed P2P network, and the similarity of social phenomena like the information propagation in

�.� ���: �� �� ���������� 14

Sensor

Actuators

Perception

Modelling

Planning

Task execution

Motor control

Sensor

Actuators

reason about objects' behaviors

plan changes to the world

Identify objects

Monitor changes

Build maps

Explore

Wonder

(a)

(b)

Figure 1: (a) Functional decomposition; (b) Behavior-based decomposition.

social networks and routing algorithms. Social and natural phenomena with negotiation-based interactions [73] and social conventions [38, 97] have been exploited extensively shaping the multi-agent system paradigm. Moreover, it has also associated physical phenomena such as virtual gravitational fields to the orchestration of the overall movements of a vast number of distributed mobile agents/robots [91].

The complexity range of such agents is notably broad. Observing one or more agent communities operating in IoT and CPS scenarios can unveil an apparently unlimited potential. For example, the application domains that received notable contributions are healthcare [22,56,145, 148], smart environ-ments (e.g., office, home city [7, 11, 84, 113]), smart cities (e.g., mobility [56,

84], urban safety [145], water distribution [7, 85], transportation [22], and en-ergy [22,104,145]), industrial scenarios (e.g., manufacturing [7], workflow and process management [22, 145]), assisted living [11, 30, 32, 113], and telereha-bilitation [31].

�.� ���: �� �� ���������� 15

Related to specific or more general application fields, every programming paradigm has its own strengths relying on exposed peculiarities. The MAS paradigm is conceptually elegant and proposes a comprehensive set of fea-tures. Investigating primary studies, we identified and collected in Table 1

MAS’ characteristics recurring in several studies. Although scenarios and ap-plication domains might be different, the authors of the primary studies iden-tified features supported (either partially or fully) by the various adoption of MAS in IoT and CPS. Full support indicates that MAS provide means to satisfy such a feature, while partial support indicates that MAS’ contribution, although positive, has been assessed as unable to ensure the complete satisfac-tion of such a feature.

The proactiveness and the possibility of performing dynamically intelligent behaviors with a high degree of autonomy are the most important features of MAS. Furthermore, MAS resulted in being particularly appreciated in the case of failure handling or resource optimization where required [11]. Finally, although broadly appreciated, MAS autonomy and flexibility still generate minor concerns about possible evolution in undesired behaviors of inferences and plans.

Nevertheless, MAS are increasingly involved in concrete systems, such as the control of physical devices in smart environments (e.g., water provision-ing [85]), energy negotiation, management [146], and system security [148]. Moreover, in IoT and CPS solutions, the agents have been associated with real-time related services/tasks, representing a fascinating cross-domain class to be analyzed in more depth. For example, in "smart" and other relevant domains, several applications require features compliant with real-time-like contraints, such as sharing information [85], awareness of environmental changes [22], decision support [85], perception of provided energy [104], information shar-ing in manufacturer processes [7], security controls [148], and on time activities execution in production lines [81]. Such services are receiving increasing scien-tific attention, and the MAS, if extended with the above-mentioned real-time services, represent a notable overlap among the IoT and CPS systems.

However, current MAS fail in dealing with real-time properties. Indeed, they typically adopt best-effort approaches under which the system behavior in worst-case scenarios cannot be handled, nor guaranteed in advance. Ensuring real-time compliance would be a priceless milestone for agent-based solutions.

To understand what is meant by compliance with strict timing-constraints or real-time compliance, it is necessary to introduce the concept characterizing the RTS. The next section present the RTS pillars and what they involve.

�.� ��� ��������������� 16 Table 1: MAS’ feature supporting their adoption in IoT and CPS

Feature Contribution Source

Enable lightweight device coop. partial [11]

Increase dependability partial [7,81]

Increase interoperability partial [7,81]

Optimize energy consumption partial [113]

Enable repetability partial [81,113]

Facilitate development (various systems’ complexity) partial [85,142,145]

Reducing communication (Agent Migration) partial [11,142]

Facilitate understanding system model partial [84]

Enable self-healing partial [104]

Handling variability and resources scarcity partial [11]

Enabling self-adaption partial [145]

Simplify software development/extension partial [11]

Ensure robustness partial [104]

Facilitate components evolution and reuse partial [11]

Face unpredictable scenarios partial [145]

Support security (cyber and physical layers) partial [148]

Maximization of resources utilization partial [7]

Reduce redundancy partial [84]

Proactiveness and intelligent behaviors full [81,85,104,113,142,145]

Ensure Scalability full [7,81]

Reactivity full [104,113,142]

Social-able full [104,113]

Increase autonomy (e.g.: failures, resources) full [7,85,104,113,142]

Ensure modularity & encapsulation full [81,145]

Support contex awareness full [11,85,113,145]

Ensure flexibility full [81,85,113,145]

Increase systems integration full [7,81,113]

Support fault-tolerance full [84,85,148]

Enable high-level protocols and langs full [145]

Ensure reconfigurability full [7,81,145]

�.� ��� ���������������

Taken from [24], Figure 2 provides a quick representation of the speed rel-ativity. Hence, the velocity of a system is totally dependent on its operating scenario.

To better understand the timing errors (Definition1), the formalization of the terms real-time follows:

• time component: “the system’s correctness relies on the results’ quality

of the logical computation and on the time required to be produced".

• real component: “the system’s reaction to external stimuli must occur

�.� ��� ��������������� 17

Figure 2: Both the mouse (a) and the turtle (b) behave in real time with respect to their natural habitat. Nevertheless, the survival of fast animals such as a mouse or a fly can be jeopardized by events (c and d) quicker than their reactive capabilities. Copyright 2011 by Springer, "Hard Real-Time Computing Systems", Third Edition. Reprinted with permission.

and the system’s time (internal time) must be aligned and refer to the same time-scale.

No matter how short the average response time of a given system can be, without a scientific methodology, the compliance with individual timing re-quirements (in all the possible circumstances) cannot be guaranteed. In the case of computational activities with different timing constraints, average per-formance has little, if any, significance1.

Thus, common metrics such as speed and average performance have been iden-tified as irrelevant for RTS. Vice-versa, it is crucial to be able to investigate a given application at every stage (from design to testing), thus ensuring the following properties:

Timeliness: the results have to be correct both in terms of value and response time.

1 A short and meaningful story proposed by Buttazzo in [24] recite “There was a man who drowned crossing a stream with an average depth of six inches"

�.� ��� ��������������� 18 Predictability: the system must be observable and analyzable to predict the consequences

of any scheduling decision2

Efficiency: Most of RTS might run into small devices with severe constraints (e.g.,

space, weight, energy, memory, and computational power). Thus, opti-mizing the efficiency is essential.

Robustness: RTS have to be load-independent (e.g, no collapse in peak-load

condi-tions).

Fault-tolerance: Single hardware and software failures should not cause the system to

crash.

Maintainability: Modular RTS should ensure feasable and easy system updates.

Such features rely on the notions of deadline and Worst-Case Execution Time (WCET), which are the main characteristic differentiating RTS from non-RTS. In particular, they are:

Deadline: the maximum time within which a given task in a system must complete

its execution3.

WCET: the maximum amount of time a given task can take to complete its

exe-cution on a specific hardware/software configuration.

In critical applications, a result produced after the deadline is not only late but wrong! Depending on the consequences that may occur because of a missed deadline, a real- time task can be distinguished in three categories:

In safety-critical scenarios, a result given after its deadline can either be just late (no value/meaning) or incorrect and dangerous. For example, a delayed feedback in telerehabilitation scenarios might cause unnecessary patient dis-comfort or even jeopardize the rehabilitation process [31]. Therefore, RTS can be classified in three main categories:

Hard: tasks producing results after their deadlines may cause catastrophic

con-sequences.

Firm: the results produced by tasks missing their deadlines are useless for the

system, but do not cause any damage.

2 all timing requirements should be guaranteed off line, if not alternative actions must be under-taken to handle such exceptions.

�.� ��� ��������������� 19 Soft: the relevance of the results produced by tasks missing their deadlines

decrease monotonically (in most of the cases). Although such results can be useful till certain extents, they cause a performance degradation in the system.

Furthermore, when a system is characterized by a composition of hard, firm, and soft features, specific policies and guarantees has to be provided both off-and on-line.

3

M A S & R E A L-T I M E C O M P L I A N C E

Indice3.1 Traditional approaches 20

3.2 Analysis of MAS Pillars 27

3.3 MAS and Real-Time: open challenges 45 3.4 MAS and Real-Time: required intervention 46

�.� ����������� ����������

Considering the fundamentals characterizing MAS and RTS introduced in Section2.1and2.2, this section presents what has already been done attempt-ing at realizattempt-ing MAS compliant with strict-timattempt-ing constraints.

Medical, industrial, and automotive systems are receiving contributions from a plethora of research fields, each one with its own approach in terms of algo-rithmic solutions and real-time behavior. Since they derive from very different perspectives, when MAS and RTS worlds need to interact or be merged into comprehensive solutions, the existing misconceptions about each other’s area may generate inconsistencies driving to an “ill-formed" solution.

A low level of predictability is typically the major consequence when ad-hoc empirical real-time techniques are wrapped by an agent-based system. The system may operate properly even though all critical time constraints are not verified a priori. This would occur, for example, when the operating system does not include specific mechanisms for handling real-time tasks and services. Nevertheless, under rare and unpredictable circumstances, the system may col-lapse without any clear cause. Considering the possibility of critical failures of the MAS operating in the above-mentioned domains, the consequences of such sporadic failures can be catastrophic, causing physical injuries, environmental damage, and hence financial loss.

�.� ����������� ���������� 21

Current MAS frameworks provide generic and extendible functionalities supporting the standardized development of agent-based platforms. Kravari et al. [77] survey MAS frameworks enumerating and detailing the most rele-vant twenty-four. Most of them run on general-purpose and mobile operating systems (OS) such as (Linux, Mac OS, Windows, or Android), with a few pow-ered by a Java Virtual Machines (JVM) which can claim to be cross-OS. The combination of multi-purpose or mobile OS - MAS as is, cannot guarantee the respect of timing constraints due to “missing" rules and mechanism. Despite the broad range of compatibility, none of the traditional MAS is meant to run on a proper real-time operating system (RTOS).

�.�.� Case study: eHealth and telerehabilitation

eHealth and telerehabilitation are domains in which the enforcement of the timing constraints is crucial. Nevertheless, a plethora of agent-based solutions have been provided over the years.

It is worth to recall that a recovery period (usually 6-8 weeks) can follow an acute trauma (e.g., fall of a fragile elder) or surgical intervention (e.g., joint re-placement). This is the most critical period for patients who are luckily still not chronic (weak phrasing). Nevertheless, the scientific community provided tai-lored solutions to relieve pain and maintain or slowly recover physical and/or mental capabilities. Indeed, telerehabilitation targets not only the physically impaired [3], but also cognitively impaired patients [1, 36] which are on aver-age 76 years old (56 ⇠ 91) [14].

The broad range of available technologies enabled the development of vari-ous techniques and approaches. The main category of applications they have generated are based on:

• video analysis - mostly involving stereoscopic cameras and image process-ing algorithms;

• wearable technology - mostly focusing on embedded devices and inertial sensors supported by kinematic algorithms;

• robotics - mostly focused on in monitoring and motivation involving hu-manoids or basic robots;

• distributed sensing - mostly involving monitoring and reasoning exploit-ing environmental sensors;

�.� ����������� ���������� 22

• gamification - mostly involving coaching techniques and persuasive tech-nologies.

In particular MAS have been extensively adopted in rehabilitation solutions. Specialized models and tools are ment to cope with physical and cognitive reha-bilitation or providing at different levels and stages.

Physical rehabilitation

Roda et al. [110] treated elderly motor impairment employing specific de-vices to control patients movements. Exploiting typical Ambient Intelligence (AmI) techniques, they proposed a context-aware system integrating diverse devices. Thus, the MAS can react accordingly to the context, supporting phys-iotherapists in developing new therapies or precisely tailoring already existing therapies to patient needs. Using a Microsoft Kinect, all motor tasks performed by a patient during the rehabilitation are controlled. Moreover, by employing third-party sensors, they were able to gather oxygen level, posture, gesture, stress, BPM, and mood. Combining such values, indexes such as pain or fa-tigue could also be detected. Physiotherapists expressed vague rules, such as how the use of linguistic values, rather than numerical values, provide a natural way to express how transitions should be. A specific agent equipped with an inference engine elaborates such data while respecting isolation and privacy requirements.

Performing cardiac rehabilitation during its second (sub-acute) and third (in-tensive outpatient therapy) phase, a large amount of cardiac data (complex and arguably) has to be analyzed in a short period of time. The system proposed by Mesa et al. [96] provides support in data analysis, event classification, and visualization. Such an agent-based system has been involved in rehabilitative tests such as (i) walking on a treadmill at different speeds and with different slopes; (ii) cycling on a stationary bike at different speeds; (iii) upper body workout; and (iv) lower body workout.

Data and context awareness is considered paramount to establish actual col-laboration while interacting with remote participants. Dealing with rehabilita-tion systems magnifies this challenge. Hence, for both cognitive and physical rehabilitating users, the information awareness is a crucial element to provide patients with a rehabilitation path as tailored as possible [130].

In the context of Upper Limb Rehabilitation (ULR), Rodriguez et al. [111] proposed an agent-based system to customize exercises to assist different

pa-�.� ����������� ���������� 23

tients providing a bespoke ULR. A noteable peculiarity of such a system is the context-awareness, which enables run-time adaptability. Hence, the system “performs" three abstract concurrent tasks: (i) while the patient is executing the exercise for the upper limb, the movements are recorded and monitored; (ii) analyzing specific patientparameters (e.g., BPM, skin conductance), an agent is in charge of defining the level of stress/fatigue; (iii) the agent behaving as a “virtual therapist" adapts ULR’s parameters such as number of repetitions and

target area limits according to the current level of stress.

Felisberto et al. [49] developed a MAS that recognizes human movements, identifies human postures, and detects harmful activities in order to prevent risk situations (e.g., sudden diseases and falls). The authors exploited wireless sensor nodes and energy harvesting technologies to realize a wireless body area network (WBAN). On top of that, an intelligent agent constantly analyzes possible profiles variations, aiming at identifying physical and posture deteri-oration causing accidents.

Robotic manipulators have also been employed in agent-based solutions. Trainees’ learning phases may be supported by formalizing and enhancing the precision and the input to be understood [2]. Relevant contributions have been provided to the interaction between therapist, trainee, and patient.

In telerehabilitation scenarios, drugs assumption correlated to a highly dy-namic environment can be a recurrent situation. Mutingi et al. [98] presented an agent-based decision-making solution for drugs delivering. The bio-physiological signals the authors took into account are blood-pressure, BPM, and respira-tion. Elaborating the combination of such parameters and drugs therapy may provide important indications to the medical staff about the patient and the pathology’s evolution. Other benefits provided by this solution are staff work-load reduction, increased resource availability, facilitation of understanding patients requirements, and data collection.

Cognitive Rehabilitation

In the scenario of cognitive rehabilitation, Abreu et al. [1] proposed a set of 3D games to rehabilitate neuropsychiatric disorders. They proposed an automatic agent-based control to facilitate the management of the software processes while the patient is playing.

Known as “the silent elderly epidemic", the Acquired Brain Injury (ABI) re-quires rehabilitation practices such as visuospatial, memory, functional

com-�.� ����������� ���������� 24

munication, language, attention, and comprehension training [112]. Roda et al. [109] designed an agent-based system to (i) support the execution of the above-mentioned ABI related therapies, and (ii) monitor/evaluate the per-formed activities and patient’s state (e.g., stress, emotional state, BPM, and oxygen level).

Smith et al. [126] proposed another agent-based solution for functional reha-bilitation involving gamification. In an environment away from rehareha-bilitation centers, such a solution promotes a continuous, fun, and stimulating rehabili-tation. Such “games" have to carefully consider a higher number of variables (e.g., incorporating expertise and motivational capacities of rehabilitation prac-titioners). Thus, they result in being more complex than the ones offered off the shelf which are typically too physically and cognitively challenging for re-habilitation patients. Information about patient compliance and progress are collected and made available to the healthcare specialists for further analysis and considerations. Moreover, the gamification technique has been exploited, seeking for an enhancement of the engagement, while performing patient mon-itoring (what?) and promoting smart learning mechanisms [82].

Other proposed solutions

Providing a platform for interactive learning, Su et al. [128] developed an ontology defining vocabulary, entities and their relationships in rehabilitation medicine. Exploiting an inference engine, existing data can reveal new knowl-edge having an “asserted model" as input and “inferred model" as output. An-other example of agent-based reasoning is presented in [20]. The authors faced two main challenges: (i) scalability - by distributing the reasoning on mobile devices, and (ii) penalization by supporting medical staff with a graphical application, simplifying the definition of temporal patterns of physiological values. Liao et al. [83] addressed reliability and security of an agent-based platform for telemonitoring.

Finally, Lai et al. [79] proposed a study involving a community scenario rather than the conventional single patient scenario. The authors evaluated the use of rehabilitation techniques for the post- or (not and?) chronic-stroke survivors involving video-conferencing solutions. In conclusion, the authors praised efficacy, feasibility, and acceptability of telerehabilitation in community-dwelling stroke clients, recording improvements in both physical and psycho-social wellbeing.

�.� ����������� ���������� 25

Concerning the contributions presented above, it is possible to notice a recur-ring architectural composition, which is schematically represented in Figure3.

Technological Conventional MAS ad-Hoc solutions Reasoning (Analysis, Planning Solving) Coaching, Monitoring Sensing Mobilitation Therapy Activities Tasks Steps H u ma n t h e ra p ist H u ma n t h e ra p ist

Figure 3: Generic structure of MAS solutions for rehabilitation.

The characteristic shared by all those agent-based systems, is that MAS only appear in the “higher levels" of every system, delegating to ad-Hoc or propri-etary solutions the tasks performed by embedded and dedicated hardware (e.g., sensing and mobilization). For example, in [96] and [49], the only ele-ments exploiting agents are the data handler, visualizer and alert manager. In other contributions, such as [110], references or details about how the agents get information from wearable sensors or embedded devices are often missing or omitted. The lack of “agentification" of the embedded and wearable sys-tems is a con-cause hampering the MAS adoption and limiting their benefits.

Moreover, to better understand what is hidden behind the attempts of cou-pling MAS and ad-Hoc embedded systems, it is worth to mention the studies conducted by Calvaresi et al. [28]. The authors proposed a study involving a mobile robot composed of a Pandaboard, a Discovery STM32, two DC mo-tors and a camera. The MAS (running on the Pandaboard) has been realized using Jade [13], and was in charge of performing all the dynamics related to vision and intelligent planning. The robot’s motion was managed by an ad-Hoc non-MAS system running on the Discovery board. Such an element and its functionalities have been wrapped in one of the agents running on the main board, implementing a custom communication protocol (see Figure4).

Such a best-effort solution identified by the authors has been “forced" by the impossibility of running MAS and JVM on the STM32, which is due to

�.� ����������� ���������� 26

Robot Referee

Scout

PathSetter Logger DriveMeCrazy

Reader Referee AirCamera Vocalist REF1 REF2 AC1 S1 S2 S3 PS2 S5 DMC2 PS1 DMC3 RED3 RED2 RED1 DMC1 V1

Figure 4: Agent framework, architecture and interactions.

its limited resources and the incompatibility of JVM (and so Jade) with Erika RTOS [47].

Another example is the study proposed by Julian et al [70]. The authors pro-vided an extension of a method named “Message" for developing a MAS purs-ing real-time compliance. Accordpurs-ing to their analysis, the previous method was not sufficient to guarantee the aimed real-time requirements for the fol-lowing reasons:

• the protocol operates in a framework non-meant for real-time scopes; • its low-layer required an ad-hoc design tailored for any specific situation; • several extensions were required to incorporate all the temporal aspects; • diverse criticality had to be considered.

The proposed methodology introduced the adoption concepts such as (WCET) and schedulability analysis, trying to cope with the overall process of devel-oping a real-time MAS. Although they have foreseen important aspects to be

�.� �������� �� ��� ������� 27

included in such a process, a complete framework matching all the required features is still missing.

�.� �������� �� ��� �������

Elaborating on the presented MAS solutions it has been possible to identify the fundamental elements characterizing a MAS that are: agent internal sched-uler, communication protocol, and negotiation protocol. Unfortunately, upgrading these elements individually is not enough for providing the expected real-time compliance. The following sections deltail the current state of the MAS pillars.

�.�.� Local scheduling in agent-based frameworks

Kravari and Bassiliades [77] proposed a detailed and comprehensive study of multi-agent frameworks (referred as Agent Platforms). However, the notion of scheduling appears only to refer to mechanisms that distribute and organize tasks and resources among the agents within a specific platform. By doing so, they took for granted the behavior execution and the compliance with the agreements stipulated during the negotiation phase. Such an assumption is naively optimistic, thus resulting in being unacceptable for safety-critical applications [27]. In the case of a telerehabilitation system, a delayed, wrong, or miss-aligned (in terms of content - time) feedback may cause severe injuries to the patient [31]. Nevertheless, almost all the agent-based platforms present and have implement at least one local scheduler. Table2collects them detailing programming language, platform purpose (where GP is general purpose, M is Modeling, and S is simulations), status (where A is Active, N is inactive, and U is unclear), last update (according to the last platform release or push in the official repository), and finally the agent’s scheduling algorithm.

All but two agent platforms have implemented specific schedulers. Al-though it provides a default event-driven mechanism to process the agent be-havior, the first exception is NetLogo, which declares that no particular sched-uler is implemented. The second is Cormas which, differently from the pre-vious one, if no custom/Ad-Hoc scheduler is provided, the behaviors are not executed (nothing in the system would happen). Allowing the platform users to directly implement their version of a behavior scheduler ensures a high flex-ibility. Hence, not only pure algorithms are admitted, (e.g., heuristics such

�.� �������� �� ��� ������� 28

Agent Programming Platform Status Last Scheduling

Platform Language Purpose Update Algorithm

Jade Java, GP A Jun 20171 Non-Preemptive

.NET (via add-ons) RR (FCFS)

Cormas SmallTalk M, S A Aug 20172 No default scheduler

(nothing happen) Swarm Java, Objective-C M, S U Oct 20163 Event-driven

(Priority Scheduling, FCFS)

Gama Java M, S A/N Jul 20174 Priority Scheduling

MASON Java GP A - Event-driven (Priority Scheduling) Jason AgentSpeak GP A (?) Aug 20175 RR

MaDKit Java GP A Jul 20176 FCFS

NetLogo Logo Dialect M, S A Aug 20177 No default scheduler

(nothing happen) RePast Java, Python, M, S A Sep 20168 FCFS

.NET, C++, ReLogo, Groovy

Jadex Java GP A Mar 20179 FCFS

(1) https://goo.gl/TKGqT6 (2) https://goo.gl/9sxKtt (3) https://goo.gl/WYJAK2

(4) https://goo.gl/USVVbe (5) https://goo.gl/Wtbm5T (6) https://goo.gl/ysJZRH

(7) https://goo.gl/kngRWj (8) https://goo.gl/yDsqyH (9) https://goo.gl/ZK7fAf

Table 2: Brief overview of the major agent platforms.

as RR, random selection, less workload first, early starting time first) but the custom mix development of the one mentioned above is also encouraged [37]. MaDKit, RePast, and Swarm implement the classic FCFS, GAama and Ma-son [88] implement a type of priority scheduler (e.g., SJF-like), Jason imple-ments an RR applied to structured behaviors, and finally Jade impleimple-ments a non-preemptive RR. The Jason and Jade’s implementations of RR result in be-ing FCFS of intentions [17] in the first case, and of behaviors in the second. They are eventually treated like single entities. Aiming at emphasizing the safety-critical systems point of view, an analysis of those algorithms is presented below and organized as non-priority and priority schedulers.

�.�.�.� Analysis of non-priority local schedulers in MAS

The FIFO and RR scheduling algorithms are two of the most known algo-rithms which inspired a multitude of variants. On the one hand, FIFO (also referred as FCFS) executes tasks in the exact order of their arrival (according to their position in the ready queue). The absence of preemption or re-ordering in

�.� �������� �� ��� ������� 29

this mechanism allows to classify the FCFS as “the simplest scheduling policy with minimal scheduling overhead". On the other hand, RR is mainly appreci-ated for its fairness, playing an important role in general-purpose applications, and prevention from tasks-starvation. Its mechanism is based on the concept of slicing the tasks’ computing time on the processor in equal time-quantum. Thus, the tasks in the ready queue are cycled to get the processor. If a running task is completed, the processor directly computes the next one; otherwise, it saves the task status and puts it back in the ready-queue before computing the next one (context switch).

Given this conceptually simple mechanism, minor adjustments are enough to make it suitable for handling a structured queue of “tasks". A practical ex-ample, showing how Jason revisited the RR scheduler, is presented in Figure5. Such a platform is characterized by the adoption of the “Beliefs, Desires, and Intentions" software model (BDI) [17]. Thus, simple actions compose a plan which aims at satisfying a desire according to the agent’s beliefs and knowl-edge. Assuming to have an agent with multiple and concurrent intentions, the way Jason applies the RR is to execute one action from the plan at the top of the plans stack, composing one intention. At completion, the next action scheduled is the first on top of the actions-stack of the next intention. Referring to Figure5the scheduling is: P1(A1), P2(A1), P3(A1), P1(A2),P2(A2), and so

forth. It is worth to note that the second action of Plan 1 is scheduled only after the execution of at least one action per plan. Moreover, the concept of time-quantum has been overridden by the actual duration of the selected action. So, the time-quantum actually coincide with the computational time required by the currently running task. Finally, this mechanism is repeated for all the in-tentions owned by the agent. In case a new intention is generated, it is placed on the top of the queue.

This simple mechanism cannot be implemented/applied in real-time oper-ating systems because of the long waiting time and significant response time, which has to be recalculated for any new task arrival [141]. The latter, given its complexity due the dependency from the queue characteristics, is too com-plex to be actually considered feasible at run-time. Therefore, in-light of these factors, the risk of missing deadlines (not taken into account at all by the algo-rithm) might dramatically increment, thus degrading system performance and compromising its reliability and safety. Nevertheless, tuning the parameters as proposed in [141] leads to minor improvements, which are still not enough the breach into the world of the real-time systems.

�.� �������� �� ��� ������� 30 A c ti o n s In te n ti o n s Pl a n s Belief Agent Schedule: A 1 A 1 A 1 A 2 A 2 A 2 A 3 A 3 Ag 1 B 1 A 1 A 2 A 3 A 1 A 2 A 3 A 1 A 2 I 1 I 2 P 1 P 2 P 3 I 3

Figure 5: Jason’s implementation of RR scheduling: A graphical representation.

In the Jade platform, the agent tasks are referred to as “behaviors", which can be primitive or composite [27]. They might be compared to the roles played by the actions in Jason. The most relevant for the purpose of our study are: Primitive behaviors:

SimpleB.: an extendable basic class; CyclicB.: a behaviour performing actions

repeatedly, reactivating itself after its execution is completed. It stays active as long as its agent is alive; TickerB.: a periodic behavior which unlike the CyclicBehaviour is re-executed after a set time (customized activation period);

OneShotB.: an instance can only be executed once along with its agent

life-cycle; WakerB.: it allows defining the activation time (delay from the agent life-cycle start); MsgReceiverB.: it is triggered if a timeout expires or a specific type of message is received.

Composite behaviors are enabled by a complex combination of primitive behav-iors:

ParallelB.: it enables the parallel execution of children behaviors allowing the

definition of the termination conditions: it terminates if all, n, or any child is completed. SequentialB.: it executes its children behaviors consecutively and terminates when the last child is terminated.

To handle such behaviors, Jade proposes another customization of the RR algorithm, called non-preemptive RR [66]. However, the reference to the term "Round Robin" is inappropriate since preemption is not admitted and, conse-quently, time-quantum varies from task-to-task (i.e. the computational time of the running behavior). Therefore, the non-preemptive RR turns to operate

�.� �������� �� ��� ������� 31

like a classic FIFO/FCFS which treats both simple and composite behaviors as “atomic task". The only variant is that when the action method of a behavior can return true (it is removed from the list of “active behaviors") or false (it is appended back in the ready queue).

Jadex is a Jade-based platform relying on the BDI notion [18] and based on four Jade elements which operate concurrently on the internal data-structures of the agent. The message receiver listens for ACL messages from other agents creating corresponding message events. The timing behavior releases the events on the timetable, appending them to the list of events to be dis-patched. The dispatcher adopts goals by placing them on the intention stack and selecting plans to be handled from the event list. The selected plans are subsequently executed step-by-step by the scheduler (which also implements the plan supervision).

Implementing the functionalities into separate behaviours allows a flexible be-havior replacement with custom implementations (e.g., alternative schedulers and BDI implementations). However, the dispatcher is responsible for select-ing plans to handle events and goals inside the agent, thus facilitatselect-ing reactive and proactive behavior. It also manages the interrelation between plan in-stances and goals. The dispatcher cyclically removes the next entry from the event list, checks if a goal is associated with the event, and then creates the applicable plans list (APL) for the event. When a goal is finished (success or failure), the owner of the goal will be notified. For a failed goal, the dispatcher may choose another plan for execution depending on the BDI flags of the goal. The scheduler executes the ready-to-run plan instances one at a time, and, step by step, applying an FCFS scheme. In each scheduling cycle, the first plan in-stance is removed from the ready list, and then a single step is executed. The scheduler waits until the plan step finishes or an error occurs. Afterwards, it checks if any of the associated goals are already achieved. At the last step of the plan, the plan instance is removed from the agent.

The schedulers implemented in Jade (non-preemptive RR) and Jadex (FCFS) are essentially extensions of FIFO and thus, are not suitable to provide strong real-time guarantees.

For example, (i) it has no means to handle task priorities, (ii) the schedula-bility under FIFO can only be guaranteed for systems with a considerably low utilization factor1 and with uniform period ranges, (iii) response time has to

�.� �������� �� ��� ������� 32

be recalculated for any new task arrival (unsustainable), and (iv) waiting and response time are affected by the tasks set features even then in the RR case.

Although FIFO guarantees simplicity and fairness (which can apply to general-purpose but not for the real-time systems), the real-world applications often operate under unfavorable conditions and high task-set utilization. Thus, in the best hypothesis, FIFO can only be considered a viable option for “soft real-time" systems. However, Altmeyer et al. [9] revisited FIFO scheduling altering its operating conditions to increase its predictability and improve its real-time performance. They provided a schedulability test for FIFO with and without offsets. Moreover, studying a case with strictly periodic tasks and offsets, they proved the competitiveness of such a scheduling policy when pre-dictability and simplicity matter. Finally, two significant advantages can be achieved by enforcing strictly periodic task releases and adding offsets: (i) per-formance limitations are mitigated and the number of schedulable task sets is increased (even in the case of high utilization rates and task-sets with har-monic or loosely-harhar-monic periods, and (ii) defined by the order of job arrivals, a unique execution order is enforced, thus simplifying validation and testing.

To overcome some of the real-time limitations introduced in MAS by RR, FCFS, and their customization above-mentioned, the priority schedulers have been introduced.

�.�.�.� Analysis of priority local schedulers in MAS

The class of priority schedulers is based on assigning a priority to all the tasks in the task-set which discriminates their position in the ready queue and so their turn to get the CPU. Usually, tasks with higher priorities are carried out first, whereas tasks with equal priorities are treated on the FCFS basis. A general example of a priority-scheduling algorithm is the shortest-job-first (SJF) algorithm. There are two main types of priority algorithms:

• The fixed priority, which schedules general-purpose systems by assigning a “priority-based" value to the tasks offline. Then, the dispatcher sorts them by relevance and occasionally executes the first in the ready queue; • The dynamic priority, which has similar mechanisms, assigning priority depending on the systems’ behaviors at run-time. Thus such values can change over the time.

According to the developers, the Mason platform is not yet a distributed toolkit. It requires a single unified memory space, and has no facilities for