ALMA MATER STUDIORUM - UNIVERSITÀ DI BOLOGNA CAMPUS DI CESENA

SCHOOL OF ENGINEERING AND ARCHITECTURE

MASTER'S DEGREE IN COMPUTER ENGINEERING

ICT Security: defence strategies against targeted attacks

Thesis in

Progetto di Reti di Telecomunicazioni LM

Supervisor

Prof. Franco Callegati

Co-supervisor

Ing. Marco Ramilli

Presented by

Daniele Bellavista

Third Session

Contents

1 Introduction 1

2 Cyber Attacks 3

2.1 Malware . . . 3

2.1.1 Types of malware . . . 3

2.1.2 Malware infection techniques . . . 4

2.2 Opportunistic and targeted attacks . . . 6

2.2.1 Opportunistic attacks . . . 6

2.2.2 Targeted attacks . . . 7

2.3 Cyber attack process . . . 8

2.3.1 Howard’s Common Language: a first attack process taxonomy . . . 9

2.3.2 Cyber warfare taxonomy and military doctrine . . . . 9

2.3.3 AVOIDIT an extensible taxonomy . . . 10

2.3.4 Kill chain for targeted attacks . . . 11

2.3.5 Modus Operandi for industrial espionage and targeted attacks . . . 12

2.4 Attack process details . . . 12

2.4.1 Information Gathering . . . 12

2.4.2 Attack vector . . . 13

2.4.3 Entry point infection and propagation . . . 14

2.4.4 Action on Objectives . . . 14

3 Methodologies for attack prevention, detection and response 15 3.1 Access Control Models . . . 16

3.1.1 Discretionary Access Control . . . 16

3.1.2 Mandatory Access Control . . . 16

3.1.3 Role Based Access Control . . . 17

3.2 Firewalls . . . 17

3.2.1 Packet Filters Firewall . . . 18

3.2.2 Application Layer Firewall . . . 19

3.3 Intrusion detection and prevention systems . . . 19

3.3.4 Intrusion prevention capabilities . . . 24

3.4 Intrusion Response Systems . . . 25

3.4.1 Taxonomy for Intrusion Response System . . . 26

3.5 Vulnerability Assessment . . . 28

3.6 Malware analysis . . . 29

3.6.1 Dynamic analysis information . . . 30

3.6.2 Dynamic analysis techniques . . . 30

3.6.3 Dynamic analysis implementation . . . 33

4 Defence strategies against targeted attacks 35 4.1 A real targeted attack . . . 35

4.2 Why did defence systems fail? . . . 37

4.3 WASTE: Warning Automatic System for Targeted Events . . 39

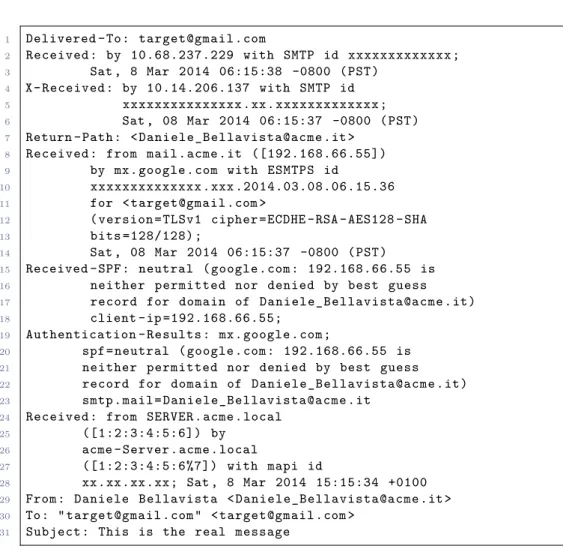

4.3.1 Email-driven attacks detection . . . 45

4.3.2 Executable and documents analysis . . . 48

4.3.3 Network communications . . . 51

4.4 HAZARD: Hacking Approach for Zealot Advanced Response and Detection . . . 51

4.4.1 HAZARD Roles . . . 52

4.4.2 Incident Analysis . . . 53

4.4.3 WASTE warning analysis . . . 54

4.4.4 WASTE issue management . . . 59

4.4.5 Vulnerability Assessment . . . 59

4.4.6 Targeted attack evaluation . . . 61

4.4.7 Targeted attack test . . . 62

5 Defence strategy application 67 5.1 Defence against and mitigation of cyber attacks . . . 67

5.1.1 Lastline . . . 67

5.1.2 Incident monitoring, evaluation and mitigation . . . . 68

5.1.3 Defence against targeted attacks . . . 71

5.2 Vulnerability Assessment . . . 72

5.2.1 OpenVAS . . . 72

6 Conclusion and Future Research 73 6.1 Future research . . . 74

Bibliography 75

List of Figures 79

Chapter 1

Introduction

In March 2013, The Guardian published an article with a shocking title: "Is 2013 the Year of the Hacker?". It states that 1.5 million people are affected by cyber attacks every day, at an increasing rate, and that even industry giants like Facebook or Dropbox have been hit by cyber attacks.

Similar articles have become common in international and national newspapers, presenting cyber crime, often associated with the terms hacker or hacking, as a rising and dangerous threat that may hit any person, society or nation. Governments and industries are also showing a rising awareness of security risks. Investment in cyber security defences is growing, through the promotion of security research and bug hunting and through the development of new standards.

The current cyber defence race is justified by the continuous cyber attacks that have been carried out on a massive scale since the Morris worm. Although the most famous cyber security event of 2013 was the global surveillance disclosure, when Edward Snowden leaked several top secret documents, several cyber attacks were performed during that year, for instance:

• Spamhaus attack: the second largest DDoS attack ever. Nine days of DNS reflection traffic with spoofed source addresses, starting from March 18th (the record for a DDoS attack goes to the CloudFlare attack, dated February 10th, 2014, with 400 Gbps).

• Department of Energy breach: performed in July, this data breach was possible by exploiting backdoors installed after a previous attack performed in February.

• Colombian Independence Day Attack: on July 20th, 30 Colombian government websites were defaced or shut down.

• New York Times Attack: the Syrian Electronic Army targeted the New York Times’ website in August 2013.

• Adobe data breach: apart from the Snowden leak, it was the most famous data breach of 2013. In September, a successful attack took millions of user credentials and, more importantly, the source code of some Adobe products.

• Data-Broker botnet: in September, a botnet was discovered in data-broker servers. The malware remained undetected by detection systems for over a year.

The list could be expanded, and not only with industrial giants or governments, but also with average network users infected by malware, because operating systems and software like browsers and PDF readers are constantly analyzed to find exploitable bugs. Moreover, the massive diffusion and connectivity of smartphones expanded the security context to both mobile OSs and applications. One notorious example is WhatsApp, whose protocol has been hacked several times.

The increasing rate and scope of cyber attacks can be attributed to the evolution of computer technology, especially where internet connectivity is concerned. Cloud computing and BYOD solutions, for instance, enlarged the circle of trust, creating situations that widen the attacker's entry points and make the defence work more difficult.

My work, however, doesn’t concern the study of new security solutions in the context of new technologies, but the study of strategies against targeted attacks, which differ from normal cyber attacks. While it may seem that cyber crime simply followed the technological evolution, the cost reduction of resources and software development and the spreading of information led to new conceptual scenarios like hacktivism, industrial espionage and cyber warfare, where the attacker has a specific target to compromise. Strictly speaking, they are not new scenarios, but the modern context enlarged the actor set, by bringing targeted attacks within the reach of a wider range of people.

Targeted attacks represent the major threat against companies, governments and military organizations. In the last few years, the term targeted attack started to be used, and it is now becoming one of the major topics in security communities. Many security solutions claim to offer protection against targeted attacks, and academic and professional researchers have started to study the problem as well as approaches to detect and counter targeted attacks.

This thesis aims to analyze the differences between targeted and opportunistic attacks, to examine existing defence approaches and to propose some extensions for dealing with targeted attacks. The method is then applied in the real world, by performing analysis and mitigation of cyber attacks carried out against an Italian company, which I will refer to as ACME Corporation.

Chapter 2

Cyber Attacks

This chapter describes what a cyber attack is and how it’s performed, by analyzing existing taxonomies and common attack scenarios. Then opportunistic attacks and targeted attacks are compared, highlighting the main differences between them.

2.1 Malware

A malware is any file containing executable code, such as binary files, scripts and documents with active content (e.g. PDF), that performs malicious actions. Malware is used in cyber attacks, with various motivations (see section 2.2). In this section, I describe types of malware and techniques commonly used by malicious programs.

2.1.1 Types of malware

Malware programs have been classified based on the behavior observed in the wild[10]. The categories aren’t mutually exclusive: modern malware has a high level of complexity and exhibits multiple different behaviors. The following list describes features and strategies that can be seen in malware programs:

Worm - A worm is an independent program that can propagate itself to other machines, usually by means of network connections. The Morris Worm, released in 1988, was one of the first computer worms capable of spreading over the internet[42]. Nowadays worms exhibit complex behavior: for instance, the Conficker worm propagates itself using flaws of Windows systems and creates a botnet used for malicious activities. Conficker is also capable of updating itself to newer versions[32].

Virus - A virus is a piece of code incapable of running independently. Viruses, first developed between 1981 and 1983[1], don’t propagate through the network; rather, they infect files in network shares or on removable media to spread to other computers.

Trojan Horse - A trojan horse is a program that executes a benign task, but secretly performs malicious actions[1]. Trojan horses have been reported since 1972, but they are common even now in the form of browser plugins, download managers, and so on[10]. A Remote Access Trojan (RAT) is a trojan that installs a backdoor to allow remote control of the system.

Spyware - A spyware is a program, incapable of replication, which passively records and steals data from the infected host. A spyware may steal usernames and passwords (by using a passive keylogger), email addresses, bank accounts, software licences, and so on[1]. A less dangerous kind of spyware, called adware, steals market-oriented information, such as visited websites.

Bot - A bot is a malware that allows the attacker to remotely control the infected system[10]. Bots take orders from a command and control center (aka C&C) and can be used, for instance, to deploy distributed denial of service (DDoS) attacks or to mine bitcoins.

Rootkit - A rootkit is a malware capable of hiding itself from users and analysis applications. It can operate at any level: user space, kernel space or even below the operating system, by creating a virtual machine[10, 3].

Logic Bomb - A logic bomb is a program that executes a malicious payload when a triggering condition becomes true[1]. For instance, the trigger can be a date, the presence of a file or a mouse click (as in the Upclicker malware[41]).

Dropper - A dropper is a malware that drops other malware on the system. The dropper can be persistent and continuously drop malware, or it can delete itself once the dropped content is installed.

Malware Toolkit - A toolkit is not malicious per se, but it’s capable of generating malware versions by specifying desired features and behavior, such as exploits and targets. Famous malware toolkits are the BlackHole Exploit Kit (whose creator was arrested in 2013[26]) and the Zeus Agent Toolkit.

2.1.2 Malware infection techniques

When designing a malware, the attacker must consider how to infect the system, hide the malware from the user, detect defence systems and optionally evade them. For more details on malware analysis, see section 3.6.

In order to evade signature-based detection and static analysis, a malware can implement one or more of the following techniques:

Encryption - The malware body, or part of it, is usually encrypted or obfuscated, to hide the malicious code and to disrupt antivirus signatures. The encryption can be static or use a key.

Oligomorphism - Oligomorphism is an improvement of encryption. At each infection the malware changes the encryption key and/or the encryption algorithm, choosing from a predefined set[1].

Polymorphism - A polymorphic malware implements the same concepts as oligomorphism, but it uses dynamically generated encryption functions, modifying its code by means of a mutation engine[1]. The mutation engine can operate in various ways, for instance by using equivalent instructions, changing the registers used, introducing concurrency or junk code, or inverting the order of instructions.

Metamorphism - Metamorphism is the process of changing the malware code, including the mutation engine itself, at each infection. A metamorphic malware doesn’t need to encrypt its body to evade signatures, because the code itself is changed at every infection[1].

Packing - Packing is another approach to hide the malware code and to evade signatures. The malware is compressed, encrypted and wrapped by a second program called packer. The duty of the packer is to instantiate a valid environment for the malware, by loading libraries and creating memory segments and API wrappers. Then, the packer decrypts the malware and executes it without writing the code to disk. The packing operation doesn’t need a mutation engine or complex encryption steps for parts of the malware body. On the other hand, once unpacked in memory, the malware is vulnerable to dynamic analysis (see section 3.6).
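The effect of these techniques on byte-level signatures can be illustrated with a minimal sketch, which is not taken from any real sample. It XOR-encodes a harmless placeholder string with different one-byte keys, as an oligomorphic program would do at each infection. The function names, the placeholder body and the use of a SHA-256 hash as a stand-in for a static signature are all assumptions made for illustration.

```python
import hashlib


def xor_encode(data: bytes, key: int) -> bytes:
    """XOR every byte with a one-byte key (applying it twice restores the input)."""
    return bytes(b ^ key for b in data)


def signature(data: bytes) -> str:
    """Stand-in for a static signature: a hash of the stored bytes."""
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder standing in for a program body (hypothetical).
PAYLOAD = b"harmless placeholder body"

# Each "infection" stores the same body under a different key.
variants = {key: xor_encode(PAYLOAD, key) for key in (0x21, 0x42, 0x7F)}

# The stored bytes, and therefore the signatures, all differ ...
distinct_signatures = {signature(v) for v in variants.values()}

# ... although every variant decodes to the same body.
decoded = {xor_encode(v, key) for key, v in variants.items()}
```

Three stored variants yield three unrelated signatures while decoding to one identical body, which is why a signature taken from one variant does not match the next one, and why analysis of the decoded, running code is needed.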

Encryption techniques are also deployed to thwart static binary analysis. If strings, values and the malicious code are encrypted, the instruction flow of the malware is nearly impossible to follow. To overcome these techniques, modern defence systems perform dynamic analysis by executing the malware inside an emulated system. In such cases, a malware has to detect the emulated environment and exhibit malicious behavior only on real machines. This is a typical behavior of logic bombs: if the emulated environment is not detected, then the malicious code is executed. Common dynamic analysis evasion techniques include:

Red pills - Red pills are detection methods which exploit flaws in the CPU emulation. A red pill invokes certain assembly instructions that are known to produce different results inside the emulated environment.

System alteration detection - Emulated systems may present, or lack, devices, services or processes that are respectively missing or present in a real environment.

Sleeping malware - A simple countermeasure to automatic analysis is to wait for it to finish. Real-time defence systems usually perform a time-limited analysis. If the malware sleeps for long enough, the detection could be bypassed.

Wait for user interactions - As in the Upclicker malware, a malicious action could be triggered only when user input is detected. In an emulated environment the human user is missing, so the defence system can be detected by the lack of user actions. Advanced defence systems emulate user interaction to fool such behaviors.

Multi-stage attack - Multi-stage attacks are organized in such a way that the downloaded malware is not complete. The infection process is divided into multiple stages: at each stage the malware downloads another component, which proceeds with the infection[24, 35].
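The interplay between a time-limited analysis and a sleeping sample can be sketched, from the defender's point of view, with a toy model. Nothing here executes or inspects real programs: the `Sample` class, the `analyse` function and the idea of fast-forwarding sleep calls are simplifying assumptions used only to make the reasoning concrete.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """Toy model of a program under analysis (hypothetical)."""
    sleep_seconds: float  # delay before any interesting behaviour
    malicious: bool       # ground truth, unknown to the sandbox


def analyse(sample: Sample, budget_seconds: float, skip_sleeps: bool = False) -> bool:
    """Return True if malicious behaviour is observed within the time budget.

    With skip_sleeps=True the sandbox fast-forwards sleep calls instead of
    waiting for them, so the delay no longer consumes the budget.
    """
    waited = 0.0 if skip_sleeps else sample.sleep_seconds
    return sample.malicious and waited <= budget_seconds


sleeper = Sample(sleep_seconds=600, malicious=True)
missed = analyse(sleeper, budget_seconds=120)                    # budget expires first
caught = analyse(sleeper, budget_seconds=120, skip_sleeps=True)  # delay is skipped
```

In this model the ten-minute delay defeats a two-minute analysis window, while a sandbox that skips the delay observes the behaviour. Real evasion and counter-evasion are far less clear-cut, since a sample may in turn check whether time has been manipulated.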

2.2 Opportunistic and targeted attacks

When dealing with targeted attacks it’s important to identify when an attack can be considered opportunistic and when targeted, in order to distinguish truly dangerous targeted attacks among the countless opportunistic events that may occur in the network.

2.2.1 Opportunistic attacks

In opportunistic attacks, the attacker has a general idea of what to do and chooses the victim among the possible exploitable targets. Recurring motivations for opportunistic attacks may be:

• Building or expanding an illegal infrastructure: the attacker wants to build or extend an illegal infrastructure for phishing, spam or botnets. The target is irrelevant, since the desired outcome is to infect as many hosts as possible.

• Financial gain: bitcoin mining, ransomware and credit card theft are common objectives of opportunistic attackers.

• 15 minutes of fame: any vulnerable website or service is defaced or taken down by a solo attacker or an organization.


Opportunistic attack scenarios have in common the absence of a specific target, resulting in a large number of low-risk attacks, as confirmed by the Verizon data breach analysis[11, 20, 21, 22], which states that:

1. More than 70% of attacks are opportunistic.

2. On average, a system on the internet is scanned 11 minutes after it is connected.

3. 97% of opportunistic attackers try one port and 81% send a single packet.

When the attack involves the use of malware, the malicious software must be designed to infect a generic target, without a priori knowledge of the OS version and of the defence systems involved. It must also be fast to produce, to maximize the time during which it is effective, between the zero-day and the bug fix. Moreover, since the target is unknown, the attacker has to take into account that the malware could infect a honeypot or be analyzed in sandboxes. In order to avoid automatic identification, the malware must contain anti-analysis techniques.

These problems led to the diffusion of malware toolkits that automate malware generation. The result is a set of malware samples, equal in behavior but with different signatures, which are generated every day by the thousands, captured in honeypots and flagged within a few weeks.

2.2.2 Targeted attacks

Targeted attacks differ from opportunistic ones because of the choice of the target. This is the crux that influences every step of the attack process.

As a first difference, the motivations for targeted attacks always share an implicit assumption: the attacker is not going to give up, since the objective is reached only by attacking the specific target.

• Financial gain: the attacker obtains financial benefits by stealing information or by disrupting a service, for instance by executing a DoS against the main website of the target.

• Activism: instead of money, emotions guide the attacker. The objective may be the same as for financial gain, but the attacker is driven, for instance, by political or religious beliefs.

• Cyber warfare: the attacker launches an assault against a nation, targeting strategic objectives.

The second difference is customization. Targeted attack vectors contain evidence that the attacker has specifically selected the recipient of the attack [44]. Emails, for instance, contain evidence of an advanced knowledge of the target beyond address and name: the format, the terminology or the plausibility of the content is a sign of strong information gathering. The malware also differs from that of opportunistic attacks. Targeted malware is specifically designed to bypass the target's defence systems and doesn’t belong to any known family; thus, no signature is available. Unlike opportunistic malware, targeted malware requires more skill for its design and development.

The third difference is organization. An opportunistic attack is an end unto itself, while targeted attacks are usually part of an attack campaign, aimed at causing persistent damage to the target. Once the target is compromised and the objective is reached, the attacker installs a remote access toolkit inside the host to guarantee persistent access. In such a situation, the targeted attack can be called an advanced persistent threat (APT).

2.3 Cyber attack process

At the turn of the 21st century, due to the growth of the internet, illegal activities involving networks and computers were affecting a high number of users. The increasing connectivity also opened attack paths from across the world: with the right resources, cyber warfare operations could be undertaken, threatening the target nation’s security.

While attack taxonomies have existed since the Morris Worm, when the CERT was founded, early works focused on classification schemes for security flaws, like Bishop’s taxonomy of UNIX system and network vulnerabilities [2, 34]. Newer taxonomies addressed the question of which intentions and objectives drive the attacker and how to relate them within a larger attack campaign. However, they are still too tightly bound to the technical details of vulnerabilities and security flaws, and therefore fail to classify a structured and organized targeted attack.

Over the last few years targeted attacks have evolved into specialized and persistent attacks aimed at stealing information, compromising the infrastructure and leaving backdoors to guarantee persistent access. Moreover, attackers and targets may have relationships with organizations or nations, which could help in understanding what the real objective is and how to defend it [34, 44].

These new scenarios, more similar to cyber warfare than to cyber attacks, cannot be handled by the classical taxonomies; thus the security community produced methods to profile attack processes rather than to classify them based on technical properties. Taxonomies moved to a higher level, to be capable of describing multiple actors, the relations between them and the attackers’ modus operandi.


2.3.1 Howard’s Common Language: a first attack process taxonomy

One well-known taxonomy is the Common Language, published by John Howard in 1997 [16]. Howard expanded the existing CERT taxonomies by modelling a primitive attack process. It defines the concept of attacker, who wants to achieve a set of objectives by means of computer attacks. A group of attacks sharing the same attacker, objective and timing is called an incident.

Objective: purpose or end goal of an incident.

Attacker: an individual who attempts one or more attacks in order to achieve an objective. Based on the objective, the attacker can be classified using various terms such as hacker, spy or terrorist.

Attack: a series of steps taken by an attacker to achieve an unauthorized result.

For the taxonomy to be complete, the attack is divided into steps which formalize an attack classification. An attack starts with a tool, which exploits a vulnerability. The exploit allows a series of actions on the target, which may lead to an unauthorized result. A single action on a target is called an event.

Tool: a means to exploit a vulnerability. As Howard states, this is the most difficult connection to define because of the wide variety of methods.

Vulnerability: a weakness in a system allowing unauthorized action.

Action: a step taken by a user or process in order to achieve a result.

Target: a computer or network logical entity or physical entity.

Unauthorized result: a consequence of an event that is not approved by the owner or administrator.
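The relations between these terms can be made concrete with a small data model. The following is only a sketch of how the definitions above nest into each other, assuming one class per concept; the class names follow the terms of the taxonomy, while the field layout and every example value are hypothetical and are not taken from Howard's work.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Event:
    """A single action directed at a target."""
    action: str
    target: str


@dataclass
class Attack:
    """A tool exploits a vulnerability, enabling events that lead to a result."""
    tool: str
    vulnerability: str
    events: List[Event]
    unauthorized_result: str


@dataclass
class Incident:
    """A group of attacks sharing the same attacker, objective and timing."""
    attacker: str
    objective: str
    attacks: List[Attack] = field(default_factory=list)


# Illustrative values only (hypothetical).
incident = Incident(attacker="spy", objective="steal design documents")
incident.attacks.append(
    Attack(
        tool="malicious attachment",
        vulnerability="unpatched document reader",
        events=[Event(action="read", target="file server")],
        unauthorized_result="disclosure of information",
    )
)
```

Modelling the incident as the outer container mirrors the definition given above: the attacker and the objective belong to the incident, while tools, vulnerabilities and events belong to the individual attacks.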

2.3.2 Cyber warfare taxonomy and military doctrine

In 2001, Lieutenant Colonel Lionel D. Alford Jr. published an article proposing a military-oriented taxonomy for cyber crime acts. He recognized the danger of a targeted attack on modern software-controlled components and urged the development of an appropriate defence plan: “Cyber warfare may be the greatest threat that nations have ever faced. Never before has it been possible for one person to potentially affect an entire nation’s security. And, never before could one person cause such widespread harm as is possible in cyber warfare.” [23].

Inspired by military taxonomies, Lt. Col. Alford identified the main stages that occur or may occur during a cyber attack:

Cyber Infiltration (CyI): during this stage, the attacker identifies all the systems that can be attacked, that is, every system that accepts external input. The infiltration can be both physical and remote.

Cyber Manipulation (CyM): following infiltration, an attacker can alter the operation of a system to do damage or to propagate the infection.

Cyber Assault (CyA): following infiltration, the attacker can destroy software and data or disrupt the functionality of other systems.

Cyber Raid (CyR): following infiltration, the attacker can manipulate or acquire data and vital information.

A cyber attack can take place in the context of cyber warfare (CyW) or cyber crime (CyC), depending on whether or not the attack is intended to affect national security. The taxonomy also includes some primitive motivations of actors and their intent. The doctrine distinguishes between intentional cyber warfare attack (IA) and unintentional cyber warfare attack (UA). Those responsible for the cyber attack are called intentional cyber actors.

Despite the military terms, the taxonomy describes actions that occur during targeted attacks, whether the target is a military organization or not. Moreover, the inclusion of both physical and remote access to the computer and network infrastructure is a common point with targeted attack taxonomies.

2.3.3 AVOIDIT an extensible taxonomy

AVOIDIT is an acronym for Attack Vector, Operational Impact, Defense, Information Impact and Target. It’s an often cited taxonomy in the security community because it was the first to introduce the concept of impact of an attack.

AVOIDIT uses five extensible classifications:

Classification by Attack Vector: an attack vector is defined as a path by which an attacker can gain access to a host. The path is defined as a vulnerability exploited by the attacker.

Classification by Operational Impact: the operational impact determines what an attack could do if it succeeded. The classification is based on a custom mutually exclusive list, for instance the compromise of a web resource, a malware installation or a denial of service.

Classification by Defence: classification by defence classifies the attack based on the defence strategy adopted before and after the attack. The defence can involve both a mitigation of the attack damage and a remediation of the exploited vulnerability.

Classification by Informational Impact: the informational impact determines how the targeted sensitive information has been affected by the attack.

• Distort - The information is modified.

• Disrupt - The access is changed or denied, for instance as a consequence of a DoS.

• Destruct - The information is deleted.

• Disclosure - The information is accessed by an unauthorized viewer.

• Discover - Previously unknown information is discovered by the attacker.

Classification by Attack Target: the target of the attack is any attackable entity, such as users, machines or applications.

The AVOIDIT taxonomy makes it possible to classify complex attacks, by considering both the various attack vectors and the informational impact. However, it lacks a classification of physical attacks[40], which are an important aspect of targeted attacks.
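As an illustration of how the five classifications combine into a single description of an attack, the following sketch records one attack as a structured value. The five informational impact categories come from the list above; the record layout, the choice to allow several informational impacts per attack and all example values are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class InformationalImpact(Enum):
    DISTORT = "information is modified"
    DISRUPT = "access is changed or denied"
    DESTRUCT = "information is deleted"
    DISCLOSURE = "information is accessed by an unauthorized viewer"
    DISCOVER = "previously unknown information is discovered"


@dataclass(frozen=True)
class AvoiditRecord:
    """One attack described along the five AVOIDIT classifications."""
    attack_vector: str                 # vulnerability exploited to gain access
    operational_impact: str            # one entry from a mutually exclusive list
    defence: str                       # strategy before or after the attack
    informational_impacts: Tuple[InformationalImpact, ...]
    target: str                        # user, machine, application, ...


# Illustrative classification of a flooding attack (hypothetical values).
record = AvoiditRecord(
    attack_vector="exposed network service",
    operational_impact="Denial of Service",
    defence="mitigation",
    informational_impacts=(InformationalImpact.DISRUPT,),
    target="application",
)
```

Keeping the attack vector, the defence and the target as free-form strings reflects the extensible nature of the taxonomy, whereas the informational impact is a closed set and is therefore modelled as an enumeration.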

2.3.4 Kill chain for targeted attacks

The kill chain for targeted attacks was never published, but was proposed in a white paper by Lockheed Martin [18]. The authors propose a military-inspired intrusion kill chain, with respect to computer network attack (CNA) or computer network espionage (CNE). The model describes the stages the attacker must follow as a chain, thus making it possible to profile past attacks or to identify ongoing ones.

A defence strategy based on the kill chain assumes that any deficiency in one stage will interrupt the entire process.

1. Reconnaissance: intelligence and information gathering phase.

2. Weaponization: create the malware and inject it in a weaponized deliverable.

3. Delivery: transmission of the weapon by means of an attack vector.

4. Exploitation: the malware acts, exploiting the system and compromising the machine.

5. Installation: the malware installs a RAT (remote access tool) or a backdoor to allow the attacker access to the environment.

6. Command&Control: the malware establishes a connection to the attacker's C&C, getting ready to receive manual commands.

7. Actions on Objectives: the attacker takes actions to achieve his objective.
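The assumption that breaking one link interrupts the whole chain can be expressed in a few lines. The sketch below models the seven stages as an ordered enumeration and computes how far an attack gets when the defender manages to block a given set of stages; the function name and the notion of a "blocked" stage are assumptions introduced for illustration.

```python
from enum import IntEnum
from typing import Iterable, Optional


class KillChainStage(IntEnum):
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    EXPLOITATION = 4
    INSTALLATION = 5
    COMMAND_AND_CONTROL = 6
    ACTIONS_ON_OBJECTIVES = 7


def furthest_stage_reached(blocked: Iterable[KillChainStage]) -> Optional[KillChainStage]:
    """Return the last stage the attacker completes, or None if stage 1 is blocked.

    The chain is sequential: the first blocked stage stops everything after it.
    """
    blocked_set = set(blocked)
    reached = None
    for stage in KillChainStage:  # iterates in definition order
        if stage in blocked_set:
            break
        reached = stage
    return reached


# Blocking delivery stops the attack after weaponization, whatever happens later.
result = furthest_stage_reached([KillChainStage.COMMAND_AND_CONTROL, KillChainStage.DELIVERY])
```

Only the earliest blocked stage matters in this model, which is the reason why a defence based on the kill chain tries to detect and interrupt the attack as early as possible.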

2.3.5 Modus Operandi for industrial espionage and targeted attacks

The 2012 paper “Industrial Espionage and Targeted Attacks: Understanding the Characteristics of an Escalating Threat” [44] doesn’t propose a taxonomy, but rather a modus operandi for targeted attacks. The authors recognize the complexity of a targeted attack, and that the targets can also be small industries and not only large corporations or governments.

The paper identifies a typical modus operandi, based on the forensic investigation of multiple targeted attacks:

Incursion: in this phase the attacker attempts to penetrate a network.

Discovery: once inside, the attacker analyzes the network to spread the infection and identify the real objective.

Capture: when a worthy computer is infected, the attacker installs an advanced RAT (remote access toolkit) to improve the stealth capabilities.

Data Exfiltration: the attacker uses the RAT to steal documents, passwords and blueprints. The attacker can also use the advanced capabilities to spread deeper into the network.

2.4 Attack process details

This section describes common approaches used when performing cyber attacks. It’s important to understand how a cyber attack is performed in order to design a proper defence strategy.

2.4.1 Information Gathering

The first stage of every attack is the information gathering, which provides the intelligence needed by every subsequent phase.

Since recon is the very first phase, the attacker will try not to be detected, because an early detection could warn the target and disrupt the entire attack. From the defender's side, this is also the most difficult phase to detect, since most of the information can be gathered in legal ways.


Employees’ personally identifiable information: phone numbers, addresses, biometric records and so forth can be used for the selection of the right target and attack vector.

Network layouts: web and mail servers, their location, software versions and system fingerprints reveal information necessary for the exploitation, installation and C&C phases.

Company files: reports, databases and source code, for instance, could reveal software and useful data.

Company information: information such as partners, services, mergers and acquisitions, facilities and plants could suggest social engineering approaches and attack vectors via physical infiltration.

Organizational information: for a successful social engineering attack, and in order to identify the correct target, the attacker needs the names of the technical staff and of the C-team, organizational charts and corporate structure details.

Work interaction: email content templates, inter-communication protocols, security and authentication mechanisms are essential information for the attack design.

A large amount of public information can be obtained by searching employees’ social network profiles and public calendar entries, and via specific Google searches. Private information can be harder to obtain and often requires the use of social engineering against the company personnel.

2.4.2 Attack vector

Most attacks are performed by creating a malware to be executed inside the target network. However, the malware needs to be disguised, to bypass both security programs and humans, by creating a weaponized deliverable. A typical weaponization is performed by embedding the malware inside a valid program, thus creating a trojan, or by exploiting vulnerabilities of PDF readers or browser plugins to embed executable code inside documents and web pages.

Once the weaponized deliverable is ready, it must be sent to the target machine by means of an attack vector. This phase is the very beginning of the infiltration process. During delivery, the attacker uses all the gathered intelligence to design the correct method to reach the objective.

Email: using a spoofed address and a fake signature, the attacker can play a member of the C-team or a supplier, attaching the weapon to the message.

Message: similar to email, but using a chat-based protocol, for instance Skype, used in many organizations.

File Sharing: malicious software may be distributed by means of a file sharing protocol.

Vulnerabilities: internet services, antiviruses, browsers, OSs and other programs that expose themselves to the internet may have vulnerabilities capable of granting access to an attacker. This vector is dangerous because it can go completely undetected by the logging systems.

Passwords: although this can be considered a vulnerability itself, a weak password is a standalone and easy-to-use vector. A weak password can be broken regardless of any update or security system a sysadmin could install.

Another possible attack path is physical interaction. Using social engineering or disguises, the attacker can try to elude the physical security systems and deploy the malware manually. Another common path is the physical delivery of a storage device, such as a USB stick, combined with social engineering to have it connected to some host.

2.4.3 Entry point infection and propagation

Once the deliverable is deployed, the attacker has infected a host that may not be the target. The infection must then be spread inside the target network, by using automated or manual methods.

2.4.4 Action on Objectives

Once the target is infected, the attacker can take the necessary action to fulfil the objective. Usual actions involve keylogging, data downloading or attacks toward other targets.

Chapter 3

Methodologies for attack prevention, detection and response

ICT security is the result of a competitive evolution between cyber attacks and systems trying to counter them. Taxonomies and process profiling, described in the previous chapter, were designed to understand and identify cyber attacks, in order to create defence systems able to counter them.

Since the first cyber attack taxonomies were based on technical vulnerabilities, the first defence approaches were guidelines for developers on how to prevent, identify and fix security vulnerabilities in software and networks, together with policy enforcement techniques to prevent unwanted behaviors[7]. However, defence methods based on these taxonomies failed to be effective against complex attacks, which can be neither detected nor prevented by patching single vulnerabilities.

In 1986, two years before the Morris Worm and more than a decade before Howard’s taxonomy, Dorothy E. Denning published an intrusion detection model based on real-time monitoring of the system’s audit records[8], defining the concept of intrusion detection and prevention system. IDPS are widely used nowadays, however they are proving ineffective against targeted attacks[15, 12, 27, 44], and part of the security community is moving toward the concept of assumption of breach[14], which states that a company must be aware that no IDPS can be relied upon to stop a targeted attack, so it should invest in a fast and reliable incident response plan.

The following sections describe the common methodologies used as defence against cyber attacks, which are access control models, firewalls, intrusion detection and prevention systems, intrusion response systems, vulnerability assessment and malware analysis.

3.1 Access Control Models

Access control is the process of protecting system resources against unauthorized access, by modelling limitations on the interaction between subjects and objects[39]. The act of accessing a resource may involve using, reading, modifying or physically accessing it. A subject is authorized to access a resource if a policy that permits it exists.

In the field of computer security, access control manages the interaction of users with network and software resources. Generally speaking, users are mapped to multiple subjects and access control assumes that users are already identified and authenticated[37].

The security community produced multiple models for access control, but only three have been widely recognized and used[37], which are Discretionary Access Control, Mandatory Access Control and Role Based Access Control.

3.1.1 Discretionary Access Control

Discretionary access control (DAC) governs the access of a subject to an object by using a policy determined by the owner of the object.

DAC is suitable for a variety of systems and applications thanks to its flexibility, however it doesn’t impose any restriction on the usage of objects, that is, the flow of information is not managed[37]. For instance, a user capable of reading a file may allow other non-authorized users to read a copy of the file without the owner’s permission.

An example of discretionary access control is the UNIX file mode mechanism, where each file has an owner and a group. The owner sets a subset of permissions (read, write and execute) for the owner, the group and other users.
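The owner/group/other check described above can be sketched in a few lines of Python. This is a simplified illustration, not the kernel’s actual algorithm: it ignores the superuser, ACLs and capabilities, and the function name `allowed` and the numeric user and group IDs are hypothetical.

```python
import stat

def allowed(mode, file_uid, file_gid, uid, gids, want):
    """Return True if the classic UNIX mode bits grant `want` ('r', 'w' or 'x')."""
    owner_bit, group_bit, other_bit = {
        "r": (stat.S_IRUSR, stat.S_IRGRP, stat.S_IROTH),
        "w": (stat.S_IWUSR, stat.S_IWGRP, stat.S_IWOTH),
        "x": (stat.S_IXUSR, stat.S_IXGRP, stat.S_IXOTH),
    }[want]
    if uid == file_uid:              # the owner class is evaluated first
        return bool(mode & owner_bit)
    if file_gid in gids:             # then the group class
        return bool(mode & group_bit)
    return bool(mode & other_bit)    # finally everyone else

# The owner (uid 1000, hypothetical) decided: rw for the owner, r for the group.
mode = 0o640
print(stat.filemode(stat.S_IFREG | mode))             # symbolic form of the mode
print(allowed(mode, 1000, 100, 1000, {100}, "w"))     # owner writing
print(allowed(mode, 1000, 100, 1001, {100}, "w"))     # group member writing
```

Note that nothing in this check prevents a group member who can read the file from copying its content elsewhere, which is exactly the information flow limitation of DAC mentioned above.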

3.1.2 Mandatory Access Control

Mandatory access control (MAC) governs the access of a subject to an object by using a policy imposed by the system administrator.

Originally, MAC was tightly coupled to the multi-level security policy[29]. Each object has a security level and each subject has a clearance level. Access to objects must satisfy the following relationships[37]:

Read down: a subject’s clearance must dominate the security level of the object being read.

Write up: a subject’s clearance must be dominated by the security level of the object being written.

This approach imposes some degree of control on the information flow. The write up property ensures that information from higher-level objects cannot be written into lower-level objects.
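The two relationships can be expressed as simple comparisons. The following is a minimal sketch which assumes that levels form a total order; the level names are hypothetical, and real multi-level systems usually use a lattice that also includes categories.

```python
from enum import IntEnum

class Level(IntEnum):
    # Hypothetical levels, ordered from lowest to highest.
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3

def can_read(clearance, object_level):
    """Read down: the subject's clearance must dominate the object level."""
    return clearance >= object_level

def can_write(clearance, object_level):
    """Write up: the subject's clearance must be dominated by the object level."""
    return clearance <= object_level

subject = Level.SECRET
print(can_read(subject, Level.CONFIDENTIAL))   # reading a lower-level object
print(can_write(subject, Level.CONFIDENTIAL))  # writing a lower-level object
```

A subject cleared for SECRET can read a CONFIDENTIAL object but cannot write into it, so SECRET information cannot leak downwards through that subject.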

However, the original definition of MAC was insufficient to handle complex situations, as it ignores critical properties such as intransitivity and dynamic separation of duty[29]. These problems led to a more generic definition of MAC, where the policies are controlled by the system administrator.

3.1.3 Role Based Access Control

Role Based Access Control (RBAC) governs the access of a subject to an object by means of roles, identified in the system. The RBAC model is rich and extendible, because “it provides a valuable level of abstraction to promote security administration at a business enterprise level rather than at the user identity level”[36]. In order to unify ideas from multiple RBAC models and commercial products, NIST published a unified model in [36], defining RBAC as a sequence of four levels. Each level increases the functional capability of the previous one:

1. Flat RBAC: users are assigned to roles, permissions are assigned to roles and users acquire permissions by being members of roles.

2. Hierarchical RBAC: introduces role hierarchies, with inheritance (activation of a role implies activation of all junior roles), activation (no activation assumptions) or both.

3. Constrained RBAC: imposes separation of duties, by limiting the simultaneous activation of certain roles.

4. Symmetric RBAC: adds the ability to review permission-role associations with respect to a defined user or role.
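The first two levels can be illustrated with a small data structure. This is a minimal sketch and not the NIST reference model: the class `RBAC`, its method names and the example roles are hypothetical, and the hierarchy uses the inheritance interpretation, where a senior role acquires the permissions of its junior roles.

```python
class RBAC:
    def __init__(self):
        self.user_roles = {}   # user -> set of assigned roles
        self.role_perms = {}   # role -> set of permissions
        self.juniors = {}      # senior role -> set of direct junior roles

    def assign(self, user, role):
        self.user_roles.setdefault(user, set()).add(role)

    def grant(self, role, permission):
        self.role_perms.setdefault(role, set()).add(permission)

    def add_junior(self, senior, junior):
        self.juniors.setdefault(senior, set()).add(junior)

    def effective_roles(self, user):
        """Assigned roles plus every junior role reachable through the hierarchy."""
        seen = set()
        stack = list(self.user_roles.get(user, ()))
        while stack:
            role = stack.pop()
            if role not in seen:
                seen.add(role)
                stack.extend(self.juniors.get(role, ()))
        return seen

    def check(self, user, permission):
        return any(permission in self.role_perms.get(role, ())
                   for role in self.effective_roles(user))

rbac = RBAC()
rbac.grant("clerk", "read_invoice")
rbac.grant("manager", "approve_invoice")
rbac.add_junior("manager", "clerk")   # manager is senior to clerk
rbac.assign("alice", "manager")
rbac.assign("bob", "clerk")
print(rbac.check("alice", "read_invoice"))    # inherited through the hierarchy
print(rbac.check("bob", "approve_invoice"))   # not granted to clerks
```

Permissions are never attached to users directly, so changing what a clerk may do requires a single change on the role rather than one per user.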

3.2 Firewalls

A firewall is a network security system which analyzes the network traffic, enforcing a policy based on various protocol headers. Packets that do not match the policy are rejected. The survey on firewalls written by Ingham and Forrest in [19] defines as firewall a machine or a collection of machines between two networks meeting the following criteria:

• The firewall is at the boundary between the two networks.

• All traffic between the two networks must pass through the firewall.

• The firewall has a mechanism to allow some traffic to pass while blocking other traffic (policy enforcing).

Firewalls are able to separate a portion of the network, creating a zone of trust, for instance by separating the inner network from the outside network. A trust boundary is needed for security reasons[19]:

• Masking some security problems in OS, by denying the access to internal hosts from the outside.

• Preventing access to certain information present in the outside network, for instance by blocking access to certain web sites.

• Preventing information leaks by analyzing the information contained in the outgoing traffic.

• Logging for audit purposes.

Firewalls can operate as packet filtering or as application layer filtering. Packet filtering firewalls analyze the traffic from the data-link layer to the transport layer of the TCP/IP stack, while application layer firewalls enforce policies at the application layer.

3.2.1 Packet Filters Firewall

Packet filter firewalls use a set of rules to decide whether or not to accept a packet. Packet filters operate up to the transport layer of the ISO/OSI stack and make decisions with rules based on a subset of the following information[19]:

• Source/destination interface.

• Whether the packet is inbound or outbound.

• Source/destination address.

• Options in the network header.

• Transport-level protocol.

• Flags/options in the transport header.

• Source/destination port or equivalent (if the protocol has such construct).
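A stateless packet filter can be sketched as an ordered rule list evaluated with first-match semantics. The sketch below is only illustrative: the `Packet` and `Rule` types are hypothetical, only a few of the fields listed above are modelled, and the default-drop policy is an assumption. The addresses belong to the documentation ranges.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional

@dataclass(frozen=True)
class Packet:
    direction: str               # "in" or "out"
    src: str
    dst: str
    proto: str                   # "tcp", "udp" or "icmp"
    dport: Optional[int] = None

@dataclass(frozen=True)
class Rule:
    action: str                  # "accept" or "drop"
    direction: Optional[str] = None   # None means "any"
    src_net: Optional[str] = None
    dst_net: Optional[str] = None
    proto: Optional[str] = None
    dport: Optional[int] = None

    def matches(self, p):
        return (
            (self.direction is None or self.direction == p.direction)
            and (self.src_net is None or ip_address(p.src) in ip_network(self.src_net))
            and (self.dst_net is None or ip_address(p.dst) in ip_network(self.dst_net))
            and (self.proto is None or self.proto == p.proto)
            and (self.dport is None or self.dport == p.dport)
        )

def decide(rules, packet, default="drop"):
    """Return the action of the first matching rule, or the default policy."""
    for rule in rules:
        if rule.matches(packet):
            return rule.action
    return default

rules = [
    Rule("accept", direction="in", dst_net="192.0.2.10/32", proto="tcp", dport=443),
    Rule("accept", direction="out", src_net="192.0.2.0/24"),
]
print(decide(rules, Packet("in", "198.51.100.7", "192.0.2.10", "tcp", 443)))
print(decide(rules, Packet("in", "198.51.100.7", "192.0.2.10", "tcp", 22)))
```

Every decision is taken from header fields only, so the filter knows which host sent a packet but not which user or process.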

Packet filters can also perform better filtering at the transport level by keeping the state of the connection. This kind of firewall is called a stateful filter. For instance, a stateful firewall can keep track of established TCP connections and of some ICMP communication[19], such as the “echo request”.

Packet filters have high performance and don’t require user interaction, however they are incapable of mapping the network traffic to a user[19]. They can only map the traffic to the host IP, which can lead to the bad practice of using IP addresses and DNS names for access control.

3.2.2 Application Layer Firewall

An application layer firewall works like a packet filter firewall, but operates in the application layer of the ISO/OSI stack. These firewalls are able to interpret various application protocols, such as FTP, HTTP or DNS.

There are two categories of application layer firewalls:

Network-based firewall: the firewall is a computer system inside the network, which operates as a proxy server or reverse proxy for various applications/services. A typical example is a web application firewall (WAF).

Host-based firewall: the firewall operates on top of the host network stack, monitoring the communication made directly by the applications. Unlike network-based firewalls, a host-based firewall can filter packets per process, but it can protect only the host where it is installed.

3.3 Intrusion detection and prevention systems

Intrusion detection is the process of monitoring a set of resources, such as computer systems or networks, and analyzing them to detect intrusions[38]. Intrusion prevention is the ability to stop ongoing attacks or prevent them from happening, by modifying the attack context.

Intrusion detection and prevention systems (IDPS) are software or hardware systems capable of detecting and preventing intrusions, which are attempts to compromise the confidentiality, integrity or availability, or to bypass the security mechanisms, of a computer or network[38, 28]. IDPS, often referred to as IPS, are a superset of Intrusion Detection Systems (IDS), which offer only the detection capability. The following sections, however, will focus on IDS and how to classify them.

3.3.1 Intrusion detection model

The intrusion detection model proposed by Denning in 1986 is independent of system, application, vulnerability and intrusion type. It defines objects, which are used by subjects according to a particular behavior. Any action, expected or unexpected, is recorded.

Subjects: initiators of activity on a target system.

Objects: resources managed by the system, e.g. files, commands, devices, etc.

Audit records: generated by the target system in response to actions performed or attempted by subjects on objects. Each audit record must have a timestamp.

Profiles: structures that characterize the behavior of subjects with respect to objects in terms of statistical metrics and models of observed activity. Profiles are automatically generated and initialized from templates.

Anomaly records: generated when abnormal behavior is detected.

Activity rules: actions taken when some condition is satisfied, which update profiles, detect abnormal behavior, relate anomalies to suspected intrusions and produce reports.
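The interaction between audit records, profiles, anomaly records and activity rules can be sketched as follows. This is a hypothetical, minimal illustration rather than Denning’s specification: the profile uses a running mean and standard deviation as its statistical model, and the threshold of three standard deviations, the minimum sample count and the record layout `(timestamp, subject, object, value)` are assumptions.

```python
import math
from dataclasses import dataclass

@dataclass
class Profile:
    """Running mean and variance of one metric for a (subject, object) pair."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0   # sum of squared deviations (Welford's algorithm)

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def is_abnormal(self, x, k=3.0, min_samples=5):
        if self.n < min_samples:       # not enough history to judge
            return False
        std = math.sqrt(self.m2 / (self.n - 1))
        return abs(x - self.mean) > k * max(std, 1e-9)

def process(audit_records, profiles):
    """Activity rule: emit an anomaly record or update the matching profile."""
    anomalies = []
    for timestamp, subject, obj, value in audit_records:
        profile = profiles.setdefault((subject, obj), Profile())
        if profile.is_abnormal(value):
            anomalies.append((timestamp, subject, obj, value))
        else:
            profile.update(value)
    return anomalies

# Hypothetical audit trail: number of reads per session on one object.
values = [10, 11, 9, 10, 12, 10, 11, 9, 10, 11, 500]
records = [(t, "alice", "/srv/payroll.db", v) for t, v in enumerate(values)]
print(process(records, {}))
```

Abnormal observations are deliberately not folded into the profile, so a single burst does not shift the baseline against which later activity is judged.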

Although the model was designed for an intrusion detection expert system[8], it can be used to describe other IDS. However, the security community didn’t create a standard model or ontology for IDS, resulting in the commercialization of various products with different features and capabilities. Thus, security researchers tried to find a comprehensive taxonomy for the existing IDS.

3.3.2 Taxonomy for intrusion detection systems

The production of taxonomies for intrusion detection systems had a burst in the 2000s. Many papers proposed classifications based on methodology and technology, and other papers were published as surveys and reviews of existing taxonomies. In an attempt to organize issues and classes, Hung-Jen Liao, Chun-Hung Richard Lin, Ying-Chih Lin and Kuang-Yuan Tung published a comprehensive review of IDS in 2012[28]. The following taxonomy is the result of their work.

Intrusion detection systems have four characteristics, each of which has multiple viewpoints. An IDS classification is performed by identifying every viewpoint of each characteristic.

• System Deployment: defines on which technology the IDS is designed to work. Thus, it determines which objects can be monitored.

• Data source: defines which kinds of data are used and how they are gathered. Data is the base of audit records.

• Timeliness: defines the IDS detection granularity and how it reacts. It’s a particular aspect of activity rules.

• Detection Strategy: defines methodologies and strategies for detection and processing. It defines profiles, activity rules and how to generate anomaly records.

System Deployment

System deployment concerns what the system is supposed to monitor and how it’s interconnected with the infrastructure. The deployment consists of three different viewpoints: technology type, networking type and network architecture.

The technology type determines what events the IDS can collect and inspect. There are four basic classes of technology type, plus a mixed one[28]:

• Host-based IDS (HIDS): monitors and collects information from hosts and servers using agents.

• Network-based IDS (NIDS): captures network traffic of specific network segments through sensors and analyzes protocols and communication of applications.

• Wireless-based IDS (WIDS): similar to a NIDS, but sensors capture traffic from the wireless domain.

• Network Behavior Analysis (NBA): works like a NIDS, but inspects the traffic to identify attacks with unexpected traffic flows.

• Mixed IDS (MIDS): is an IDS with multiple technologies.

With the network architecture viewpoint, an IDS is classified based on how it detects attacks[28]:

• Centralized: the IDS detects attacks based on data from a single monitored system.

• Distributed: the IDS is capable of detecting structured attacks that involve multiple systems. The distributed approach can be parallelized, grid-based or cloud-based.

• Hybrid: the IDS uses multiple approaches.

Finally, the networking type identifies how the IDS is interconnected with the system[28], which can be wired, wireless or mixed.

Data Source

The data source determines how the IDS gathers data and which data it can handle. An IDS collects data by means of collection components, which can be software based (agents) or hardware based (sensors).

Data from components can be collected in a centralized or distributed fashion, depending on whether the IDS has a single server which gathers all data or it has intermediate gatherers.

The last viewpoint is the data type, strongly related to the technology type:

• Host logs: related to the host activities, they include system commands, system accounting, system logs and security logs.

• Application logs: logs from a single application.

• Wireless network traffic: includes all the traffic gathered in the wireless domain.

• Network traffic: in addition to network packets, it includes also SNMP information.

Timeliness

Timeliness determines how the IDS behaves in the time dimension:

• Time of Detection: can be “real time/on-line” or “non-real time/off-line”.

• Time granularity: describes when the IDS processes the data for signs of attacks. It can be continuous, periodic or batch.

• Detection-response: determines whether the IDS is also an IDPS. It can be passive, that is the IDS takes no countermeasures against the detected attack, or active, if the IDS tries to prevent the attack.

Detection Strategy

Once the data is gathered, the detection strategy determines how the information is produced. The detection discipline viewpoint establishes how the state of insecureness is handled: if an IDS recognizes two states (secure and insecure), it is called state based, while if it recognizes the transitions from secure to insecure, and vice versa, it is called transition based. Both state and transition based IDS can evaluate the state using a non-obstructive or a stimulating evaluation, depending on whether the IDS infers the system status by passively monitoring the systems or obtains other data by interacting with them.

The processing strategy viewpoint, as for the data collection viewpoint of data source, can be centralized or distributed.

Finally, the detection methodology is one of the most important aspects, because it determines how the IDS recognizes a threat[28]. There are three possible methodologies:

• Signature-based: the IDS searches the logs for known strings or patterns associated with a threat. It is also known as knowledge-based detection.

• Anomaly-based: the IDS detects anomalies, which are deviations from a known behavior (profile). A profile can be either static or dynamic. It is also known as behavior-based detection.

• Specification-based: the IDS understands various protocols and keeps track of their state, searching for anomalous behaviors. It’s called stateful protocol analysis and differs from anomaly-based detection because the protocol profiles are generic and low-level.
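As an illustration of the signature-based methodology, the following minimal sketch scans log lines for known patterns. The two signatures and the log lines are hypothetical and far simpler than the rule sets of real products.

```python
import re

# Hypothetical signatures: name -> pattern associated with a known threat.
SIGNATURES = {
    "path-traversal": re.compile(r"\.\./\.\./"),
    "sql-injection": re.compile(r"(?i)union\s+select"),
}

def scan(lines):
    """Return (line number, signature name) for every signature match."""
    alerts = []
    for number, line in enumerate(lines, start=1):
        for name, pattern in SIGNATURES.items():
            if pattern.search(line):
                alerts.append((number, name))
    return alerts

log = [
    "GET /index.html",
    "GET /../../etc/passwd",
    "GET /item?id=1 UNION SELECT pass FROM users",
    "GET /item?id=1 UNION/**/SELECT pass FROM users",   # trivial variant
]
print(scan(log))
```

The last request is a trivial variant of the third one, yet it raises no alert: the approach is simple and effective for known patterns, but it misses variants until a new signature is written.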

3.3.3 IDS comparison

Intrusion detection systems are often specialized in particular fields and technologies, thus a comparison between them results in a list of pros and cons. However, knowing the strengths and limitations of different IDS is necessary to build a complete defence system. The major comparisons are based on technology type (see table 3.2) and detection methodology (see table 3.1)[28].

Signature-based

Pros

• Simple and effective against known attacks.

• Detailed contextual analysis.

Cons

• Ineffective against unknown attacks or variants of known attacks.

• Little understanding of states and protocols.

• Need to keep signatures and patterns up to date.

Anomaly-based

Pros

• Effective against new and unforeseen vulnerabilities.

• Less dependent on OS.

• Facilitates detection of privilege abuse.

Cons

• Weak profile accuracy, because observed events constantly change.

• Difficult to trigger alerts at the right time.

Specification-based

Pros

• Knows and traces the protocol states.

• Distinguishes unexpected sequences of commands.

Cons

• Resource consuming due to protocol state tracing and examination.

• Unable to detect attacks conforming to the protocol.

• Too dependent on OSs or APs.

Table 3.1: Pros and cons of IDS detection methodologies[28]

HIDS

Pros

• Can analyze end-to-end encrypted communication.

Cons

• Lack of context knowledge makes accurate detection difficult.

• Delays in alert generation and centralized reporting.

• Consumes host resources.

• May conflict with existing security controls.

NIDS

Pros

• Can analyze the broadest scope of AP protocols.

Cons

• Cannot monitor wireless protocols.

• High false positive and false negative rates.

• Cannot passively detect attacks within encrypted traffic.

• Difficult analysis under high loads.

WIDS

Pros

• Narrow focus allows a more accurate detection.

• Only technology able to supervise wireless protocol activities.

Cons

• Cannot monitor application, transport and network level protocol activities.

• Physical jamming attacks against sensors are possible.

NBA

Pros

• Able to detect reconnaissance scanning.

• Can reconstruct malware infections.

• Can reconstruct DoS attacks.

Cons

• Transferring flow data to the NBA in batches causes a delay in attack detection.

Table 3.2: Pros and cons of IDS technology types[28]

3.3.4 Intrusion prevention capabilities

The previous taxonomy focused on intrusion detection, because intrusion prevention is strongly related to detection and may be seen as an additional capability, rather than a factor for further classification[28, 38].

The NIST guide to intrusion detection and prevention systems[38] describes the prevention capabilities for each IDS technology type.

Network-based IDPS and network behavior analysis IDPS operate in similar ways, by altering the network communication. Such IDPSs can:

• Perform inline firewalling by dropping or rejecting suspicious network activity.

• Throttle bandwidth usage, in case of DoS attacks, file sharing or malware distribution.

• Alter malicious content, by active sanitization of the payload.

• Reconfigure other network security devices, such as firewalls, routers and switches, to block certain activities, route them toward a decoy or quarantine an infected system.

• Trigger a response plan (see section 3.4).

A wireless-based IDPS operates directly by terminating the malicious wireless session and preventing a new connection from being established or, if a wired link is present, the WIDPS can instruct routers and switches connected to the access point to block the suspicious network activity.

A host-based IDPS agent operates directly on the host, thus it can perform multiple prevention actions:

• Prevent malicious code from being executed.

• Prevent some applications from invoking certain processes, for instance to prevent network applications from executing shells.

• Prevent network traffic from being processed by the host.

• Prevent suspicious outgoing traffic from being sent to the network.

• Reconfigure the local firewall.

• Enforce policies dynamically.

• Prevent access to, reading of or usage of some files, which could stop malware installation.

3.4 Intrusion Response Systems

Intrusion detection systems are in continuous development, and new techniques for detection and prevention are constantly being refined. However, when an attack is detected, an IDS doesn’t offer full support for an automated countermeasure to the attack[43].

From a high-level perspective, the process of intrusion response involves the following actions[13, 30]:

• Contain the effect of an attack, in case of a multi-staged attack or an APT.

• Recover affected services.

• Take longer term actions, such as reconfiguration or patching, to prevent further attacks.

• Gather and handle evidence for law enforcement.

• Minimize the effect of information leaks.

The process of responding to an ongoing or concluded attack is a highly heterogeneous, system dependent problem, and it has received less attention from the security community[43, 13, 14]. However, the increasing complexity of systems and attacks has made manual intrusion response inadequate, and the cost of maintaining an effective incident response team has risen.

An intrusion response system (IRS) is a system capable of automating and easing the incident response steps. In 2007, Stakhanova proposed a taxonomy for IRS[43] to provide a foundation for further work on intrusion response.

3.4.1 Taxonomy for Intrusion Response System

Intrusion response systems can be classified according to the activity of triggered response and to the degree of automation[43].

Activity of Triggered Response

The activity of triggered response determines how an IRS responds when a new attack is detected. The response can be passive or active, depending on whether the IRS only notifies the system administrator and provides attack information, or attempts to contain the damage or to locate or harm the attacker. Common passive responses are:

• Notify the administrator by generating an alarm.

• Generate a comprehensive report of the attack.

• Enable additional IDS or logging activity.

• Enable forensic and information gathering tools.

An active response can operate on hosts or on the network. Host-based response actions can be:

• Deny access to files.

• Let the attacker operate on fake files.

• Restore or delete infected files.

• Restrict user activity or disable the user account.

• Shut down or disable compromised services or hosts.

• Terminate suspicious processes.

• Abort or delay suspicious system calls.

Network-based response actions can be:

• Enable or disable additional firewall rules.

• Block suspicious incoming/outgoing network connections.

• Block ports or IP addresses.

• Trace connections to perform attacker isolation or quarantine.

• Create a remote decoy or honeypot.

Degree of automation

Based on the degree of automation, an IRS can be categorized as:

Notification system: provides information about the intrusion. The system administrator has the duty of performing an intrusion response based on the notification information.

Manual response system: provides a higher level of automation, by presenting the attack log and a set of pre-determined responses to be launched by the administrator.

Automatic response system: as opposed to the manual response system, the automatic response system provides immediate response through an automated decision-making process.

Automatic response support is very limited, but many IRS offer a high level of automation. Thus, automatic response systems can be further classified by the ability to adjust, the time instance of the response, the cooperation ability and the response selection mechanism.

The ability to adjust determines whether an IRS is capable of dynamically adapting the response selection to the changing environment during the attack. A static approach is simple and easy to maintain, but it requires periodic upgrades, and the effectiveness of the response plan is truly tested only during an attack. On the other hand, an adaptive approach can operate in many ways, for instance by adjusting the system resources devoted to intrusion response or by reasoning on the success or failure of previous responses.

The time instance of the response classifies an IRS based on when the response plan is activated with respect to the attack time. A proactive approach tries to foresee the incoming intrusion before the attack has affected the resource. A delayed approach applies the response plan once the attack has been confirmed. Although a proactive system can execute more effective response actions, its design is complex and a high false positive rate in attack prediction can trigger inappropriate responses.

The cooperation capability relates a set of IRS by their intercommunication. A cooperative IRS, in contrast with an autonomous IRS, can cooperate with other systems to provide global detection and response.

The last characteristic is the response selection mechanism, which determines how the IRS selects the response plan based on the alarm and the environment conditions. A static mapping may be seen as an automated manual response system: the system administrator maps a response plan to certain alarms and the IRS automates the response actions. However, this approach seems to be infeasible for large systems with vast and complex threat scenarios. A dynamic mapping, instead, also takes into account custom metrics, such as the confidence or severity of the attack. The response plan is then formulated in real time based on both alarms and environment. Finally, a more business oriented approach is the cost-sensitive mapping, which determines the optimal response based on the balance between the intrusion damage and the response cost.
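A cost-sensitive mapping can be illustrated with a very small decision rule. The cost model below is an assumption made only for the sake of the example, and it is not taken from [43]: each response has an operational cost and avoids a fraction of the expected damage, the expected damage is weighted by the alarm confidence, and the IRS picks the response with the lowest total. All names and numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Response:
    name: str
    cost: float              # operational cost of applying the response
    damage_reduction: float  # fraction of the expected damage avoided (0..1)

def select_response(expected_damage, confidence, responses):
    """Pick the response minimizing response cost plus residual expected damage."""
    def total_cost(response):
        residual = expected_damage * confidence * (1 - response.damage_reduction)
        return response.cost + residual
    return min(responses, key=total_cost)

RESPONSES = [
    Response("no action", cost=0, damage_reduction=0.0),
    Response("block source IP", cost=5, damage_reduction=0.6),
    Response("shut down service", cost=50, damage_reduction=0.95),
]

print(select_response(100, 0.9, RESPONSES).name)    # likely attack, moderate damage
print(select_response(100, 0.02, RESPONSES).name)   # probable false alarm
print(select_response(1000, 0.9, RESPONSES).name)   # likely attack, severe damage
```

With these numbers a low-confidence alarm leads to no action, because every response costs more than the damage it is expected to avoid, while a severe and likely attack justifies the most disruptive response.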

3.5 Vulnerability Assessment

Vulnerabilities are the gateways by which threats are manifested[4]; in other words, vulnerabilities are attack paths for both opportunistic and targeted attackers. Vulnerability assessment is the process of identifying, quantifying and prioritizing the vulnerabilities in a system.

Vulnerability assessment should be performed for externally and internally reachable systems and for single hosts, in order to protect against remote attacks and against malware spreading and infection. Vulnerabilities that can be encountered include:

• Application misconfiguration - for instance by allowing unauthenticated users to perform write actions or by permitting the execution of external applications.

• Unpatched system - system vulnerabilities are published by means of bug reports and CVE (Common Vulnerabilities and Exposures) entries. Once a vulnerability has been disclosed, the system is to be considered vulnerable until a patch is released and applied.

• Default configuration - many applications come with a default configuration, such as passwords, open ports or seeds, which can be easily exploited.

The action of securing the company systems doesn’t scale well with the company size and complexity, thus the vulnerability assessment should be expanded into an organized vulnerability management process able to control information security risk[33]. An example of vulnerability management process is[33]:

1. Preparation: the process starts by organizing vulnerability scans into meaningful and limited groups of systems. The preparation should also determine which kind of scan to employ (i.e. external, internal or host-based).

2. Initial vulnerability scan: during this phase, the scans defined in the preparation phase are executed. The result is a list of vulnerabilities.

3. Remediation phase: after the vulnerabilities are obtained, each one of them must be quantified and prioritized for remediation. The feasibility, the risk and the amount of work required depend on the type and rank of the vulnerability, the kind of affected system/application and the corrective measure required (e.g. patch or reconfiguration). The result of this phase is a work plan.

4. Implement remediating actions: the remediation work plan is applied. If a corrective action fails, alternatives must be discussed.

5. Rescan: a second scan is performed to test the mitigation plan. If a mitigation action wasn’t successful, new corrective actions must be discussed and applied.
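The remediation and rescan phases can be sketched as follows. The ranking rule (severity multiplied by asset criticality) is a simplifying assumption and not the method of [33]; the host names and vulnerability identifiers are placeholders, not real CVE entries.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Finding:
    host: str
    vuln_id: str       # placeholder identifier, not a real CVE entry
    severity: float    # 0 (low) to 10 (critical), as reported by the scanner
    criticality: int   # 1 (test system) to 3 (business critical)

def work_plan(findings):
    """Remediation phase: order the findings by a simple risk score."""
    return sorted(findings, key=lambda f: f.severity * f.criticality, reverse=True)

def still_open(initial, rescan):
    """Rescan phase: findings of the first scan that are still reported."""
    remaining = {(f.host, f.vuln_id) for f in rescan}
    return [f for f in initial if (f.host, f.vuln_id) in remaining]

initial_scan = [
    Finding("test-box", "VULN-A", severity=9.8, criticality=1),
    Finding("mail-server", "VULN-B", severity=7.5, criticality=3),
    Finding("intranet", "VULN-C", severity=4.0, criticality=2),
]
plan = work_plan(initial_scan)
print([f.vuln_id for f in plan])

# Hypothetical rescan in which only VULN-C is still reported.
second_scan = [initial_scan[2]]
print([f.vuln_id for f in still_open(initial_scan, second_scan)])
```

In this example the critical mail server is remediated before the test system, even though the latter has the higher raw severity.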

3.6 Malware analysis

Executables and active documents can exhibit malicious behaviors, threatening the safety of the system (see section 2.1). Defence systems try to detect malware by performing an automatic analysis.

The first approach is static analysis, which analyzes the malware without executing it. From static analysis, information such as strings, call graphs, parameter values and known signatures can be extracted[10]; however, this approach can be drastically complicated by packing, self-modifying code, ciphers and code disalignment.

Therefore, the security community is continuously developing advanced methods to analyze malware actions while executing the malware itself[10]. This approach is called dynamic analysis.

3.6.1 Dynamic analysis information

The dynamic analysis detects malicious software by observing the malware behavior and detecting malicious actions. Malicious actions are identified on how the malware interact with the environment, thus many activities can be observed:

Filesystem access - Malware usually drop other stages or modified

ver-sion of them, by writing executable on the disk. They can also access configuration files or documents for discovering vulnerabilities or steal-ing data.

Network activity analysis - The majority of malware communicates with

remote C&C or tries to scan the network to spread the infection. By analyzing the network communication, the analyst can obtain infor-mation on remote location, protocol and spreading methods.

Process interactions - A malware can interact with other process to hide

its execution, self delete the executable or exploit a running process.

Inter-process synchronization - Inter-process synchronization requires

the creation of files or named mutex. Although not a malicious action by itself, it can provide additional information.

Registry/System configuration access - The system configuration can be read or written for various reasons. Examples of suspicious behavior are searching for information about installed programs, such as the antivirus, or inserting the executable into the autostart list.

3.6.2 Dynamic analysis techniques

Information about malware behavior can be obtained using multiple approaches. This section describes techniques for gathering such information.

System Calls Monitoring

In order to act on the system, malware needs to access the system calls of the OS. Windows, for instance, offers a set of APIs (e.g. functions in kernel32.dll), a set of native APIs and system calls, which are direct invocations of the kernel. The native API is a bridge between the API and the system calls.

By analyzing the sequence of API calls and their parameters, the analysis system can track the malware behavior. The call monitoring is performed by means of function call hooks[10], which can be implemented using:


• Binary rewriting: binary rewriting consists in overwriting the first bytes of every called function with a jmp to a hook function. The hook function must re-implement the overwritten instructions and return to the original code[17].

• Debugging: a program can debug the malware by inserting breakpoints at every call.

• Dynamic linked libraries replacing: the DLLs used by the malware can be replaced with custom DLLs simulating the expected behavior.
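The interposition idea shared by these techniques can be sketched at a much higher level than machine code. The following example replaces a Python module attribute with a wrapper that records the call and its parameters before forwarding to the original function. It is only an analogy: it does not rewrite binary code, and the names `install_hook` and `call_log`, as well as the monitored function, are assumptions chosen for illustration.

```python
import functools
import os.path

call_log = []  # entries of the form (function name, positional args, keyword args)


def install_hook(module, name):
    """Replace module.name with a wrapper that logs the call, then forwards it."""
    original = getattr(module, name)

    @functools.wraps(original)
    def hook(*args, **kwargs):
        call_log.append((name, args, kwargs))   # record the call and its parameters
        return original(*args, **kwargs)        # continue with the original code

    setattr(module, name, hook)
    return original  # returned so that the hook can be removed later


original = install_hook(os.path, "basename")
result = os.path.basename("/tmp/dropper.exe")   # the monitored code calls the API
os.path.basename = original                     # uninstall the hook
print(result, call_log)
```

As with a real hook, the monitored code receives the same return value it would have obtained without monitoring, while the analysis system obtains the sequence of calls and their arguments.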

Information Flow Tracking

Information flow tracking is an approach orthogonal to system call monitoring[10]. The idea is to mark (taint) a memory location with a label and follow its usage and relationships during the execution.

The information flow tracking uses the following concepts[10]:

• Taint source: introduces new labels into the system.

• Taint sink: reacts to tainted inputs.

• Direct data dependency: dependency created by a direct assignment or an arithmetic operation (see figure 3.1).

• Address dependency: created when a tainted value is used as the address for a memory read or write operation (see figure 3.2).

• Control flow dependency: created when a variable is conditionally assigned based on a tainted value (see figure 3.3).
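The first four concepts can be sketched with explicit label propagation. The example below is a minimal illustration under stated assumptions: the class `Tainted` and the helpers `taint_source`, `multiply`, `load` and `taint_sink` are hypothetical names, and a real system tracks labels per memory location at the instruction level rather than through wrapper objects. Control flow dependencies are deliberately left out, since they require instrumenting branches.

```python
class Tainted:
    """A value together with the set of taint labels it depends on."""

    def __init__(self, value, labels=()):
        self.value = value
        self.labels = frozenset(labels)


def value_of(v):
    return v.value if isinstance(v, Tainted) else v


def labels_of(v):
    return v.labels if isinstance(v, Tainted) else frozenset()


def taint_source(value, label):
    """Taint source: introduce a new label into the system."""
    return Tainted(value, {label})


def multiply(a, b):
    """Direct data dependency: the result inherits the labels of both operands."""
    return Tainted(value_of(a) * value_of(b), labels_of(a) | labels_of(b))


def load(memory, address):
    """Address dependency: the loaded value inherits the labels of the address."""
    cell = memory[value_of(address)]
    return Tainted(value_of(cell), labels_of(cell) | labels_of(address))


alerts = []


def taint_sink(name, v):
    """Taint sink: react (here, record an alert) when a tainted value arrives."""
    if labels_of(v):
        alerts.append((name, sorted(labels_of(v))))


x = taint_source(2, "network")   # e.g. a value read from the network
b = multiply(x, 3)               # b = x * 3
c = load([10, 20, 30], x)        # c = memory[x]
taint_sink("send", c)            # tainted value: recorded
taint_sink("send", 5)            # untainted constant: ignored
print(b.value, sorted(b.labels), c.value, alerts)
```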

Pure dynamic information flow tracking can be easily evaded by using a semantic dependency[10] (see figure 3.4). The value of x influences the value of v through an implicit dependency, which can be discovered only by analyzing the unexplored branch. This can be done with static analysis of the branch, or using multipath techniques[10].

    // x is tainted
    a = x;
    b = x * 3;

    // Now both a and b are tainted and dependent on x

Figure 3.1: Direct data dependency

    // x and y are tainted

    a = x[0];
    // Now a is tainted and dependent on x

    b = v[y];
    // Now b is tainted and dependent on y

Figure 3.2: Address dependency

    // x is tainted
    if (x == 0)
        a = 1;
    else
        a = 0;

    // Now a is tainted and dependent on x

Figure 3.3: Control flow dependency

    // x is tainted
    a = 0; b = 0;
    if (x == 1) a = 1; else b = 1;
    if (a == 0) v = 0;
    if (b == 0) v = 1;

    // If x is 1, a is tainted and dependent on x, but v is not
    // If x is 0, b is tainted and dependent on x, but v is not

Figure 3.4: Tainting evasion
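The evasion in figure 3.4 can be reproduced with a small simulation. The sketch below assumes a tracker that applies control flow dependencies only to assignments that are actually executed, which is what a purely dynamic tracker observes. The function `run_figure_3_4` and its bookkeeping dictionaries are hypothetical, written only to replay the figure.

```python
def run_figure_3_4(x_value):
    """Replay figure 3.4, tracking taint only along the executed path."""
    val = {"x": x_value, "a": 0, "b": 0, "v": None}
    taint = {"x": True, "a": False, "b": False, "v": False}

    def assign(var, value, guards):
        """Assignment executed inside a branch whose condition reads `guards`."""
        val[var] = value
        taint[var] = any(taint[g] for g in guards)  # control flow dependency

    # if (x == 1) a = 1; else b = 1;
    if val["x"] == 1:
        assign("a", 1, ["x"])
    else:
        assign("b", 1, ["x"])
    # if (a == 0) v = 0;
    if val["a"] == 0:
        assign("v", 0, ["a"])
    # if (b == 0) v = 1;
    if val["b"] == 0:
        assign("v", 1, ["b"])
    return val["v"], taint


for x in (0, 1):
    v, taint = run_figure_3_4(x)
    print(f"x={x} -> v={v}, v tainted: {taint['v']}")
```

In both runs v ends up equal to x, yet it is never marked as tainted: the assignment to v is always guarded by the variable that was not modified, and therefore not tainted, on the executed path.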

Multipath analysis

Multipath analysis is an extension of information flow tracking, proposed by Moser in 2007[31]. It makes it possible to analyze multiple execution paths by recording dependencies and constraints between memory locations and forcing a new consistent state at each branch.
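The idea of forcing a consistent state at a branch can be illustrated with a deliberately simplified sketch. It makes several assumptions that are not taken from [31]: the program has a single tainted integer input, branches only test it for equality with a constant, and each path is explored by re-executing the program from the start with forced branch outcomes. The names `explore`, `force`, `Infeasible` and `bot` are hypothetical.

```python
import itertools


class Infeasible(Exception):
    """A forced branch outcome contradicts the constraints recorded so far."""


def force(env, const, outcome):
    """Rewrite the input so that `input == const` evaluates to `outcome`."""
    if outcome:
        if const in env["neq"] or env["eq"] not in (None, const):
            raise Infeasible
        env["value"] = const
    else:
        if env["eq"] == const:
            raise Infeasible
        if env["value"] == const:
            env["value"] = next(v for v in itertools.count()
                                if v != const and v not in env["neq"])


def explore(program, observed_input):
    """Run `program` once per feasible path; return {branch outcomes: result}."""
    results = {}
    pending = [()]                      # prefixes of forced outcomes still to run
    while pending:
        forced = pending.pop()
        taken = []                      # branch outcomes decided on this run
        env = {"value": observed_input, "eq": None, "neq": set()}

        def equals(const):
            """Branch on `input == const`, forcing the outcome when requested."""
            if len(taken) < len(forced):
                outcome = forced[len(taken)]
                force(env, const, outcome)
            else:
                outcome = env["value"] == const
                pending.append(tuple(taken) + (not outcome,))  # other side, later
            if outcome:                 # record the constraint on the input
                env["eq"] = const
            else:
                env["neq"].add(const)
            taken.append(outcome)
            return outcome

        try:
            result = program(equals)
        except Infeasible:
            continue
        results[tuple(taken)] = result
    return results


def bot(equals):
    """Hypothetical sample whose behavior depends on a received command."""
    if equals(1):
        return "download"
    if equals(2):
        return "delete"
    return "idle"


print(explore(bot, observed_input=0))
```

With the observed input 0 a single execution would only reveal the "idle" behavior. Forcing the other branch outcomes, while keeping the input consistent with the constraints already recorded, also reveals the "download" and "delete" behaviors.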

Usually the taint sources are values obtained from the environment, such as network communication, the date or files. When registering a dependency between a tainted value and a new memory location, the system also registers the constraints between the values. When the program reaches a

![Table 3.1: Pros and cons of intrusion detection methodologies[28]](https://thumb-eu.123doks.com/thumbv2/123dokorg/7453936.101232/29.892.138.685.570.1022/table-pros-cons-intrusion-detection-methodologies.webp)

![Table 3.2: Pros and cons of IDS technology types[28] 3.3.4 Intrusion prevention capabilities](https://thumb-eu.123doks.com/thumbv2/123dokorg/7453936.101232/30.892.212.753.168.783/table-pros-cons-technology-types-intrusion-prevention-capabilities.webp)